AWS re:Invent: Apache Kafka takeaways

What you missed, from MSK migration to security in the cloud

If you've ever been to AWS re:Invent in Vegas, you'll know it's a crazy ride.

Unfortunately this year it had to be hosted virtually (at least we have cleaner consciences and healthier wallets).

But the content was as high quality as ever.

We've been binging on sessions until the bitter end (it officially ended on Friday), so for our community, here is a summary of a few talks related to Apache Kafka.

How New Relic is migrating its Apache Kafka cluster to Amazon MSK

Anyone running a large Kafka cluster will be able to relate to New Relic's story.

They've been running Kafka for years to collect data from their customers. This feeds a data lake that underpins their SaaS observability services.

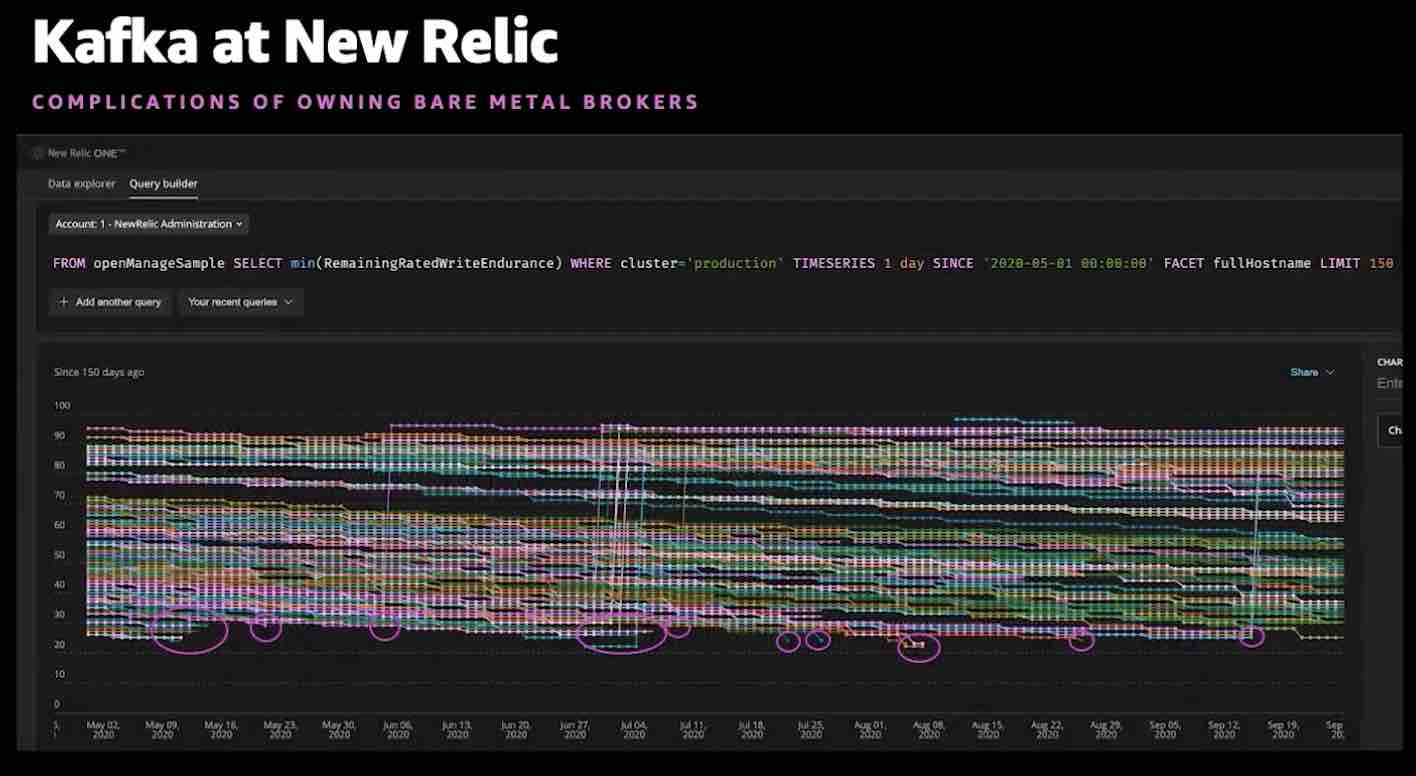

But their cluster became a monster to manage. It was processing 40 GB/s, and their operations team felt every single GB: maintaining the environment was hugely complex and costly.

With everything on a single cluster, their blast radius was huge. An outage they had in 2018 highlighted the level of risk in this approach.

It meant that every change request needed extra care, which was hurting their ability to develop services for their customers.

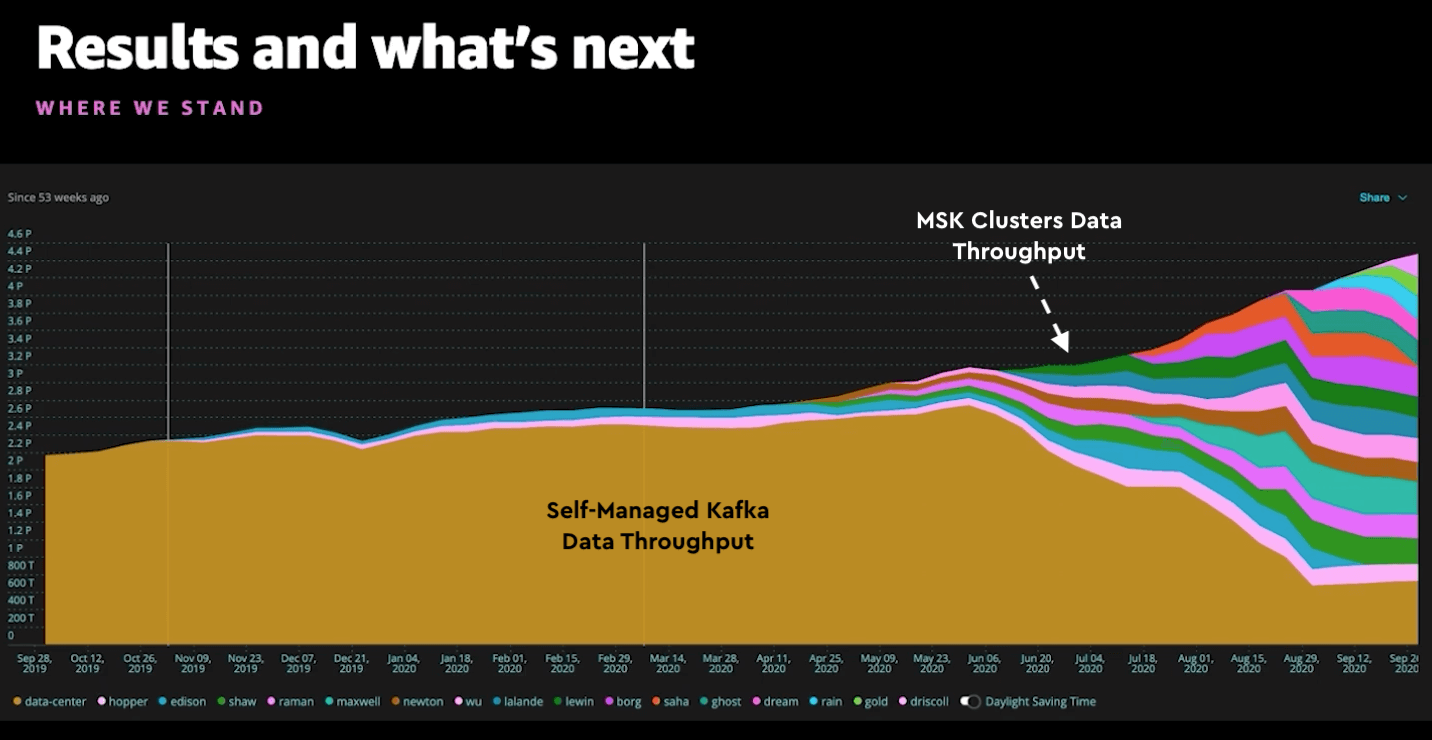

They talk through their approach to migrating to a large number of small Amazon Managed Streaming for Apache Kafka (MSK) clusters. But moving to MSK wasn't just a lift-and-shift.

For example, the hardware profile of MSK is different from their self-managed environment. Their disk throughput is now far lower, but this is compensated for by the greater flexibility of attaching storage as EBS volumes, which is especially useful when they have to rebalance data across brokers.

They also go through the enhancements they've been working on with the AWS product team. These include exposing more infrastructure JMX metrics through MSK's Open Monitoring framework, and a "Customer Canary" feature that injects synthetic events into a topic to detect when a broker may have an issue.
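To make the canary idea more concrete, here is a minimal Python sketch of the detection side only. It assumes a hypothetical setup where one synthetic event is produced to every partition of a canary topic and its produce-to-consume latency is recorded (or `None` if it never arrived). Since each partition has a single leader broker, lost or slow canaries can be attributed to brokers. The `suspect_brokers` helper, the 2-second threshold and the input shapes are illustrative assumptions, not how the MSK feature is actually built.

```python
from typing import Dict, Optional, Set


def suspect_brokers(
    partition_leaders: Dict[int, int],
    canary_latency_ms: Dict[int, Optional[float]],
    threshold_ms: float = 2000.0,
) -> Set[int]:
    """Return IDs of brokers whose canary events were lost or too slow.

    partition_leaders: partition number -> ID of the broker leading it.
    canary_latency_ms: partition number -> produce-to-consume latency of that
        partition's synthetic event, or None if it was never consumed.
    """
    suspects: Set[int] = set()
    for partition, leader in partition_leaders.items():
        latency = canary_latency_ms.get(partition)
        # A missing or late canary counts against the partition's leader broker.
        if latency is None or latency > threshold_ms:
            suspects.add(leader)
    return suspects


# Example: partition 1's canary never arrived and partition 3's took 5.2 s.
leaders = {0: 1, 1: 2, 2: 3, 3: 1}
latencies = {0: 35.0, 1: None, 2: 40.0, 3: 5200.0}
print(sorted(suspect_brokers(leaders, latencies)))  # [1, 2]
```

A real canary would also need to tell broker faults apart from client-side or network problems, for example by running canaries from more than one location.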

As for results, they explain how breaking up their self-managed monolithic Kafka cluster into small MSK clusters has increased how much data they can process, removed performance bottlenecks and driven further growth in their Kafka adoption. They are also able to release new features to their customers more quickly.

Guide to Apache Kafka replication and migration with Amazon MSK

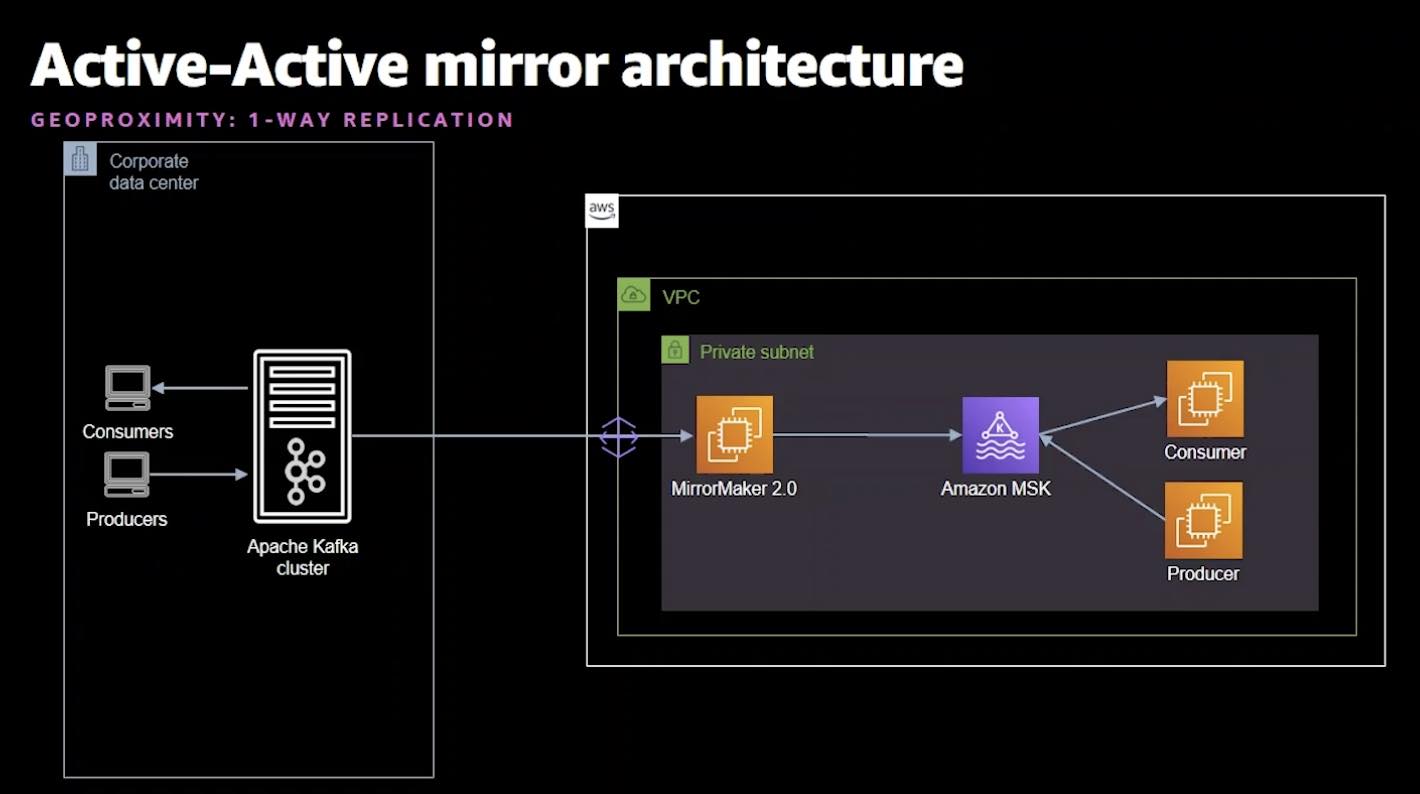

New Relic touched on their approach to migrating to MSK. This talk from the AWS team digs deeper into the topic, focusing on MirrorMaker 2.

They present a number of architectural patterns for moving data across Kafka clusters, covering everything from a one-off migration to Kafka to HA and DR configurations, including active/active, active/passive, replication for geo-locality, and replication from multiple clusters into a centralized cluster.
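In MirrorMaker 2, the difference between active/passive and active/active largely comes down to which replication flows are enabled. As a sketch, this Python function renders an MM2 properties file using MM2's `clusters`, `<alias>.bootstrap.servers`, `<source>-><target>.enabled` and `<source>-><target>.topics` settings. The `mm2_properties` helper, the cluster aliases and the bootstrap addresses are hypothetical, and a production config would also need security and replication-factor settings.

```python
from typing import Dict, List, Tuple


def mm2_properties(bootstrap: Dict[str, str],
                   flows: List[Tuple[str, str]],
                   topics: str = ".*") -> str:
    """Render a MirrorMaker 2 properties file.

    bootstrap: cluster alias -> bootstrap.servers value.
    flows: (source alias, target alias) replication flows to enable.
    topics: regex of topics replicated on every enabled flow.
    """
    lines = ["clusters = " + ", ".join(bootstrap)]
    for alias, servers in bootstrap.items():
        lines.append(f"{alias}.bootstrap.servers = {servers}")
    for source, target in flows:
        if source not in bootstrap or target not in bootstrap:
            raise ValueError(f"unknown cluster alias in flow {source}->{target}")
        lines.append(f"{source}->{target}.enabled = true")
        lines.append(f"{source}->{target}.topics = {topics}")
    return "\n".join(lines) + "\n"


# Hypothetical aliases and addresses.
clusters = {"primary": "primary-broker:9092", "dr": "dr-broker:9092"}

# Active/passive: a single flow, primary -> dr; the DR cluster stays on standby.
active_passive = mm2_properties(clusters, [("primary", "dr")])

# Active/active: both clusters take writes and replicate to each other.
active_active = mm2_properties(clusters, [("primary", "dr"), ("dr", "primary")])
print(active_active)
```

Generating the file from a list of flows keeps the two topologies visibly one line apart, which makes it easy to see what changes when a standby cluster is promoted to take writes.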

They also provide some good info on the anatomy of MirrorMaker.
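One part of MirrorMaker 2's anatomy that matters for active/active setups is how it names replicated topics. With the default replication policy, topic `orders` from a cluster aliased `us` appears on the target as `us.orders`, and MM2 skips any topic whose name shows it originated on the target cluster, which prevents replication loops. The Python below is a simplified model of that logic (loosely mirroring `DefaultReplicationPolicy` and the cycle check in `MirrorSourceConnector`), not MM2's actual code; it assumes the default `.` separator and source topic names that contain no dots of their own.

```python
from typing import Optional

SEPARATOR = "."  # MM2's default replication.policy.separator


def format_remote_topic(source_alias: str, topic: str) -> str:
    """Name a topic gets on the target cluster, e.g. ('us', 'orders') -> 'us.orders'."""
    return f"{source_alias}{SEPARATOR}{topic}"


def topic_source(topic: str) -> Optional[str]:
    """Alias of the cluster a remote topic came from, or None for a local topic."""
    parts = topic.split(SEPARATOR)
    return parts[0] if len(parts) >= 2 else None


def upstream_topic(topic: str) -> Optional[str]:
    """Topic name one hop upstream, e.g. 'us.orders' -> 'orders'."""
    source = topic_source(topic)
    return None if source is None else topic[len(source) + len(SEPARATOR):]


def is_cycle(topic: str, target_alias: str) -> bool:
    """True if replicating `topic` to `target_alias` would send data back home."""
    source = topic_source(topic)
    if source is None:
        return False  # a local topic can't create a loop
    if source == target_alias:
        return True  # this data already originated on the target
    upstream = upstream_topic(topic)
    # Walk back through multi-hop names such as 'eu.us.orders'.
    return upstream is not None and is_cycle(upstream, target_alias)


# Active/active between "us" and "eu": `orders` on us is mirrored to eu as
# "us.orders"; the eu->us flow then skips it because it came from us.
mirrored = format_remote_topic("us", "orders")
print(mirrored, is_cycle(mirrored, target_alias="us"))  # us.orders True
```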

They briefly talk about using Flink for replication, although this would only be suitable in a limited number of circumstances.

As the New Relic talk shows, they used MirrorMaker for only a limited part of their migration. Most of the migration was instead done through a routing layer they developed themselves.
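The talk doesn't spell out how that routing layer works, so the following is only a hypothetical Python sketch of the general idea: producers look up which cluster a topic currently lives on, so topics can be cut over from the legacy cluster to MSK one at a time without redeploying clients. The `TopicRouter` class, the cluster addresses and the topic names are all invented for illustration and are not New Relic's design.

```python
from typing import Dict


class TopicRouter:
    """Hypothetical routing table: topic -> bootstrap servers of its current cluster."""

    def __init__(self, default_cluster: str) -> None:
        self.default_cluster = default_cluster  # where not-yet-migrated topics live
        self.overrides: Dict[str, str] = {}     # topics already moved elsewhere

    def cut_over(self, topic: str, cluster: str) -> None:
        """Send all future traffic for `topic` to `cluster`."""
        self.overrides[topic] = cluster

    def cluster_for(self, topic: str) -> str:
        """Bootstrap servers a producer should use for `topic` right now."""
        return self.overrides.get(topic, self.default_cluster)


router = TopicRouter("legacy-kafka:9092")
router.cut_over("metrics", "msk-metrics:9094")
print(router.cluster_for("metrics"))  # msk-metrics:9094
print(router.cluster_for("logs"))     # legacy-kafka:9092
```

Keeping the routing decision in one place is what lets a migration proceed topic by topic; a real layer would also have to deal with ordering and in-flight data at the moment of cut-over.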

Finally, the talk provides some good information and resources on how to size your MSK environment when moving from a self-managed cluster.
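As a rough illustration of the arithmetic involved, two back-of-the-envelope numbers are a common starting point: total disk needed (ingress × retention × replication factor) and the write throughput each broker must absorb (ingress × replication factor ÷ number of brokers). The Python sketch below computes both. The input figures are made-up examples, and the per-broker throughput budget to compare against should come from AWS's own sizing guidance and your own load testing.

```python
import math


def storage_per_broker_gb(ingress_mb_s: float, retention_hours: float,
                          replication_factor: int, brokers: int) -> float:
    """Disk each broker needs (decimal GB), assuming data spreads evenly."""
    total_mb = ingress_mb_s * retention_hours * 3600 * replication_factor
    return total_mb / 1000 / brokers


def brokers_for_throughput(ingress_mb_s: float, replication_factor: int,
                           per_broker_write_mb_s: float) -> int:
    """Fewest brokers that keep replicated writes under a per-broker budget."""
    return math.ceil(ingress_mb_s * replication_factor / per_broker_write_mb_s)


# Made-up example: 100 MB/s in, 24 h retention, replication factor 3.
print(brokers_for_throughput(100, 3, per_broker_write_mb_s=50))  # 6
print(storage_per_broker_gb(100, 24, 3, brokers=6))              # 4320.0
```

In practice you would add headroom on top of both numbers for traffic spikes, partition rebalancing and broker failures.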

In their own talk, Confluent presented Cluster Linking as a way to replicate data, although it's only available to commercial Confluent customers.

How Goldman Sachs uses an Amazon MSK backbone for its Transaction Banking Platform

Security at scale: How Goldman Sachs manages network and access control

Once you're on MSK, or running Kafka in AWS at all, you need to secure it.

The team at Goldman Sachs provide great information on how they're building a transaction banking-as-a-service platform on MSK and how they've addressed the security challenges.

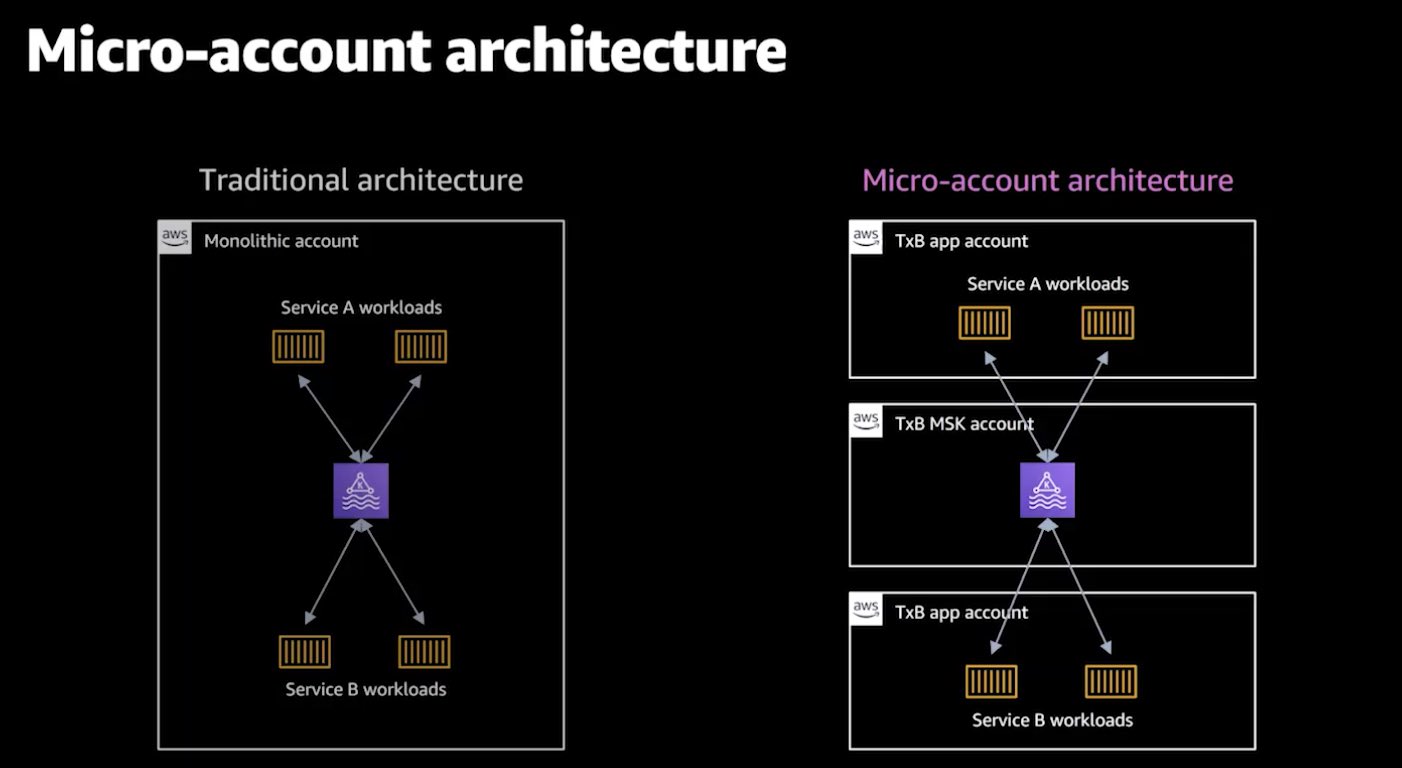

One example of an architectural pattern they've adopted is separating their MSK clusters and their applications into different micro AWS accounts.

Then, instead of connecting the different environments with VPC peering and CIDR ranges, they found it more secure and operationally simpler to use PrivateLink, with a Network Load Balancer in front of each MSK broker. Incidentally, to learn more about best practices for PrivateLink across micro accounts, you can also check out the talk "VPC endpoints & PrivateLink: Optimize for security, cost & operations".

To address the problem of broker DNS names and domains differing from one VPC to another, they created CNAME records in Route 53 in the client VPC to trick the clients into communicating with the assigned brokers and partitions.
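The DNS trick is needed because Kafka clients first fetch cluster metadata and then connect directly to whichever broker leads each partition, using the broker hostnames from that metadata, so every broker name must resolve to a reachable endpoint in the client VPC. Here is a Python sketch that builds the request body for Route 53's `ChangeResourceRecordSets` API (the `ChangeBatch` shape that boto3's `change_resource_record_sets` accepts): one CNAME per broker hostname, pointing at the VPC endpoint that fronts that broker. All hostnames and endpoint names are hypothetical, and the sketch assumes a private hosted zone for the brokers' domain is already associated with the client VPC.

```python
from typing import Dict, List


def broker_cname_batch(broker_to_endpoint: Dict[str, str], ttl: int = 300) -> dict:
    """Build a Route 53 ChangeBatch mapping each broker name to its local endpoint."""
    changes: List[dict] = []
    for broker_host, endpoint_dns in sorted(broker_to_endpoint.items()):
        changes.append({
            "Action": "UPSERT",  # create the record, or update it if it exists
            "ResourceRecordSet": {
                "Name": broker_host,
                "Type": "CNAME",
                "TTL": ttl,
                "ResourceRecords": [{"Value": endpoint_dns}],
            },
        })
    return {"Comment": "Kafka broker names -> PrivateLink endpoints", "Changes": changes}


# Hypothetical broker names and endpoint DNS names.
batch = broker_cname_batch({
    "b-1.example-cluster.kafka.internal": "vpce-aaa.example.vpce.amazonaws.com",
    "b-2.example-cluster.kafka.internal": "vpce-bbb.example.vpce.amazonaws.com",
})
# With boto3 this would be applied roughly as:
# boto3.client("route53").change_resource_record_sets(
#     HostedZoneId="<private zone id>", ChangeBatch=batch)
print(len(batch["Changes"]))  # 2
```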

The many PrivateLink VPC endpoints spread across hundreds of AWS accounts are managed by Terraform, which reads metadata for each microservice from an S3 bucket.
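The talk uses Terraform for this. As a Python stand-in for the same idea, the sketch below expands per-microservice metadata into the list of endpoints to create, one per broker for each cluster a service uses. The metadata schema (`account_id`, `vpc_id`, `clusters`), the `BROKER_SERVICES` table and the endpoint service names are hypothetical; the actual metadata format isn't described in the talk summary.

```python
import json
from typing import Dict, List

# Hypothetical: PrivateLink endpoint service name for each broker of each cluster.
BROKER_SERVICES: Dict[str, List[str]] = {
    "payments": ["svc-payments-b1", "svc-payments-b2", "svc-payments-b3"],
}


def endpoints_to_create(metadata_json: str) -> List[dict]:
    """Expand per-microservice metadata into one endpoint spec per broker needed."""
    specs = []
    for service in json.loads(metadata_json):
        for cluster in service["clusters"]:
            # Clients must reach every broker, so each one needs its own endpoint.
            for endpoint_service in BROKER_SERVICES[cluster]:
                specs.append({
                    "account_id": service["account_id"],
                    "vpc_id": service["vpc_id"],
                    "service_name": endpoint_service,
                })
    return specs


# In the setup described, this document would be read from an S3 bucket.
metadata = json.dumps([
    {"name": "ledger", "account_id": "111111111111", "vpc_id": "vpc-aaa", "clusters": ["payments"]},
    {"name": "reporting", "account_id": "222222222222", "vpc_id": "vpc-bbb", "clusters": ["payments"]},
])
specs = endpoints_to_create(metadata)
print(len(specs))  # 6
```

The fan-out is the point: with hundreds of accounts each needing one endpoint per broker, the count grows quickly, which is why generating it from metadata beats managing it by hand.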

They also go through how they've set up mutual TLS with certificates that are valid for only a few days, using a system built on AWS Lambda that provisions keys and stores them in KMS, where Kafka clients can easily access them.
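For a sense of what the client side can look like, the Python sketch below pairs standard Kafka client mTLS settings (`security.protocol=SSL` plus keystore and truststore locations) with a simple renewal check suited to certificates that live only a few days. The file paths, the `needs_renewal` helper and its rule of renewing once two-thirds of the validity period has elapsed are assumptions for illustration, not Goldman Sachs's system.

```python
from datetime import datetime, timedelta, timezone

# Standard Kafka client settings for mutual TLS; the paths are placeholders
# and the keystore, key and truststore passwords are omitted here.
MTLS_CLIENT_CONFIG = {
    "security.protocol": "SSL",
    "ssl.keystore.location": "/etc/kafka/client.keystore.jks",      # client cert + key
    "ssl.truststore.location": "/etc/kafka/client.truststore.jks",  # CA for brokers
}


def needs_renewal(not_before: datetime, not_after: datetime, now: datetime,
                  renew_fraction: float = 2 / 3) -> bool:
    """True once `renew_fraction` of the certificate's lifetime has elapsed."""
    lifetime = not_after - not_before
    return now >= not_before + lifetime * renew_fraction


# A 3-day certificate becomes due for renewal after 2 days.
issued = datetime(2020, 12, 1, tzinfo=timezone.utc)
expires = issued + timedelta(days=3)
print(needs_renewal(issued, expires, issued + timedelta(days=1)))           # False
print(needs_renewal(issued, expires, issued + timedelta(days=2, hours=6)))  # True
```

Renewing well before expiry leaves room for a failed provisioning run to be retried before clients lose access.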

Not a talk, but an important announcement from the AWS team: they now provide their own schema registry, AWS Glue Schema Registry. It's a new serverless service that manages your Avro schemas across your applications and integrates with AWS services including MSK, Kinesis, Flink and Lambda.
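A schema registry enforces compatibility rules as schemas evolve. As a rough sketch of what backward compatibility means for Avro records (a reader using the new schema must be able to read data written with the old one), this Python function applies two simplified rules: any field added in the new schema needs a default, and fields present in both must keep the same type. Real Avro schema resolution is richer (type promotions, unions, aliases), and the `is_backward_compatible` helper and `Payment` schemas are illustrative, not the registry's API.

```python
import json


def is_backward_compatible(old_schema: str, new_schema: str) -> bool:
    """Simplified check: can a reader using `new_schema` read `old_schema` data?"""
    old_fields = {f["name"]: f for f in json.loads(old_schema)["fields"]}
    new_fields = {f["name"]: f for f in json.loads(new_schema)["fields"]}
    for name, field in new_fields.items():
        if name not in old_fields:
            # Old records lack this field, so the reader must fall back to a default.
            if "default" not in field:
                return False
        elif field["type"] != old_fields[name]["type"]:
            return False  # type changes (even legal promotions) are rejected here
    # Fields dropped from the new schema are simply ignored by the reader.
    return True


base_fields = [{"name": "id", "type": "string"}, {"name": "amount", "type": "double"}]
v1 = json.dumps({"type": "record", "name": "Payment", "fields": base_fields})
# v2 adds a field with a default, so old records can still be read.
v2 = json.dumps({"type": "record", "name": "Payment", "fields": base_fields + [
    {"name": "currency", "type": "string", "default": "USD"}]})
# v3 adds the same field without a default, so old records can't be read.
v3 = json.dumps({"type": "record", "name": "Payment", "fields": base_fields + [
    {"name": "currency", "type": "string"}]})
print(is_backward_compatible(v1, v2), is_backward_compatible(v1, v3))  # True False
```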

That's one less headache: one less distributed technology to manage yourself.

Simplify your life and data operations using Lenses.io to operate your AWS MSK environments. Explore more at lenses.io/cloud/aws-msk.