Adamos Loizou

Lenses 5.1 - A first-class ticket to going event-driven on AWS

Improved support for building event-driven apps in AWS with the industry’s only SQL engine for data observability & processing backed by AWS Glue Schema Registry

Hello again.

We strive to improve the productivity of developers building event-driven applications on whichever technologies best fit their organization.

AWS continues to be a real powerhouse for our customers, not just for running their workloads but for supporting them with native services: Amazon MSK, MSK Connect and, increasingly, Glue Schema Registry.

Together, these services offer a strong alternative to Confluent and its Kafka infrastructure offerings.

That’s why we’ve focused this release on improving the ride for developers working with native AWS services.

Let’s take a look…

Confluent have done a great job at promoting their schema registry to the Kafka community.

Others have followed with their own schema registries that expose Confluent-compatible APIs, including Karapace from Aiven and Apicurio from Red Hat.

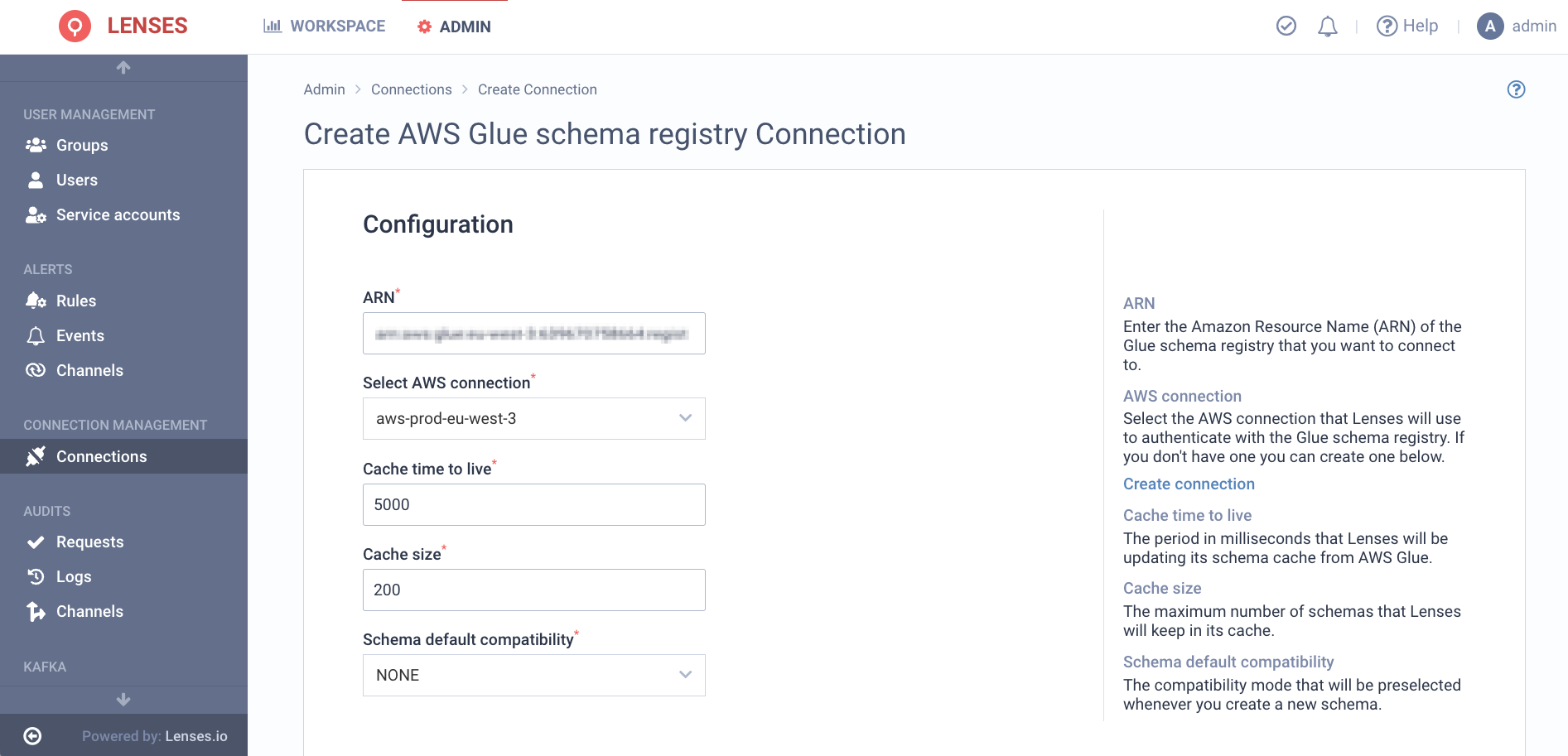

AWS Glue Schema Registry is different. Released a few years ago, it has its own APIs, designed to integrate more closely with other AWS services.

Supporting it required significant engineering on our end.
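For a flavour of what differs, one concrete example is how each registry frames a serialized record. The sketch below is not Lenses' implementation; it assumes the Confluent wire format (a zero "magic" byte followed by a 4-byte big-endian schema ID) and the header written by AWS's Glue Schema Registry serializer (a version byte of 3, a compression byte where 0 means none and 5 means zlib, then a 16-byte schema version UUID), as we understand them. The class and function names are hypothetical.

```python
import struct
import uuid
from dataclasses import dataclass
from typing import Union

# Header constants, assumed from the two registries' serializers.
CONFLUENT_MAGIC = 0x00
GLUE_HEADER_VERSION = 0x03
GLUE_COMPRESSION_NONE = 0x00
GLUE_COMPRESSION_ZLIB = 0x05


@dataclass
class ConfluentHeader:
    schema_id: int   # integer ID assigned by a Confluent-compatible registry
    payload: bytes   # serialized (e.g. Avro) body


@dataclass
class GlueHeader:
    schema_version_id: uuid.UUID  # Glue identifies schema versions by UUID
    compressed: bool              # True if the body is zlib-compressed
    payload: bytes


def parse_header(record: bytes) -> Union[ConfluentHeader, GlueHeader]:
    """Hypothetical helper: work out which registry framed a record value."""
    if len(record) >= 5 and record[0] == CONFLUENT_MAGIC:
        # Bytes 1-4: big-endian schema ID.
        (schema_id,) = struct.unpack(">I", record[1:5])
        return ConfluentHeader(schema_id, record[5:])
    if len(record) >= 18 and record[0] == GLUE_HEADER_VERSION:
        compression = record[1]
        if compression not in (GLUE_COMPRESSION_NONE, GLUE_COMPRESSION_ZLIB):
            raise ValueError(f"unknown Glue compression byte: {compression}")
        # Bytes 2-17: schema version UUID.
        version_id = uuid.UUID(bytes=record[2:18])
        return GlueHeader(version_id, compression == GLUE_COMPRESSION_ZLIB, record[18:])
    raise ValueError("record does not start with a recognised registry header")
```

A Confluent-framed record resolves its schema by integer ID, while a Glue-framed one needs a UUID lookup against a different API, which is why tooling built for one registry cannot simply be pointed at the other.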

We’re delighted to officially support Glue Schema Registry for Avro, with Protobuf to be included in Lenses 5.2.

This brings the full power of SQL for Kafka to teams adopting Glue schemas, making Lenses the only solution in the industry to do so.

The same SQL engine supports two use cases:

SQL for Data Observability: invaluable for troubleshooting a microservice or for quick data analysis.

SQL for Stream Processing: join, filter, aggregate, reshape or otherwise transform streaming data without writing a line of code, in a fully-wrapped developer experience.

Check out Andrew’s blog on managing schemas in Glue.

Lenses has been and remains the biggest contributor to open-source Kafka connectors.

We’ve worked hard with enterprise customers to make our S3 Source and Sink Connectors the leading S3 connectors for Apache Kafka on the market.

We’ve seen data flows between Kafka and S3 become mission-critical, including for backing up topics to S3 and restoring them from it. And many organizations do not want to be forced to adopt Confluent’s connector.

Whilst we remain committed to keeping the connectors open-source (Apache 2), the importance of these flows means that organizations require an extra level of assurance, participation in the roadmap, and SLAs for support and security incident response.

We are therefore increasing our investment and offering enterprise support for the S3 connectors to our customers. You’ll also see further improvements to the S3 experience in later 5.x releases.

Lenses now offers the only alternative to Confluent for mission-critical flows to S3.

Our customers look to us to help them meet the highest levels of enterprise security.

The Kafka Connect framework, whilst widely popular, can easily lead to poor security practices if teams are not careful, including secrets stored in clear text in connector configuration.

Lenses’ granular RBAC and auditing for connectors give customers the confidence to offer developers self-service access to deploy connectors.

But this didn’t address the problems with secrets.

Like our S3 connectors, our open-source Secret Provider plugin has been wildly popular for keeping secrets in a secret vault rather than in connector configuration. It also handles secret rotation, ensuring the connectors keep running when secrets change.
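The idea rests on Kafka Connect's config provider mechanism (KIP-297), which, as we understand it, is what plugins like the Secret Provider hook into: the connector config holds a placeholder of the form `${provider:path:key}` instead of the secret, and the value is resolved at runtime. Below is a minimal sketch of that substitution step only. An in-memory dictionary stands in for the vault, and the provider name `vault`, the path `secret/db` and the `resolve_config` helper are all hypothetical, not the plugin's actual code.

```python
import re
from typing import Dict, Mapping, Optional, Tuple

# Placeholder syntax ${provider:[path:]key}, modelled on Kafka's config transformer.
PLACEHOLDER = re.compile(r"\$\{([^}]*?):(?:([^}]*?):)?([^}]*?)\}")

# Hypothetical stand-in for a secret store: (provider, path) -> {key: value}.
SecretStore = Mapping[Tuple[str, Optional[str]], Mapping[str, str]]


def resolve_config(config: Mapping[str, str], store: SecretStore) -> Dict[str, str]:
    """Return a copy of a connector config with secret placeholders substituted."""

    def substitute(match: "re.Match[str]") -> str:
        provider, path, key = match.group(1), match.group(2), match.group(3)
        secrets = store.get((provider, path))
        if secrets is None or key not in secrets:
            # In this sketch, unknown secrets leave the placeholder untouched.
            return match.group(0)
        return secrets[key]

    # The original config is never modified, so no secret is written back to it.
    return {name: PLACEHOLDER.sub(substitute, value) for name, value in config.items()}
```

Because only the placeholder is stored in the connector config, rotating a secret in the vault changes what gets resolved without anyone editing or redeploying the connector definition.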

It remains Apache 2 licensed, and here too we are now offering enterprise support to our customers and increasing our R&D investment.

This is now fully supported for secret managers including HashiCorp Vault and AWS Secrets Manager.

For full details and a how-to guide to the Secret Provider, see David's blog.

We never cease to be amazed by the speed at which our customers are increasing their adoption of event-driven apps on Kafka.

They are the ones who push us to find ways to make their working lives easier and more productive.

By popular demand from our customers, you’ll notice a number of UX improvements, including search bars on all of our tables and changes to how we render event metadata, which is valuable when viewing particularly large events.

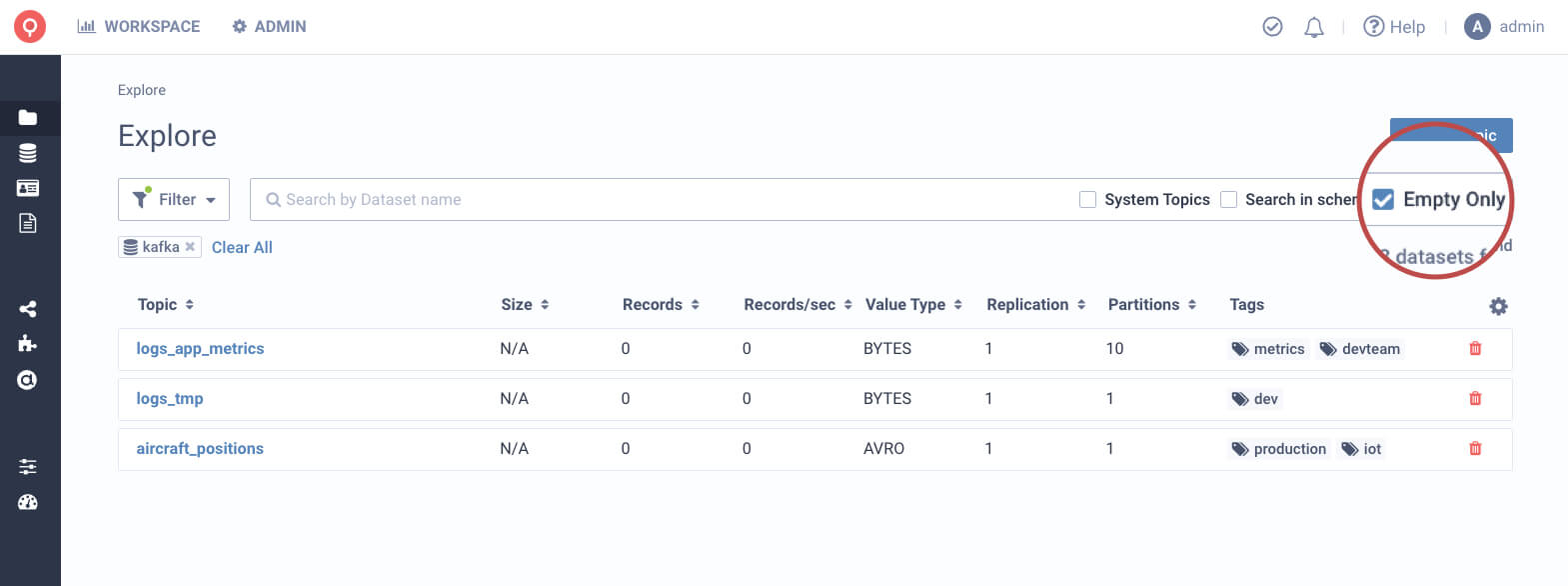

To help keep your cluster clean, Lenses has extended its datasets API to filter for empty topics, so you can quickly identify and remove topics cluttering up your brokers. This is supported in the UI and, of course, in the CLI for automation.

For the command-line savvy among us: the Lenses CLI has received shiny new features for working with Lenses.

Kafka topics can now be listed and filtered by compaction status and emptiness, using the dataset command.
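What makes a topic "empty"? One common definition, and an assumption here rather than a description of how Lenses decides, is that in every partition the log start offset equals the log end offset, so no retained records remain. The sketch below applies that rule, plus a compaction check on the topic's `cleanup.policy`, to hypothetical in-memory metadata; the types and the `filter_topics` function are illustrative only, not the CLI's or API's implementation.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Sequence


@dataclass(frozen=True)
class PartitionOffsets:
    start: int  # log start offset: earliest offset still retained
    end: int    # log end offset: offset the next record would receive


@dataclass(frozen=True)
class TopicInfo:
    name: str
    cleanup_policy: str  # value of cleanup.policy, e.g. "delete" or "compact,delete"
    partitions: Sequence[PartitionOffsets]

    @property
    def is_empty(self) -> bool:
        # Empty when no partition retains any offsets between start and end.
        return all(p.start == p.end for p in self.partitions)

    @property
    def is_compacted(self) -> bool:
        return "compact" in [part.strip() for part in self.cleanup_policy.split(",")]


def filter_topics(
    topics: Iterable[TopicInfo],
    empty: Optional[bool] = None,
    compacted: Optional[bool] = None,
) -> List[str]:
    """Hypothetical filter; None means "don't filter on this attribute"."""
    return [
        t.name
        for t in topics
        if (empty is None or t.is_empty == empty)
        and (compacted is None or t.is_compacted == compacted)
    ]
```

Offsets are a cheap proxy rather than an exact count: transaction markers, for instance, occupy offsets without being readable records, so a topic this rule reports as non-empty may still yield nothing to a consumer.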

Connections such as Kafka and Schema Registry can now be created, tested and updated through the CLI. There is no need to craft JSON blobs anymore: every connection property has a corresponding flag built into the CLI.

The connections are found under the connections subcommand.

New knobs in the Helm chart give you more control over Lenses deployments in Kubernetes.

You can now configure the containers’ security context as well as the DNS policy and DNS config, and add annotations to the deployment’s pods.

We're pleased to announce that our Kafka Docker Box, a widely used and complimentary tool for developers creating event-driven applications, is now compatible with ARM64 processors.

This local Kafka environment is equipped with all of Lenses' features at no cost, giving engineers an enhanced development experience and increased productivity.

Previously, because of the applications it bundles, the Box could not run on ARM processors, not even under x86 emulation. ARM processors are becoming increasingly popular across platforms, from AWS Graviton to MacBooks to Raspberry Pi.

With Lenses 5.1 we add native support for ARM64 processors to our Box.

You can check the README here. Full details on accessing the release across different deployments can be found in our docs. Any questions? Ask Marios on our new community answers site or join our Slack Community and ask us directly.

But the journey doesn’t stop here, so buckle in: the next stop is 5.2, and it’s coming fast…

In the meantime, we hope you enjoy the release.

Happy streaming.