What is Apache Kafka?

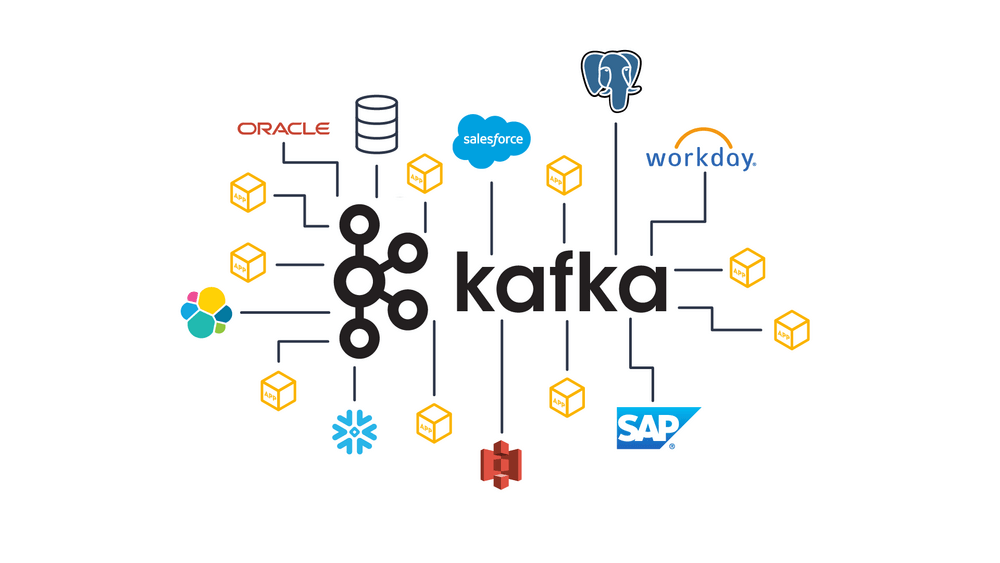

Apache Kafka is a distributed messaging system for stream processing, used by thousands of organizations to power real-time applications.

Let's look at the benefits of Apache Kafka, how it differs from other related data technologies, how it works and how it's used.

Why use Apache Kafka?

Kafka helps modernize business processes by providing the infrastructure to deliver real-time data and timely experiences to consumers. Use cases range from reducing mainframe costs and order fulfilment to fraud detection and risk calculation.

Data integration

- Reducing mainframe costs

- Integrating market data

- Security analytics pipelines

AI & data products

- Risk calculation engine

- Payment fraud detection

- Churn prediction

Software applications

- Mobile banking

- Financial roboadvisor chatbot

- Digital wallet

Apache Kafka vs. RabbitMQ

| Characteristic | Apache Kafka | RabbitMQ |
|---|---|---|
| Architecture | Kafka uses a hybrid of the message queue and publish-subscribe models. | RabbitMQ uses a message queue model. |
| Scalability | Kafka distributes the partitions of a topic across different servers. | Processing scales out by adding competing consumers to a queue. |
| Message retention | Policy-based, with the retention window configurable by the user; for example, messages can be stored for two days. | Acknowledgement-based: messages are deleted as they are consumed. |
| Multiple consumers | Multiple consumers can subscribe to the same topic, because Kafka allows the same message to be replayed within the retention window. | Messages are deleted as they are consumed, so multiple consumers on the same queue can't receive the same message. |
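
To make the retention and multiple-consumer rows more concrete, here is a minimal in-memory sketch in Python. It is a toy model only, not the Kafka or RabbitMQ client API: `RetainedLog`, `LogConsumer`, `WorkQueue` and `demo` are hypothetical names invented for illustration, and timestamps are passed in by hand. The two-day window mirrors the example in the table; in Kafka itself the window is typically set per topic through the `retention.ms` configuration (two days is 172,800,000 ms).

```python
from collections import deque
from dataclasses import dataclass, field

TWO_DAYS_MS = 2 * 24 * 60 * 60 * 1000  # 172_800_000 ms


@dataclass
class RetainedLog:
    """Toy stand-in for one Kafka-style partition: append-only, time-based retention."""
    retention_ms: int
    records: list = field(default_factory=list)  # (offset, timestamp_ms, value)
    next_offset: int = 0

    def append(self, value, now_ms):
        self.records.append((self.next_offset, now_ms, value))
        self.next_offset += 1

    def expire(self, now_ms):
        # Policy-based retention: age decides deletion, not whether anyone read it.
        self.records = [r for r in self.records if now_ms - r[1] <= self.retention_ms]

    def read_from(self, offset):
        return [(o, v) for (o, _, v) in self.records if o >= offset]


class LogConsumer:
    """Each consumer tracks its own offset, so reads do not affect other consumers."""

    def __init__(self, log):
        self.log = log
        self.offset = 0

    def poll(self):
        batch = self.log.read_from(self.offset)
        if batch:
            self.offset = batch[-1][0] + 1
        return [value for _, value in batch]


class WorkQueue:
    """Toy stand-in for a single queue with competing consumers."""

    def __init__(self):
        self._items = deque()

    def publish(self, value):
        self._items.append(value)

    def consume(self):
        # Acknowledgement-based: a consumed message is gone for everyone else.
        return self._items.popleft() if self._items else None


def demo():
    log = RetainedLog(retention_ms=TWO_DAYS_MS)
    for timestamp_ms, value in enumerate(["a", "b", "c"]):
        log.append(value, now_ms=timestamp_ms)

    billing, analytics = LogConsumer(log), LogConsumer(log)
    result = {"billing": billing.poll(), "analytics": analytics.poll()}

    # Just over two days after "a" was written, only "a" has aged out.
    log.expire(now_ms=TWO_DAYS_MS + 1)
    result["late_joiner"] = LogConsumer(log).poll()

    queue = WorkQueue()
    for value in ["a", "b", "c"]:
        queue.publish(value)
    result["worker_1"] = queue.consume()
    result["worker_2"] = queue.consume()
    return result


if __name__ == "__main__":
    print(demo())
```

In this sketch both log consumers read every message independently, and a consumer that joins later still sees whatever remains inside the retention window, whereas the two queue workers split the messages between them.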

Benefits of Apache Kafka for organizations

Apache Kafka is a powerful streaming technology when harnessed in the right way, but it also presents a steep learning curve and productivity challenges for engineering teams once they start to drive adoption beyond a proof of concept (POC).

This means developers need well-governed self-service data access, observability, security, and a way to build data pipelines (stream processing).