Andrew Stevenson

Top Kafka Connect Security & Compliance Controls

An A to Z of data security compliance for Kafka Connect, from ACL management to RBAC.


If you’re moving data in and out of Kafka, chances are you’re using Apache Kafka Connect. The strength of the Kafka community means we have a large catalog of available connectors for hundreds of different technologies, helping to accelerate the integration of Kafka with third-party systems.

As we often see in the world of open source, Kafka’s wide adoption across the community doesn’t make the technology easier to manage. In fact, it would be fair to say there are a number of challenges and design faults that we need to live with for the time being.

Probably the biggest challenge here is to put good governance measures in place for Kafka Connect, which does two things:

Provides healthy & productive data operations for a faster time-to-integrate

Helps you meet IT and data compliance requirements, so you can pass audits and get your projects into production

The good news is you can work around many of these challenges and provide Kafka as a Service within your organization using DataOps practices. Here we break down some of the biggest areas of compliance you may need to address, including:

Confidentiality

Availability

Authorization

Authentication

Isolation & multi-tenancy

Audit Reporting

Real-time monitoring

Let’s have a look.

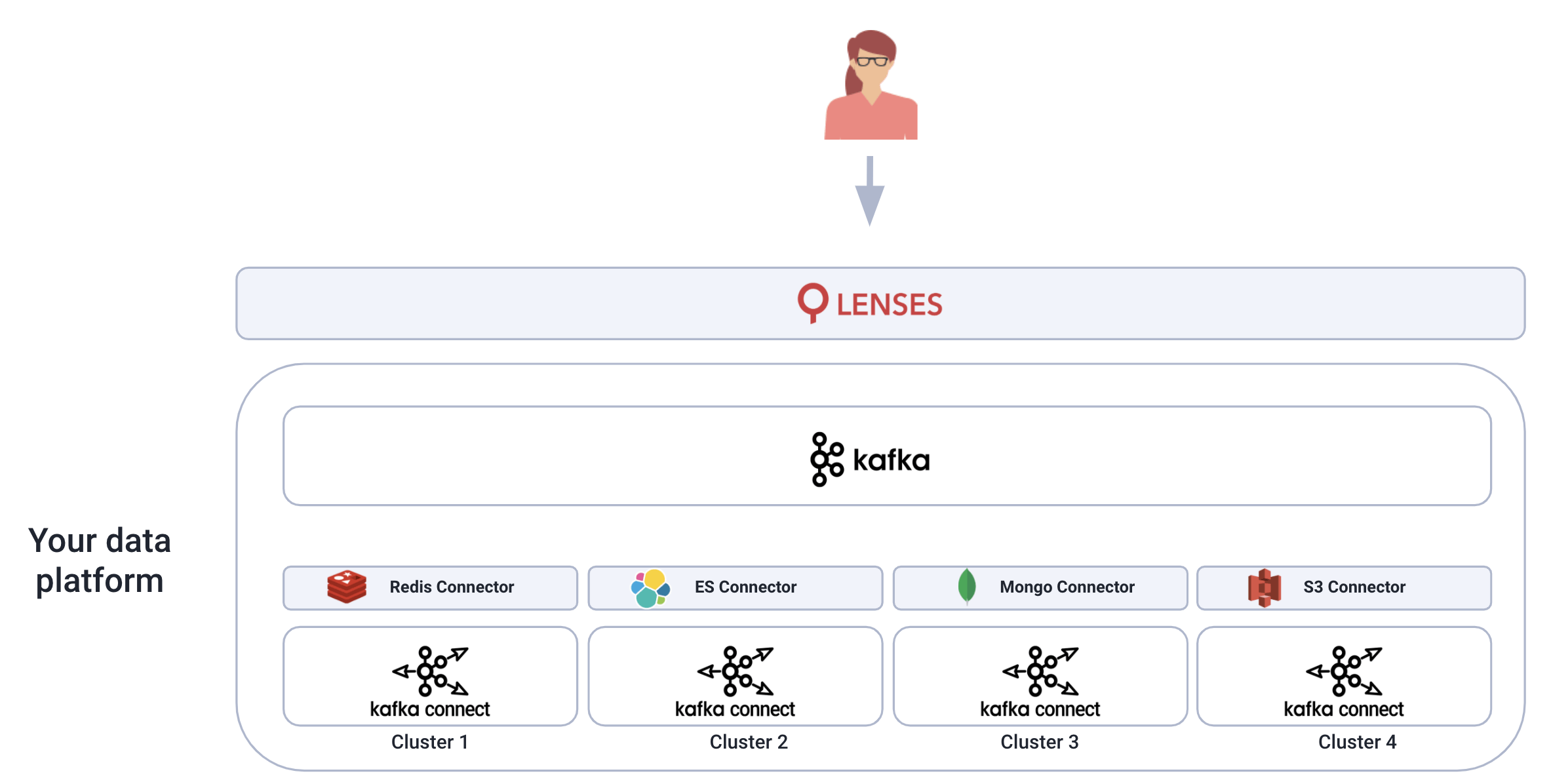

Applying good governance to Kafka Connect will require running connectors and pipelines across isolated clusters. This protects both the availability and the confidentiality of data.

Whilst deploying one connector, you risk others within the cluster restarting and triggering a rebalance, temporarily interrupting the service. This may be particularly painful whilst collecting data from a source with no guaranteed delivery (such as a UDP port).

Certain types of connectors may also consume heavy disk/memory or compute resources. Here we run the risk of impacting the performance or availability of other connectors housed on the cluster.

Of course your security policies may also dictate the isolation of connectors across different clusters. So a best practice would be to run them in complete isolation.

ACL policies allow you to authorize principals (in the form of clients) to access Kafka resources, including topics. They're an absolute must.

The question is how can ACLs themselves be managed and who should have the ability to operate them?

Here there is a significant gap in native Kafka.

If you're not already using something like Ranger to manage your ACLs, they can be operated through Lenses and secured with RBAC and auditing.

Service disruption can occur through a malicious or hapless user action. A user could interrupt a flow by compromising one connector, or leak data by deploying another connector.

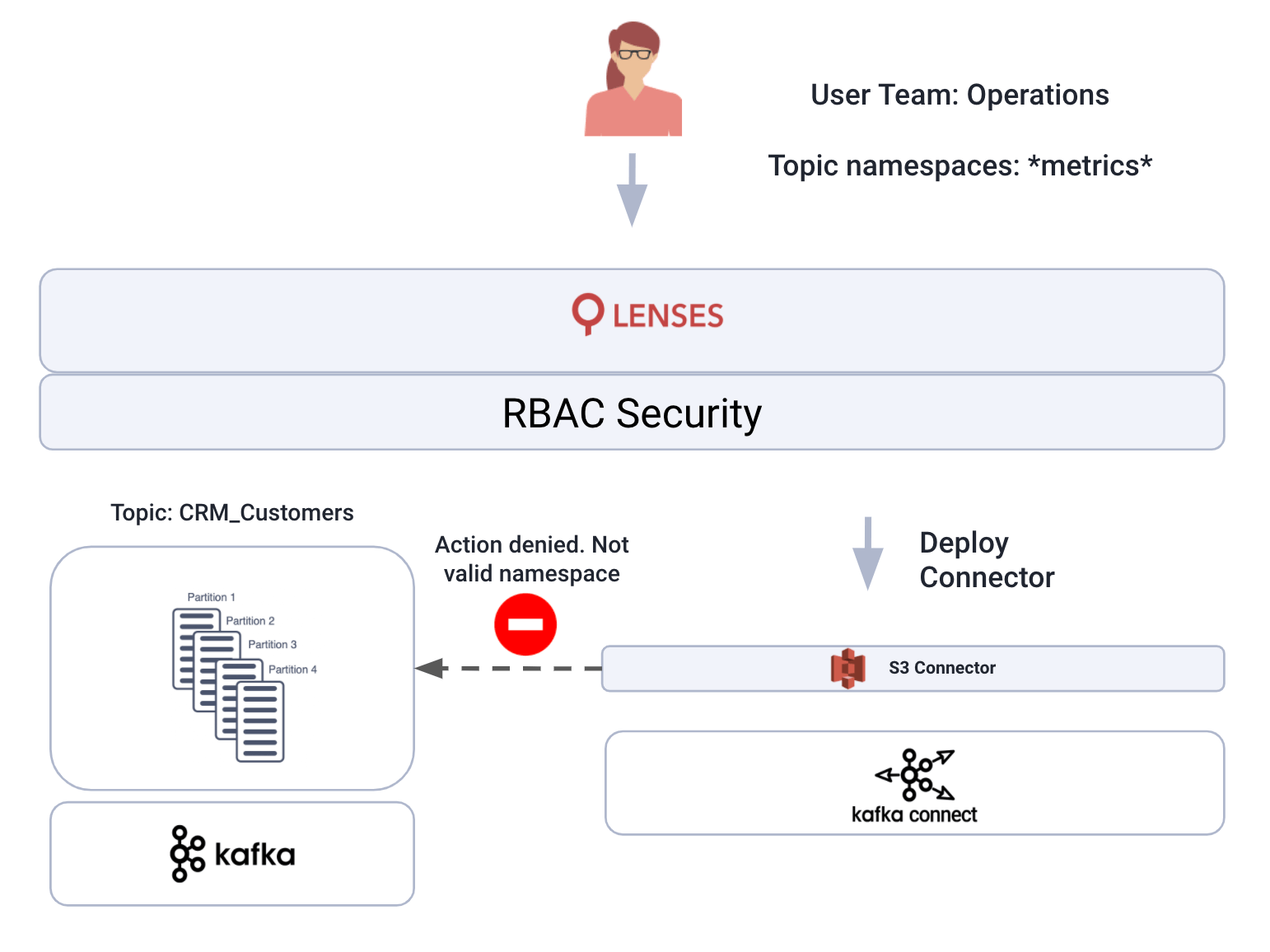

Privileges to operate connectors should be assigned, not assumed. Kafka Connect doesn’t provide any role-based security or auditing.

You could control what connectors are deployed as part of your CI/CD workflows, but this isn’t always the best way of managing connectors since users require constant feedback loops and error handling when deploying them.

At Lenses we’ve built a security model over Kafka Connect. It’s the same model that provides data access to relevant users.

At its most basic, you can assign permissions to operate Kafka Connect.

Then, based on topic namespaces users may be entitled to perform certain actions on a topic level. The available operations extend beyond data access and assign the ability to manage connectors with the topic as the source or sink.

For example, if you are assigned only to the *metrics* namespace, you can only view, create and operate connectors that source or sink on these topics.

If you were to try creating a new connector consuming from crm_customers, the Lenses security model would identify that you are not entitled to that topic and prevent the connector from being deployed.
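To make the idea concrete, here is a minimal sketch of a namespace check on a connector configuration before it is deployed. It is not the Lenses implementation: the function names and the prefix-based notion of a namespace are assumptions made for illustration. The only Kafka Connect detail relied on is that sink connectors list their input topics in the `topics` property as a comma-separated string.

```python
from typing import Iterable, List


def connector_topics(config: dict) -> List[str]:
    """Return the topics named in a connector config.

    Sink connectors use the standard comma-separated `topics` property.
    Source connectors vary, so `topic` is checked here only as a common
    convention (an assumption, not a Kafka Connect rule).
    """
    raw = config.get("topics") or config.get("topic") or ""
    return [t.strip() for t in raw.split(",") if t.strip()]


def denied_topics(config: dict, allowed_prefixes: Iterable[str]) -> List[str]:
    """Return the topics in `config` that fall outside the user's namespaces."""
    prefixes = tuple(allowed_prefixes)
    return [t for t in connector_topics(config) if not t.startswith(prefixes)]


# Hypothetical user entitled only to the "metrics" namespace.
metrics_only = ["metrics"]

ok = {"name": "metrics-to-es", "topics": "metrics_cpu, metrics_disk"}
bad = {"name": "crm-to-es", "topics": "crm_customers"}

print(denied_topics(ok, metrics_only))   # []
print(denied_topics(bad, metrics_only))  # ['crm_customers']
```

A deployment gate would reject any configuration for which `denied_topics` returns a non-empty list, and report the offending topics back to the user.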

Good management of identities is security 101.

This ensures strong use (and rotation) of passwords, two-factor authentication and provides security teams with a centralized real-time monitoring of authentication event logs.

Chances are your organization has standardized on an Access Management & SSO solution and requires all business applications to integrate with it.

For Lenses, the same RBAC model mentioned above can authenticate users through your SSO provider including support for Azure, OneLogin, Okta and Keycloak.

Access audits are logged and can be integrated with your real-time security investigation & user behavior monitoring tools such as Splunk.

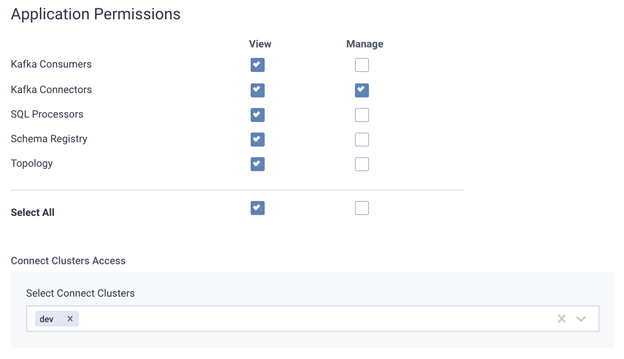

If you’re managing multiple Connect clusters, there will be times when you should assign privileges to the clusters, not just the connectors or Kafka resources they access.

Let's say you can deploy the Elasticsearch connector for topics dev* but only on certain dev* clusters (as opposed to in production).
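A rule like this can be pictured as a grant over three dimensions: connector type, topic and Connect cluster. The sketch below is purely illustrative; the `Grant` class, the glob patterns and the cluster names are hypothetical and do not reflect how Lenses stores or evaluates permissions.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase
from typing import Iterable


@dataclass(frozen=True)
class Grant:
    """One permission: which connector, on which topics, on which clusters."""
    connector_pattern: str
    topic_pattern: str
    cluster_pattern: str


def may_deploy(grants: Iterable[Grant], connector: str, topic: str, cluster: str) -> bool:
    """True if any single grant covers the connector, the topic and the cluster."""
    return any(
        fnmatchcase(connector, g.connector_pattern)
        and fnmatchcase(topic, g.topic_pattern)
        and fnmatchcase(cluster, g.cluster_pattern)
        for g in grants
    )


# Hypothetical grant: Elasticsearch connectors, dev* topics, dev* clusters only.
grants = [Grant("*Elasticsearch*", "dev*", "dev*")]

print(may_deploy(grants, "ElasticsearchSinkConnector", "dev_orders", "dev-eu-1"))   # True
print(may_deploy(grants, "ElasticsearchSinkConnector", "dev_orders", "prod-eu-1"))  # False
```

Requiring all three patterns to match within the same grant is what stops a permission on dev topics from leaking into a production cluster.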

Neither Kafka Connect nor open source tooling supports managing privileges across different Kafka Connect clusters.

With Lenses, we’ve recently extended the security model and introduced Kafka Connect namespaces. In the same framework that manages access to data and connectors now comes the ability to define how they are accessed, and what or who accesses them.

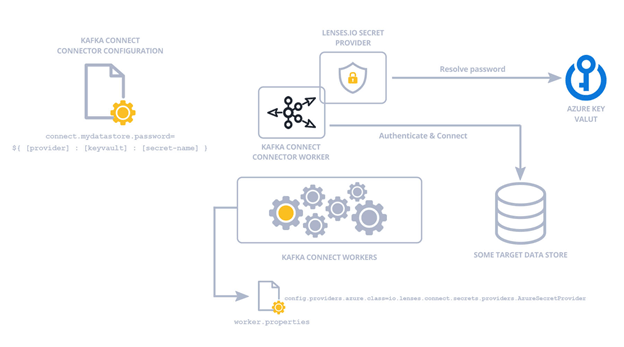

Avoiding secrets being exposed in code and configuration can be especially tricky. Unless you use a Secret Provider plugin, Kafka Connect will expose sensitive configuration values via its APIs.

Lenses allows you to delegate lookup of secrets, at runtime, to external secret providers such as Hashicorp Vault, AWS Secrets Manager and Azure KeyVault using a plugin. These are open source, Apache 2.0 licensed. Lenses can also act as a secret provider to reduce your tech stack further.
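Kafka Connect resolves externalized secrets through placeholders of the form `${provider:path:key}` in the connector configuration, once a provider is registered on the worker (for the built-in file provider: `config.providers=file` and `config.providers.file.class=org.apache.kafka.common.config.provider.FileConfigProvider`). The sketch below shows a simple pre-deployment check that flags sensitive-looking properties still holding a literal value. The key-name heuristic, the file path and the connector properties are assumptions chosen for illustration.

```python
import re
from typing import List

# Kafka's externalized-config placeholder: ${provider:path:key} or ${provider:key}
PLACEHOLDER = re.compile(r"^\$\{[^:}]+:[^}]+\}$")

# Heuristic for property names that usually carry secrets (an assumption).
SENSITIVE = re.compile(r"password|secret|token|api[._-]?key", re.IGNORECASE)


def inline_secrets(config: dict) -> List[str]:
    """Return sensitive-looking keys whose value is not a provider placeholder."""
    return sorted(
        key
        for key, value in config.items()
        if SENSITIVE.search(key) and not PLACEHOLDER.match(str(value))
    )


# Hypothetical connector configs: one delegates the lookup, one inlines the secret.
delegated = {
    "connection.user": "connect",
    "connection.password": "${file:/opt/connect/secrets/db.properties:password}",
}
inlined = {
    "connection.user": "connect",
    "connection.password": "hunter2",
}

print(inline_secrets(delegated))  # []
print(inline_secrets(inlined))    # ['connection.password']
```

With the placeholder in place, the API returns the reference rather than the resolved value, which is the behaviour the secret provider plugins rely on.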

Encrypting and anonymizing data streams is a common and complex challenge with Kafka, and businesses often need to re-architect their applications to accommodate it.

There are plenty of arguments for encrypting or hashing data in transit or at source, but this may be a compliance requirement you need to fulfil in any case.

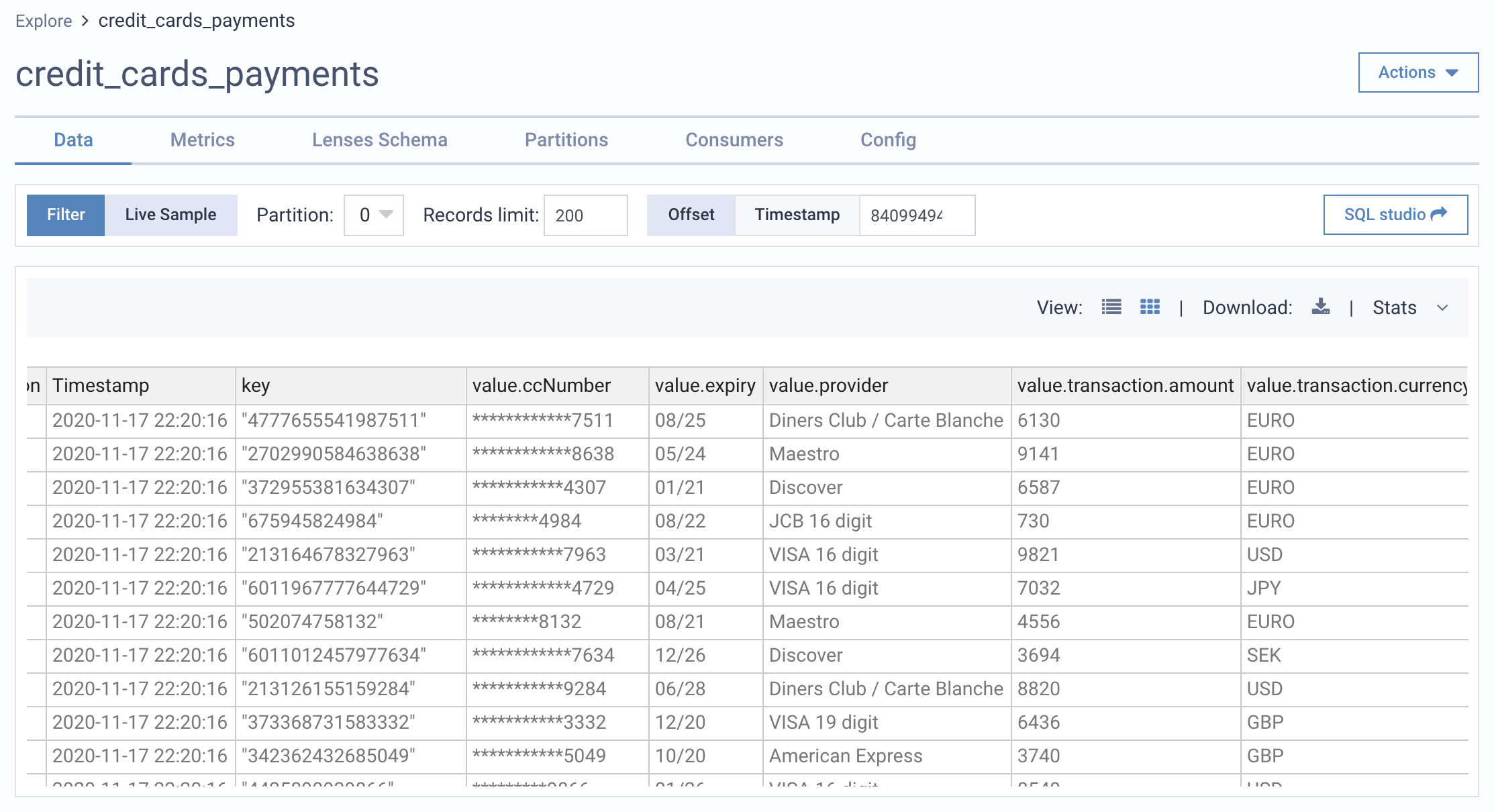

There are also times when data does not need to be encrypted or anonymized on the wire but certain fields should be masked for non-privileged users (such as IT operators, developers, etc.). This is particularly true for investigation or exploration of data.

Data Policies allow fields across datasets to be masked based on defined rules. They do so without affecting the integrity of the data streams.
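As a rough illustration of rule-based masking at read time, the sketch below applies masking functions to fields whose names match a pattern and returns a masked copy, leaving the underlying record untouched. The rule format, field names and masking functions are hypothetical and are not the Lenses Data Policies syntax.

```python
from fnmatch import fnmatchcase


def redact(value) -> str:
    """Replace the whole value with a fixed mask."""
    return "****"


def last4(value) -> str:
    """Keep only the last four characters visible."""
    text = str(value)
    if len(text) <= 4:
        return "*" * len(text)
    return "*" * (len(text) - 4) + text[-4:]


# Hypothetical policies: (field-name glob, masking function). First match wins.
POLICIES = [("*email*", redact), ("card_number", last4)]


def mask_record(record: dict, policies=POLICIES) -> dict:
    """Return a masked copy of `record`; the original is not modified."""
    masked = {}
    for field, value in record.items():
        rule = next(
            (fn for pattern, fn in policies if fnmatchcase(field.lower(), pattern)),
            None,
        )
        masked[field] = rule(value) if rule else value
    return masked


record = {
    "customer_id": 42,
    "contact_email": "a@example.com",
    "card_number": "4111111111111111",
}
print(mask_record(record))
# {'customer_id': 42, 'contact_email': '****', 'card_number': '************1111'}
```

Because masking happens on the view rather than on the stored data, downstream consumers with the right privileges still see the original values.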

Whatever controls are put in place, you should still expect and anticipate audit reports. You may be asked to report on which teams and users are processing certain datasets for example. Data catalogs provide a great way of discovering datasets that exist across an environment.

If using Lenses, the Data Catalog discovers metadata generated by your applications in real time, making those audits much faster.

The combination of data masking and Data Policies also chips in to help during audits. The same rules that mask data can also detect and list those applications processing the relevant data.

There is also a Topology view, which helps to address data lineage and data provenance questions.

Getting production sign-off for critical projects may require having real-time security monitoring in place.

You can expect security operations teams to request audit logs for user behavior monitoring which can detect abnormal activity and insider threats.

OS level audit logs from your Kafka Connect infrastructure are a big requirement to cover and there are plenty of well-documented security logging solutions for this.

For application-level audits of Kafka Connect, however, things can prove more difficult.

With Lenses, we've made sure data operations, such as restarting a connector or changing its configuration, leave an audit trail.

Audits can then be fired off into a SIEM or User Behavior solution with native integration into Splunk, or by using a webhook for other solutions.
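For the webhook route, the receiving side typically expects a JSON document per audited action. The sketch below builds such an event and the corresponding HTTP POST request using only the Python standard library. The payload fields, the action name and the webhook URL are all hypothetical; they are not the Lenses audit format or a Splunk schema.

```python
import json
import urllib.request
from datetime import datetime, timezone


def audit_event(user: str, action: str, connector: str, cluster: str) -> dict:
    """Build one audit record for an action taken on a connector."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "action": action,
        "connector": connector,
        "cluster": cluster,
    }


def build_request(event: dict, webhook_url: str) -> urllib.request.Request:
    """Wrap the event in a JSON POST request for the receiving webhook."""
    body = json.dumps(event).encode("utf-8")
    return urllib.request.Request(
        webhook_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical values for illustration only.
event = audit_event("jane.doe", "connector.restart", "metrics-to-es", "dev-eu-1")
request = build_request(event, "https://siem.example.com/hooks/kafka-connect")

# Sending is left commented out so the sketch has no network side effects:
# urllib.request.urlopen(request, timeout=5)
print(request.get_method(), request.full_url)
```

Keeping the user, the action and the target in separate fields makes it easier for a SIEM or user behavior tool to correlate connector changes with other activity by the same identity.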

Many think of governance for Kafka Connect too late.

Getting on the front foot will put you in the good books with security and compliance. It will also avoid an audit that puts you in the eye of a massive storm. Explore deploying connectors through Lenses in an all-in-one Kafka & Kafka Connect environment available as a Docker Container or as a Cloud sandbox.