Andrea Fiore

Increase compliance with Kafka audits

The latest Lenses Kafka audits log how, when and by whom data in Kafka Topics has been accessed.

Suppose that you work for a government tax agency. You recently noticed that some tax fraud incident records have been leaked on the darknet. This information is held in a Kafka Topic.

The incident response team wants to know who has accessed this data over the last six months. You panic.

It is a common requirement for business applications to maintain some form of audit log, i.e. a persistent trail of all the changes to the application’s data, so that teams can respond to this kind of situation. But for Kafka in particular, this can prove challenging:

How can you tell who the Topic has been exposed to in your organization?

Can you verify whether it has been manually removed by a rogue actor?

Can your SRE or incident response team easily conduct a post-mortem analysis following an outage or data loss, to pinpoint when a fatal Topic misconfiguration happened and who caused it?

We’re continuously building Kafka audit tools into Lenses, to help developers and security teams in the scenarios listed above.

Audit logs provide a simple and effective way to track relevant activity around your business data.

This is useful when it comes to reducing risk and meeting compliance requirements, including regulations such as GDPR, CCPA and HIPAA.

In other cases, it’s difficult for organizations to know which datasets employees should be authorized to access. In response they apply a policy whereby employees are trusted not to query certain data that is not relevant, with continuous audits and insider threat monitoring happening to ensure they haven’t breached trust.

But even in a ‘business-as-usual’ scenario, Lenses Kafka audit logs increase the value of business data by providing contextual information and making different types of data professionals (e.g. engineers, analysts, DevOps) aware of each other's interactions with the platform and the data itself.

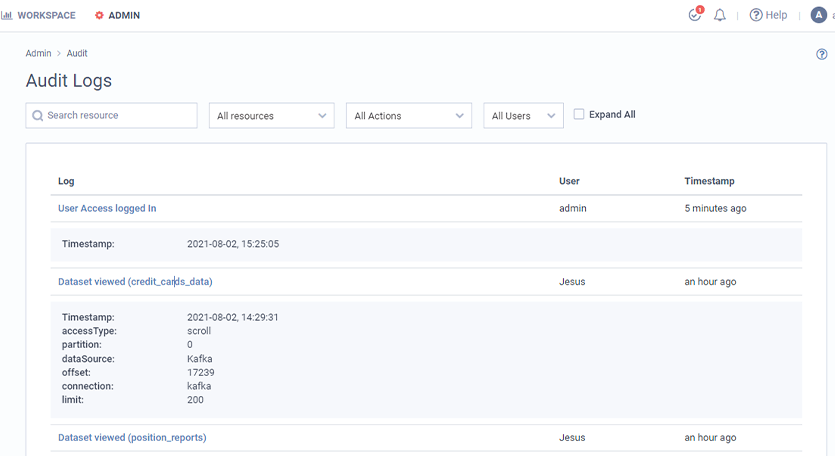

Whenever the Lenses API is interacted with or external resources are modified, corresponding audit entries persist in the Lenses database.

New Kafka audits are appended to the log whenever Kafka Topics, Schema Registry subjects, or Lenses groups, users and policies are created, updated or deleted.

This searchable trace of all relevant resources helps our customers meet pressing security, compliance and accountability requirements across their data platform.

Lenses now provides a Kafka audit trail for read-only data access activity as well as API actions that directly modify resources.

For all supported data connections (i.e. currently Kafka, Postgres or Elasticsearch), Lenses now audits the API calls whereby users scroll through records from the dataset detail view as well as any custom SELECT queries run via the Lenses SQL studio.

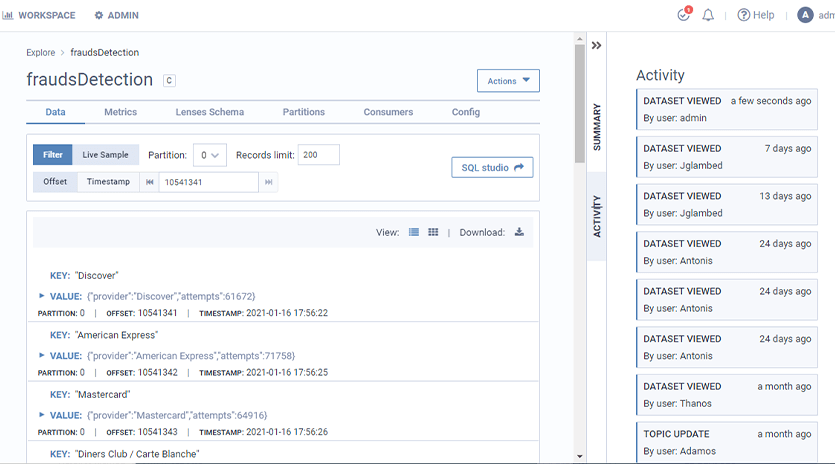

In this release we have also brought audits much closer to the data itself: alongside other relevant audit entry types, data access audits are now directly visible from a dedicated tab in the right-hand sidebar of the topic/dataset view.

While extending the scope of Lenses Kafka audits, we strive to gracefully balance several, at times conflicting, requirements. I will now expand on these requirements and highlight the relevant configuration options Lenses provides to tweak access audits according to your specific need.

By default, Lenses 4.3 logs audits for all data access activity. This will help our users discover the new feature and take advantage of it. However, should you not feel comfortable with this level of auditing, opting out is as easy as setting a configuration flag to false:

`lenses.audit.data.access = false`

Tip: remember, if you deploy Lenses in Docker containers, any config parameter can be set as an environment variable by uppercasing its name and replacing dots with underscores, e.g.

`LENSES_AUDIT_DATA_ACCESS=true`
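The naming rule in the tip above is mechanical, so it can be sketched in a couple of lines of Python. The helper name `to_env_var` is hypothetical and only illustrates the convention described here; it is not part of Lenses.

```python
def to_env_var(config_key: str) -> str:
    """Uppercase the config key and replace dots with underscores."""
    return config_key.upper().replace(".", "_")


# Config keys mentioned in this post.
for key in ("lenses.audit.data.access", "lenses.audit.data.max.records"):
    print(f"{key} -> {to_env_var(key)}")
```

For instance, `lenses.audit.data.access` maps to `LENSES_AUDIT_DATA_ACCESS`, matching the example above.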

To avoid overloading its database with an unbounded number of audit records produced by frequent reads, Lenses periodically trims data access log entries in excess of a given threshold (defaulting to 500K records). This threshold is configurable through the `lenses.audit.data.max.records` key, and can be increased or decreased depending on your retention requirements and database capabilities.

The clean-up interval defaults to 300000 milliseconds (i.e. five minutes) and can be changed by setting `lenses.interval.audit.data.cleanup` to a different value (expressed in milliseconds).

Alternatively, should you want to keep data access audits indefinitely, you can simply disable the periodic clean-up task by setting the following:

`lenses.audit.data.max.records = -1`
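To make the effect of the threshold concrete, here is a minimal Python sketch of one clean-up pass. It is not how Lenses implements trimming: the function `trim_audit_log` is hypothetical, and it assumes that the oldest entries are the ones discarded, which this post does not state.

```python
from typing import List

DEFAULT_MAX_RECORDS = 500_000  # default threshold quoted in the text


def trim_audit_log(entries: List[dict], max_records: int = DEFAULT_MAX_RECORDS) -> List[dict]:
    """Return the entries that survive one clean-up pass.

    Assumption: `entries` is ordered oldest first, and the oldest
    entries are dropped once the threshold is exceeded.
    """
    if max_records < 0:
        # A value of -1 disables the clean-up: everything is kept.
        return list(entries)
    if max_records == 0:
        return []
    # Keep only the newest `max_records` entries.
    return list(entries[-max_records:])


log = [{"id": i} for i in range(5)]
kept = trim_audit_log(log, max_records=3)
unlimited = trim_audit_log(log, max_records=-1)
```

With a threshold of 3, only the three most recent of the five entries remain, while `-1` leaves the log untouched.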

Lenses audit logs do offer some basic filtering capabilities. These should be all you need in order to answer common operational questions such as:

What users/service accounts are running SQL queries to access records from topic X?

When was the schema Y updated last?

And by whom?

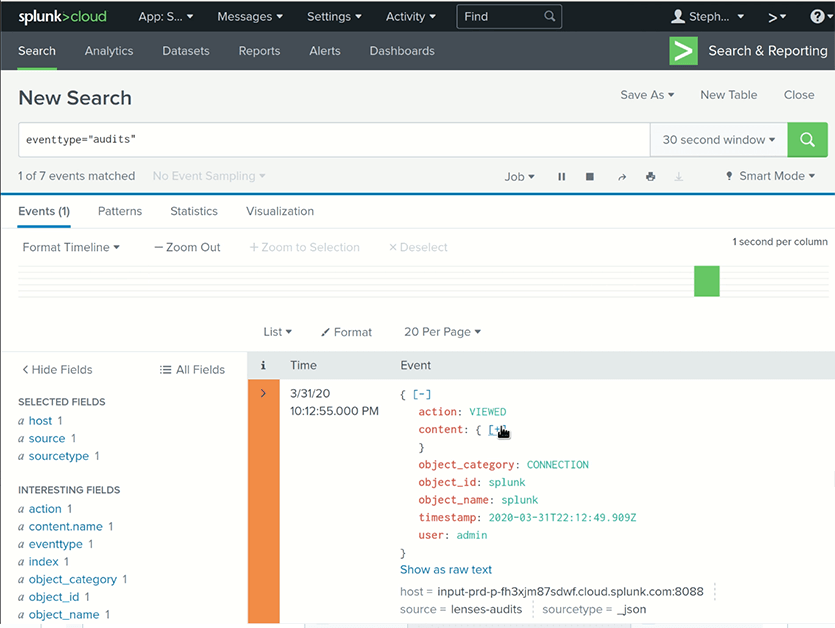

We provide a fairly flexible escape hatch for more advanced scenarios such as filtering by a given time window, or matching by a key/value pair that is specific to a given audit entry: enter audit channels.

Through this feature, Lenses can establish a secure connection to Splunk or any other data system that exposes an HTTP API. Once the channel connection is configured, Lenses will automatically sink all its audit log entries in the target system, thus allowing you to run queries directly in your system of choice.
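As an illustration of the kind of query this enables, the Python sketch below filters exported audit entries by a time window and by key/value pairs. The entry fields (`timestamp`, `user`, `action`, `resource`) and the function `filter_audits` are hypothetical; the actual shape of the entries Lenses sends to a channel may differ, and in practice you would express this in the query language of your target system.

```python
from datetime import datetime
from typing import Any, Dict, Iterable, List


def filter_audits(
    entries: Iterable[Dict[str, Any]],
    start: datetime,
    end: datetime,
    **match: Any,
) -> List[Dict[str, Any]]:
    """Keep entries with start <= timestamp < end whose fields equal `match`."""
    selected = []
    for entry in entries:
        ts = datetime.fromisoformat(entry["timestamp"])
        if not (start <= ts < end):
            continue
        if all(entry.get(key) == value for key, value in match.items()):
            selected.append(entry)
    return selected


# Made-up sample entries, for illustration only.
sample = [
    {"timestamp": "2021-03-01T10:00:00", "user": "alice", "action": "QUERY", "resource": "tax-fraud"},
    {"timestamp": "2021-03-02T11:30:00", "user": "bob", "action": "QUERY", "resource": "tax-fraud"},
    {"timestamp": "2021-04-15T09:00:00", "user": "alice", "action": "QUERY", "resource": "payments"},
]

march_reads = filter_audits(
    sample,
    start=datetime(2021, 3, 1),
    end=datetime(2021, 4, 1),
    resource="tax-fraud",
)
```

Here `march_reads` contains the two March entries for the `tax-fraud` resource, which is the sort of answer the incident response team in the opening scenario would be after.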

As an incremental improvement over an already established capability of our product, we see data access audits for Kafka as an important stepping stone towards a richer data catalog experience in Lenses.

We hope this new feature will help you work more effectively with your data, and we are looking forward to your feedback, suggestions and comments on how Kafka audit monitoring might help increase awareness, transparency and collaboration in your organization.
