Migrate self-managed Kafka to HDInsight via GitOps

Benefits and an example of adopting a GitOps approach to migrating self-managed Kafka to HDInsight

Our mission is to enable organizations to focus on what really matters: their business, their IP, their data.

Cloud providers such as Azure offer ready-made, managed services that let the business concentrate on generating business value. They give you a range of solutions: data stores, key vaults, container services such as Kubernetes, and streaming platforms such as Apache Kafka in the form of HDInsight.

But what happens if I’m not in the cloud? How do I transition so that my organization can benefit from the offerings available to build a complete data platform in Azure?

I have previously talked about how Lenses embraces GitOps and the benefits it provides:

Deployment of flows in a repeatable fashion

Promotion between environments

Standardised and familiar workflows across engineering, ops, …

Meet governance — everything audited & version controlled

Drive automation to accelerate delivery

These benefits apply regardless of whether you are promoting flows from a development environment to production, from one managed service to HDInsight, or from Self-Managed to HDInsight. As far as Lenses is concerned, it’s an Apache Kafka cluster, a commodity to be consumed and used to facilitate a business goal.

Let’s dig deeper with an example. I have a Self-Managed Kafka cluster and I want to migrate to HDInsight Kafka.

First, we will concentrate on topics. I may have thousands of topics. How do I ensure that their configuration (the metadata) is migrated efficiently?

I could do this manually, but that is error-prone and time-consuming and, importantly, lacks governance and auditing. A better approach is to automate it, which is what we can achieve with Lenses and a GitOps approach.

Lenses has a UI, but this is just another client of the APIs. Every action is also available via the lenses-cli, and all actions are audited and bound by role-based security.

This command-line tool has the ability to export and import all resources, either selectively or in bulk, as YAML or JSON files. These files can then be version controlled and promoted through a standard CI/CD pipeline.

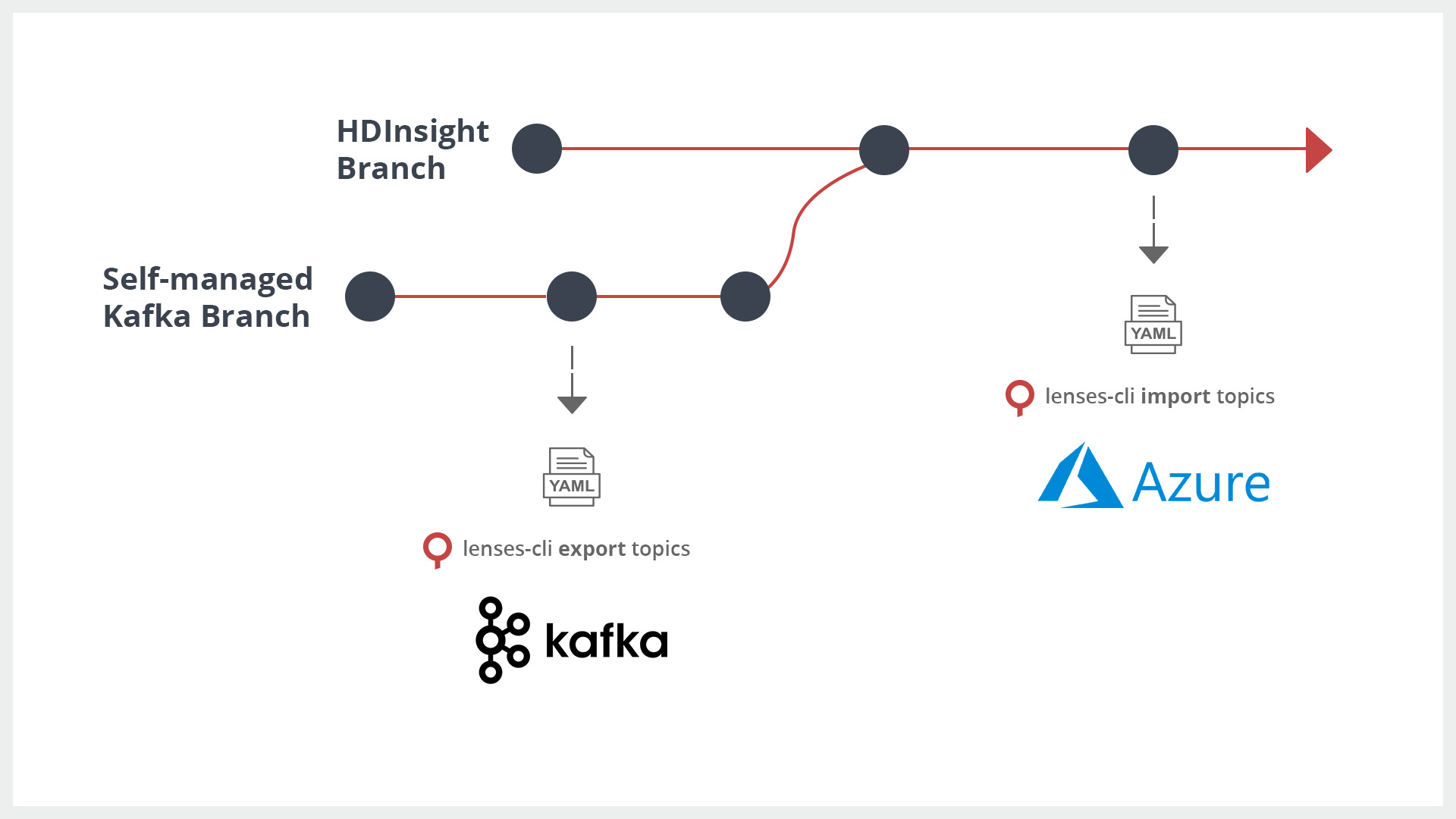

Let’s say I have a GitHub repo linked to an Azure DevOps pipeline. It has a branch called Self-Managed, which represents the current state of the topics on my self-managed legacy cluster. I will create a new branch called HDInsight to hold the desired state of my new HDInsight Kafka cluster.

I want to sync my topic definitions from my Self-Managed cluster to HDInsight. The flow is simple:

Check out the Self-Managed branch

Run the lenses-cli export topics command, configured against the Lenses instance managing my Self-Managed cluster. This either bootstraps the branch with all topics that currently exist in the Self-Managed cluster or produces a delta, adding new topics or updating existing ones with new configuration details

Commit and push the exported files that are now on the Self-Managed branch

Create a Pull Request on the HDInsight branch
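The steps above can be sketched as a small Python helper that lists the commands a pipeline job might run. This is only an illustration under stated assumptions: the `--dir` flag, the `topics` directory name and the commit message are placeholders rather than confirmed lenses-cli options, and opening the Pull Request is left to whatever your Git hosting provides.

```python
import shlex
import subprocess
from typing import List


def export_flow_commands(branch: str = "Self-Managed",
                         export_dir: str = "topics") -> List[List[str]]:
    """Return the export-and-commit steps, in order, as argument lists."""
    return [
        ["git", "checkout", branch],
        # Assumption: "--dir" and the directory name are illustrative;
        # check the lenses-cli help for the options your version supports.
        ["lenses-cli", "export", "topics", "--dir", export_dir],
        ["git", "add", export_dir],
        ["git", "commit", "-m", "Export topic definitions from Self-Managed"],
        ["git", "push", "origin", branch],
    ]


def run(commands: List[List[str]], dry_run: bool = True) -> None:
    """Print each command, or execute it when dry_run is False."""
    for cmd in commands:
        if dry_run:
            print(shlex.join(cmd))
        else:
            # check=True stops the flow at the first failing step.
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run(export_flow_commands())  # dry run: only prints the commands
```

Keeping the commands as data makes the flow easy to review in a dry run before the pipeline is allowed to execute it.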

At this point, I now have the current state in Git for my Self-Managed cluster. I have also started creating my audit trail via the pull request. Organizations can then follow an approval process according to their own policies.

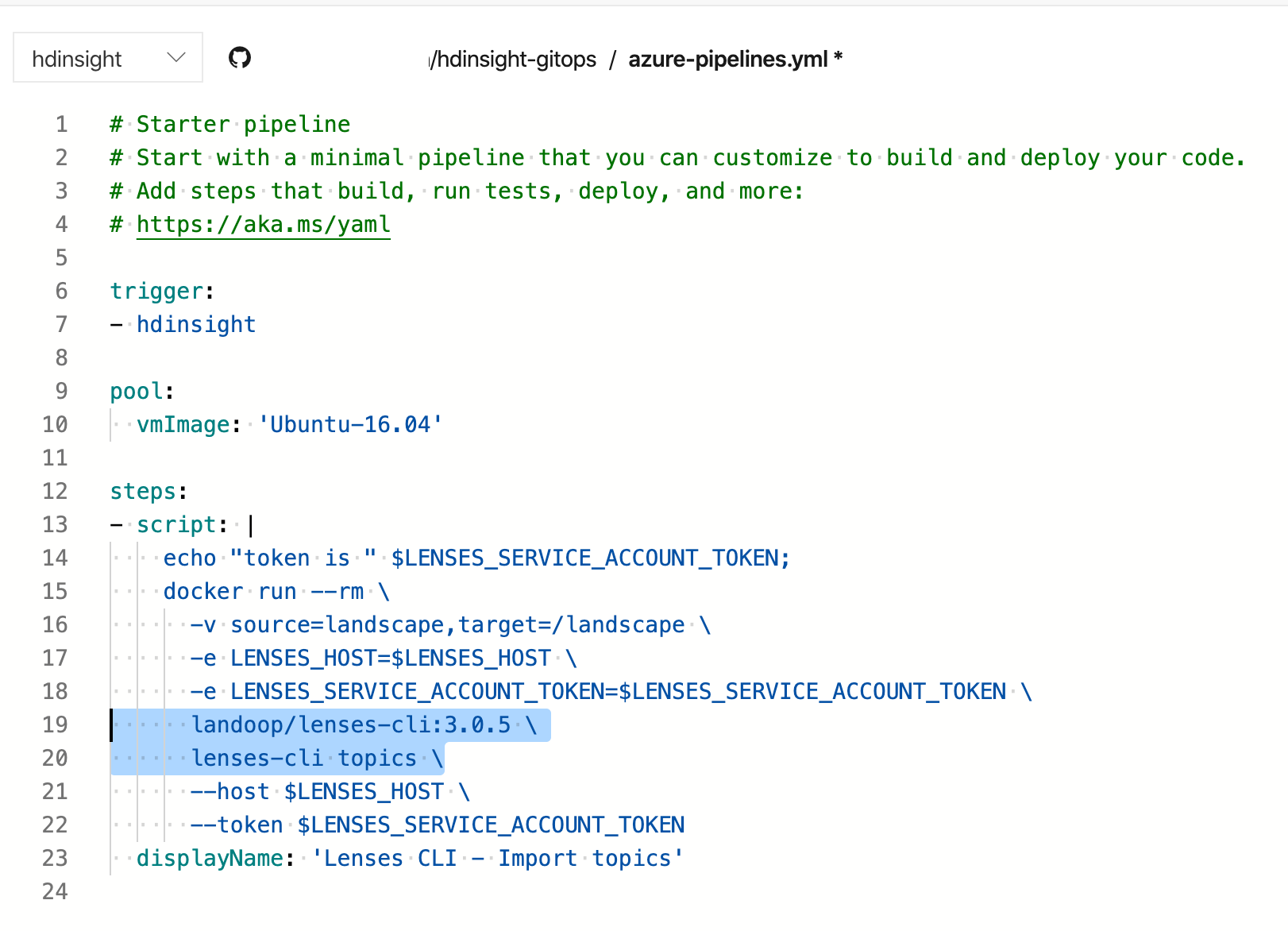

Once this Pull Request is approved, we can easily apply this desired state. An Azure DevOps pipeline can run to create the topics. The step in this job is:

lenses-cli import topics

That’s it.

We use the lenses-cli, configured to point to the Lenses instance managing our HDInsight cluster, and Lenses does the rest. It will create the topics defined in the HDInsight branch and also create an audit entry.
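To make the idea of applying a desired state more concrete, here is a minimal Python sketch that compares the topic definitions in Git with the topics already on the target cluster and works out what would be created or updated. It is not how Lenses implements the import; the dictionary layout and the configuration keys are hypothetical and only stand in for whatever the exported files contain.

```python
from typing import Dict, List, Tuple

# Hypothetical shape: topic name -> configuration values.
TopicConfig = Dict[str, object]


def plan_changes(desired: Dict[str, TopicConfig],
                 existing: Dict[str, TopicConfig]) -> Tuple[List[str], List[str]]:
    """Return (topics to create, topics to update) to reach the desired state."""
    to_create = sorted(name for name in desired if name not in existing)
    to_update = sorted(
        name for name in desired
        if name in existing and desired[name] != existing[name]
    )
    return to_create, to_update


if __name__ == "__main__":
    desired = {
        "orders": {"partitions": 6, "retention.ms": 604800000},
        "payments": {"partitions": 3, "retention.ms": 86400000},
    }
    existing = {
        "orders": {"partitions": 6, "retention.ms": 86400000},
    }
    print(plan_changes(desired, existing))
```

In this sketch, topics that exist on the cluster but are absent from Git are left alone; whether a real pipeline should delete them is a policy decision for the Pull Request review.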

The same approach can also be used for all resources managed by Lenses, not only topics:

Quotas

ACLs

Alerts

SQL Processors

Kafka Connectors

Data policies

For example:
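As a rough sketch, one pipeline job could loop over several resource types and build an export or import command for each. The subcommand names and the `--dir` flag in the Python below are assumptions made for illustration, not confirmed lenses-cli syntax, so verify them against the CLI help before relying on them.

```python
from typing import Dict, List

# Hypothetical mapping from the resources listed above to CLI subcommands.
# These names are placeholders and may differ in your lenses-cli version.
RESOURCE_SUBCOMMANDS: Dict[str, str] = {
    "Topics": "topics",
    "Quotas": "quotas",
    "ACLs": "acls",
    "Alerts": "alert-settings",
    "SQL Processors": "processors",
    "Kafka Connectors": "connectors",
    "Data policies": "policies",
}


def commands_for(action: str, base_dir: str = "landscape") -> List[List[str]]:
    """Build one command per resource type for 'export' or 'import'."""
    if action not in ("export", "import"):
        raise ValueError(f"unsupported action: {action}")
    return [
        # Assumption: each resource type is written to its own directory.
        ["lenses-cli", action, sub, "--dir", f"{base_dir}/{sub}"]
        for sub in RESOURCE_SUBCOMMANDS.values()
    ]


if __name__ == "__main__":
    for cmd in commands_for("export"):
        print(" ".join(cmd))
```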

This allows me to move my entire landscape from one environment to another using Git as a source of truth.

The result is repeatable, faster promotion of data flows into production.

If I want to actually sync data between the two environments, there are plenty of connectors available for this, which Lenses can manage.

Lenses supports service accounts, so you can create and revoke tokens to keep your pipeline secure. Lock down each service account using group access and namespaces to restrict what the lenses-cli can and cannot do, providing multi-tenancy. Each team could have its own pipelines to migrate and control specific topics and applications.
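To picture what a namespace restriction means for a team pipeline, the Python sketch below filters a list of topics down to those matching a team's patterns. Lenses enforces the real restriction on the server side through the service account's groups; this client-side filter, the topic names and the `payments.*` pattern are all hypothetical and only illustrate the idea.

```python
from fnmatch import fnmatchcase
from typing import Iterable, List


def topics_in_namespace(topics: Iterable[str],
                        patterns: Iterable[str]) -> List[str]:
    """Keep only the topics that match at least one namespace pattern."""
    allowed = list(patterns)
    return [
        topic for topic in topics
        # fnmatchcase gives case-sensitive, shell-style wildcard matching.
        if any(fnmatchcase(topic, pattern) for pattern in allowed)
    ]


if __name__ == "__main__":
    all_topics = ["payments.orders", "payments.refunds", "marketing.clicks"]
    print(topics_in_namespace(all_topics, ["payments.*"]))
```

A payments team's pipeline would then only export and import the topics it owns, while other teams run their own pipelines against their own namespaces.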

Using a GitOps approach, Lenses allows easy migration from Self-Managed Kafka clusters to HDInsight in an automated, secure, governed and audited way, so organizations can focus on what’s important: their data.

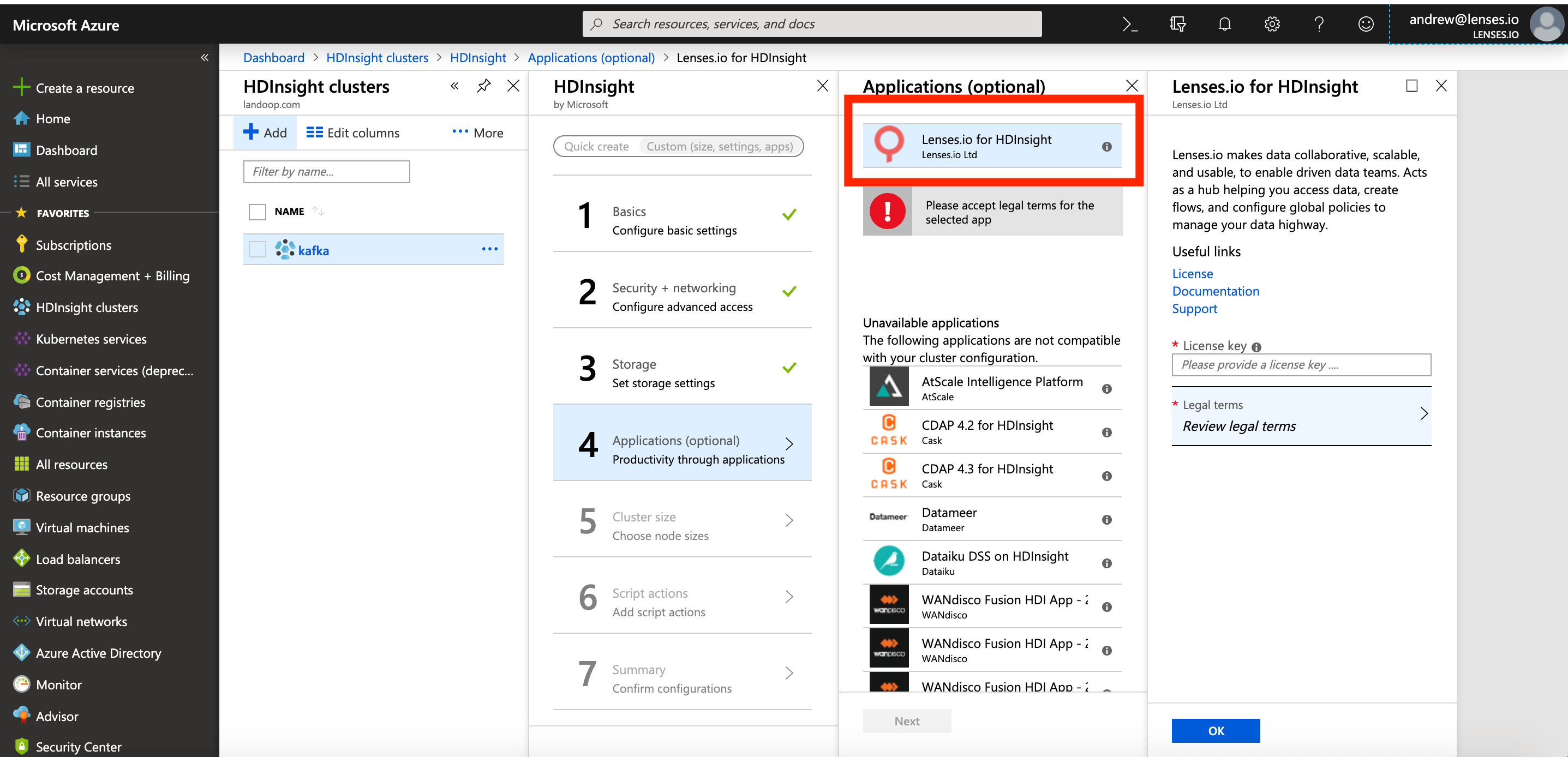

Try this out for yourself with the free all-in-one Lenses+Kafka box, available via docker pull, or connect Lenses to your Azure HDInsight Kafka cluster.