Connect Kafka to InfluxDB

Simplify and speed up your Kafka to InfluxDB sink with a Kafka-compatible connector, deployed via the Lenses UI/CLI, as a native plugin, or with Helm charts for Kubernetes.

About the Kafka to InfluxDB connector

License: Apache 2.0

The InfluxDB sink lets you write data from Kafka to InfluxDB. The connector takes the value of each Kafka Connect SinkRecord and inserts it as a new entry into InfluxDB. This is an open-source project, so it is not covered by Lenses support SLAs.
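To make the value-to-entry step concrete, here is a minimal Python sketch that renders a flat record value (a dict of field names to numbers, booleans or strings) as a single InfluxDB line-protocol point. It illustrates the idea only and is not the connector's own code: the `to_line_protocol` helper and all measurement, tag and field names are hypothetical.

```python
# Illustrative only: turns a flat record value into one InfluxDB
# line-protocol point. NOT the connector's implementation; all names
# used here are hypothetical.


def _escape_key(s: str) -> str:
    # Tag keys, tag values and field keys escape commas, equals signs and spaces.
    return s.replace(",", r"\,").replace("=", r"\=").replace(" ", r"\ ")


def _format_field(value) -> str:
    # bool is checked before int because bool is a subclass of int.
    if isinstance(value, bool):
        return "true" if value else "false"
    if isinstance(value, int):
        return f"{value}i"  # integer fields carry an 'i' suffix
    if isinstance(value, float):
        return repr(value)
    # String fields are double-quoted; backslashes and quotes are escaped.
    escaped = str(value).replace("\\", "\\\\").replace('"', '\\"')
    return f'"{escaped}"'


def to_line_protocol(measurement, value, tags=None, timestamp_ns=None):
    """Build 'measurement[,tag=v...] field=v[,...] [timestamp]'."""
    if not value:
        raise ValueError("a point needs at least one field")
    # Measurement names escape commas and spaces.
    head = measurement.replace(",", r"\,").replace(" ", r"\ ")
    for k, v in sorted((tags or {}).items()):
        head += f",{_escape_key(k)}={_escape_key(str(v))}"
    fields = ",".join(
        f"{_escape_key(k)}={_format_field(v)}" for k, v in value.items()
    )
    line = f"{head} {fields}"
    if timestamp_ns is not None:
        line += f" {timestamp_ns}"
    return line
```

For example, `to_line_protocol("sensor_readings", {"temperature": 21.5, "humidity": 40}, tags={"device": "d-01"})` returns `sensor_readings,device=d-01 temperature=21.5,humidity=40i`.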

Connector options for Kafka to InfluxDB sink

- Docker to test the connector: try it in our pre-configured Lenses & Kafka sandbox, packed with connectors.

- Use Lenses with your Kafka: manage the connector in Lenses against your own Kafka and data.

- Kafka to InfluxDB GitHub connector: download the connector from GitHub the usual way.

Connector benefits

- Flexible Deployment

- Powerful Integration Syntax

- Monitoring & Alerting

- Integrate with your GitOps

Why use Lenses.io to connect Kafka to InfluxDB?

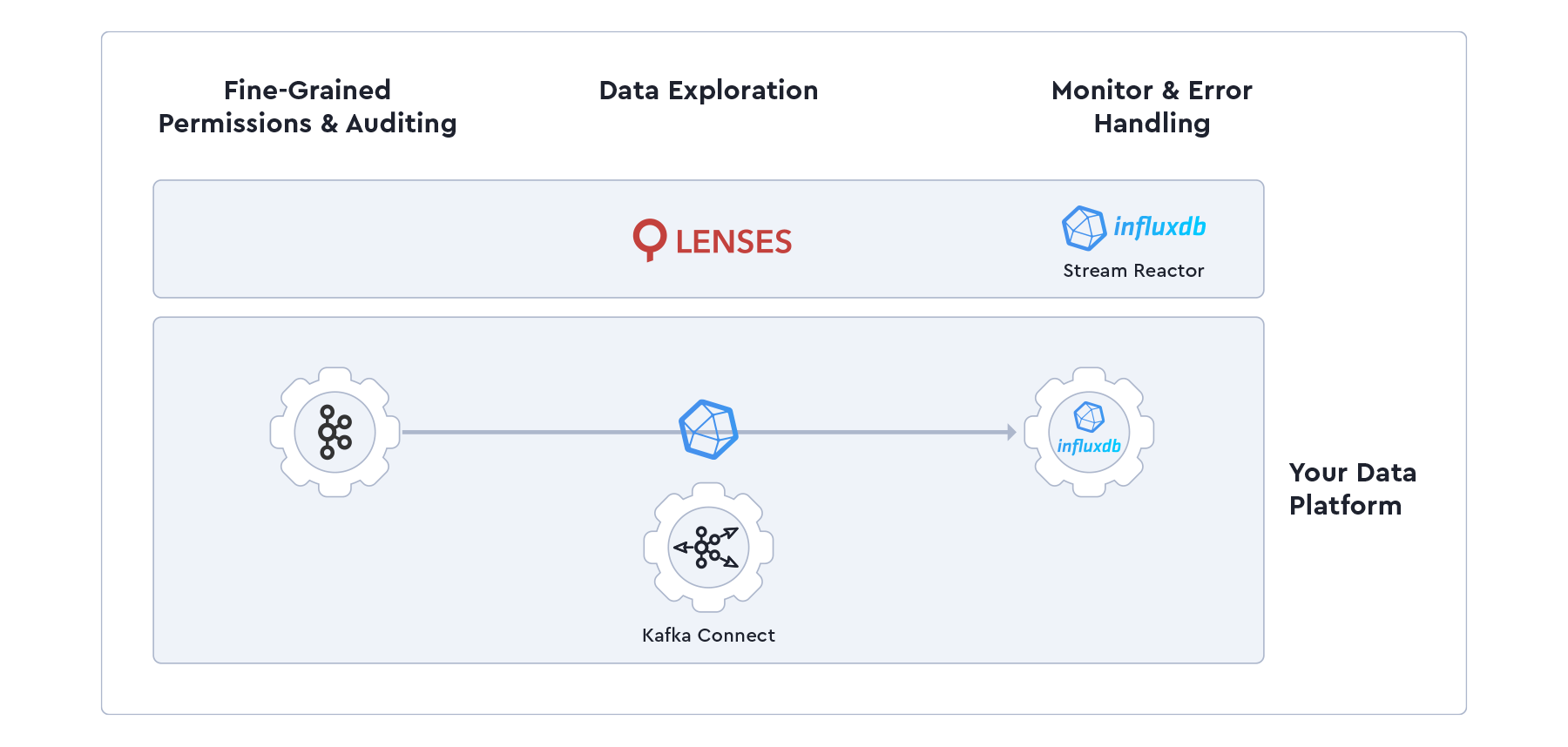

Managing the InfluxDB Stream Reactor connector (and every other connector on your Kafka Connect cluster) through Lenses.io saves you from learning terminal commands and from endless back-and-forth when sinking data from Kafka to InfluxDB. Lenses.io lets you monitor, process and deploy data with the following features:

- Error handling

- Fine-grained permissions

- Data observability

How to push data from Kafka to InfluxDB

InfluxDB lets a client attach a set of tags (key-value pairs) to each point it writes. The current connector version lets you specify these tags in the KCQL statement.
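As a sketch of what such a statement can look like, the hypothetical Python helper below assembles a KCQL string with a `WITHTAG` clause. The `INSERT INTO ... SELECT * FROM ... WITHTAG (...)` shape, which mixes payload field names with `key=value` constants, is assumed from the Stream Reactor InfluxDB examples; confirm the exact grammar for your connector version.

```python
# Hypothetical helper: assembles a KCQL statement for the InfluxDB sink.
# The WITHTAG syntax is an assumption based on Stream Reactor examples.


def build_kcql(measurement, topic, tag_fields=(), constant_tags=None):
    """Select all fields from `topic` into `measurement`, adding tags.

    tag_fields    -- names of payload fields whose values become tags
    constant_tags -- dict of fixed tag keys and values added to every point
    """
    stmt = f"INSERT INTO {measurement} SELECT * FROM {topic}"
    tags = list(tag_fields) + [
        f"{k}={v}" for k, v in (constant_tags or {}).items()
    ]
    if tags:  # only emit WITHTAG when at least one tag is requested
        stmt += f" WITHTAG ({', '.join(tags)})"
    return stmt
```

For instance, `build_kcql("payment_events", "payments", tag_fields=["from", "to"], constant_tags={"provider": "lenses"})` yields `INSERT INTO payment_events SELECT * FROM payments WITHTAG (from, to, provider=lenses)`, which would tag each point with the record's `from` and `to` values plus a constant `provider` tag.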

- Launch the stack

- Prepare the target system

- Start the connector
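For the last step, one way to start the connector outside the UI is the standard Kafka Connect REST API. The Python sketch below builds the JSON body for `POST /connectors` and can send it with the standard library. The connector class and the `connect.influx.*` property names are assumptions based on Stream Reactor naming, and authentication settings are omitted; check both against the release you deploy.

```python
import json
import urllib.request


def influx_sink_request(name, topic, kcql, influx_url, database):
    """Return the JSON body for POST /connectors (Kafka Connect REST API)."""
    config = {
        # Assumed class name and connect.influx.* keys (Stream Reactor
        # naming); older releases used a com.datamountaineer package.
        "connector.class": "io.lenses.streamreactor.connect.influx.InfluxSinkConnector",
        "tasks.max": "1",
        "topics": topic,  # standard sink setting: topics to consume
        "connect.influx.url": influx_url,
        "connect.influx.db": database,
        "connect.influx.kcql": kcql,
    }
    return json.dumps({"name": name, "config": config})


def submit(connect_url, body):
    """POST the body to a Connect worker and return its parsed JSON reply."""
    req = urllib.request.Request(
        f"{connect_url}/connectors",
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())
```

Calling `submit("http://localhost:8083", influx_sink_request(...))` would ask a Connect worker on its default REST port to create the connector; Lenses performs the equivalent through its UI and CLI.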