Antonios Chalkiopoulos

Real Time Streaming Data Policies

Identify and Protect your sensitive data in real time

Protecting sensitive or classified data is not a new problem. This blog post introduces Continuous Data Policies, which can protect sensitive and classified data at a field level on your streaming Data Platform on Apache Kafka.

A Data Policy is a simple but powerful idea that usually resides in your DataOps layer. Let us look at an example.

All we need now is a system that can continuously:

Track all (streaming) data that contain social security numbers

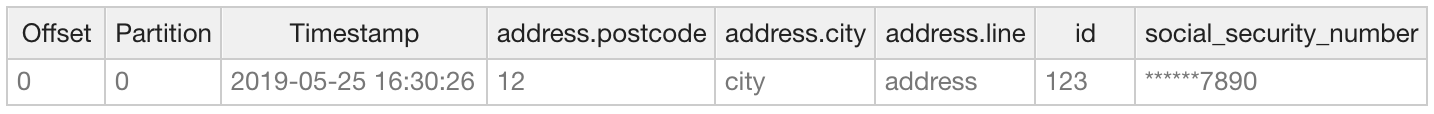

Track all applications and actors who are consuming or producing such data
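The two tracking duties above can be illustrated with a minimal sketch. It is not how Lenses implements tracking: the `PolicyTracker` class, its method names, and the topic and application names are all hypothetical, and it assumes messages have already been decoded into Python dictionaries.

```python
from collections import defaultdict

# Field name taken from the example in this post; the set itself is an assumption.
SENSITIVE_FIELDS = {"social_security_number"}


class PolicyTracker:
    """Remembers which topics carry sensitive fields and who touches them."""

    def __init__(self):
        self.sensitive_topics = defaultdict(set)  # topic -> matched field names
        self.actors = defaultdict(set)            # topic -> (app, role) pairs

    def observe_message(self, topic, message):
        # Record any top-level field whose name is covered by the policy.
        matched = SENSITIVE_FIELDS & set(message)
        if matched:
            self.sensitive_topics[topic] |= matched

    def observe_client(self, topic, app, role):
        # role is "producer" or "consumer".
        self.actors[topic].add((app, role))

    def report(self):
        # Only topics that actually carry sensitive fields are reported.
        return {
            topic: sorted(self.actors.get(topic, set()))
            for topic in self.sensitive_topics
        }


tracker = PolicyTracker()
tracker.observe_message("customers", {"name": "Ada", "social_security_number": "123-45-6789"})
tracker.observe_message("clicks", {"page": "/home"})
tracker.observe_client("customers", "billing-service", "consumer")
tracker.observe_client("customers", "crm-ingest", "producer")
tracker.observe_client("clicks", "web-analytics", "consumer")
print(tracker.report())
```

The report lists only the `customers` topic with its producer and consumer, because `clicks` never carried a field named in the policy.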

As a Data Officer, you now have visibility and clarity on messages containing social security numbers. Not only that, but you can also identify applications and actors and see how and why they access such data. “I understand security, encryption, SSL, etc., but can I not just protect or shield my data by adding a simple flag to the Data Policy?” one might ask:

protection: “last-4”

Now let us consider how Continuous Learning can be the essential ingredient in realizing the above vision. Our product, Lenses, has followed the principle of continuous learning since its inception and learns about the underlying data in any data format: JSON, AVRO, XML, even ProtoBuf and other serdes.

When a new topic is created and messages arrive, in any format, that contain "social_security_number", the field is immediately identified, its usage is tracked, and the data is protected. All of this happens continuously and fully autonomously.
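As a rough illustration of the identification step, the sketch below walks a decoded message and reports where a policy field appears, including inside nested objects and arrays. It assumes the message has already been deserialized into dictionaries and lists, whatever its wire format was; `find_sensitive_paths` and `POLICY_FIELDS` are hypothetical names, not part of Lenses.

```python
# Hypothetical policy: field names to watch for (from the example above).
POLICY_FIELDS = {"social_security_number"}


def find_sensitive_paths(value, fields=POLICY_FIELDS, path=()):
    """Yield the path to every field whose name appears in `fields`.

    `value` is a message already decoded into dicts and lists, so the
    walk itself does not depend on the original serialization format.
    """
    if isinstance(value, dict):
        for key, child in value.items():
            child_path = path + (key,)
            if key in fields:
                yield child_path
            # Keep descending: nested records may contain further matches.
            yield from find_sensitive_paths(child, fields, child_path)
    elif isinstance(value, list):
        for index, child in enumerate(value):
            yield from find_sensitive_paths(child, fields, path + (index,))


message = {
    "customer": {"name": "Ada", "social_security_number": "123-45-6789"},
    "dependents": [{"social_security_number": "987-65-4321"}],
}
paths = list(find_sensitive_paths(message))
print(paths)
```

Returning paths rather than values keeps the detection step separate from whatever protection is applied afterwards.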

Learning about the data is only the first dimension. Intelligence builds up when you know who is using it and how, where data originates and how it flows into target systems. Highlighting to everyone the entire application and business logic for all data is the holy grail.

Effectively, your modern Data Catalog now contains an always up-to-date metastore and data policies. From an architecture perspective, where you typically manage between 20 and 40 technologies, you would ideally build your data policies just once, in a future-proof way, and have them apply over multiple technologies.

That is why Continuous Data Policies and Continuous Learning live in the DataOps layer. DataOps is the practice of embracing collaboration and bringing together the different actors involved in data delivery. “Organizations focusing on DataOps are going to be leading on what those best practices are.”

Data Officer, Head of Operations, Head of Engineering, Data Engineer, Data Scientist, Architect, Business Analyst, QA, Head of Security, Head of Data Platform (everyone benefits from DataOps best practices)

Let us look at how the simple idea of automatically applied Continuous Data Policies benefits the entire organization, brings actors together, and opens up collaboration.

The Data Officer can now gain insight into all data and implement the organization's global Data Policies. Financial, medical, personal and classified data can now easily be tracked and protected across the entire DataOps platform in an automated way, with actionable reports and information about the data and application landscape.

The Head of Operations can take the Data Policies from the Data Officer and build up a policy.yaml file. With a single command in his terminal, he can apply the policies to one or more environments in an automated fashion.

$ lenses policy set STAGING policy.yaml

He can now easily understand how secure or sensitive data are used in the organization and share info with the team.

The Head of Engineering now has observability over data and application logic. Whether the team builds micro-services, kstream apps, or Spark, Python, Java or Scala applications, DataOps embraces all technologies equally, so the team can focus on delivering business value.

The Data Engineer can have a Data Platform where access to production data is available while the sensitivity of that data is respected. A last-4 protection policy would result in only the last four characters of a protected field being visible.
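To make the effect concrete, here is a minimal sketch of what a last-4 protection could do to a record. It rests on assumptions: that "last-4" means revealing only the final four characters, that separators such as dashes are masked too, and that values of four characters or fewer are hidden entirely. The `mask_last4` and `protect` helpers are hypothetical and only handle flat records.

```python
def mask_last4(value, mask_char="*"):
    """Hide every character except the last four."""
    text = str(value)
    if len(text) <= 4:
        # Assumption: a value this short is masked completely.
        return mask_char * len(text)
    return mask_char * (len(text) - 4) + text[-4:]


def protect(record, policy):
    """Return a copy of a flat record with the policy's protections applied."""
    protections = {"last-4": mask_last4}
    return {
        field: protections[policy[field]](value) if field in policy else value
        for field, value in record.items()
    }


policy = {"social_security_number": "last-4"}
record = {"name": "Ada", "social_security_number": "123-45-6789"}
protected = protect(record, policy)
print(protected)
# {'name': 'Ada', 'social_security_number': '*******6789'}
```

Fields that the policy does not mention pass through unchanged, so the engineer still sees realistic production data.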

Apart from applying Data Ethics, the data engineer can easily track all the application logic that supports the business.

The Data Scientist can also be an active member of the DataOps team. If access has been granted, he can safely access production-level data and, for example, feed his ML or AI models by tapping securely into real-time data, or measure the performance of an A/B test or a marketing campaign while tuning it.

The Architect now has the layers of his platform implemented with best practices and in a future-proof way. He can focus on bringing in more best practices and reinforcing the DataOps principles of collaboration.

People who understand the data are one of the most valuable assets of an organization. As sensitive data is secured, everyone on the business side, such as the Business Analyst, can be granted access to real-time feeds.

QA teams are enabled to thoroughly test applications and data, and to identify and report any discrepancies. Imagine a QA engineer seeing a JSON or AVRO message on a stream with a field named "social_security_num". He can highlight that the Data Policies are set to match only "social_security_number", so the team can either conform or evolve the data policies.

The Head of Security can inspect, understand and observe all data and apps and track classified data across all actors. He can set permissions to grant his team access to data and operations.
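A check like this can be approximated with fuzzy string matching. The sketch below uses Python's standard `difflib` module to flag field names that resemble a policy field without matching it exactly; the `near_misses` helper and the 0.8 similarity cutoff are illustrative assumptions, not Lenses behavior.

```python
import difflib

# Field name the policy matches exactly (from the example above).
POLICY_FIELDS = ["social_security_number"]


def near_misses(observed_fields, policy_fields=POLICY_FIELDS, cutoff=0.8):
    """Map each unmatched field name to the policy field it closely resembles."""
    suspects = {}
    for name in observed_fields:
        if name in policy_fields:
            continue  # exact match: the policy already covers this field
        close = difflib.get_close_matches(name, policy_fields, n=1, cutoff=cutoff)
        if close:
            suspects[name] = close[0]
    return suspects


observed = ["name", "social_security_num", "social_security_number"]
print(near_misses(observed))
```

Here only `social_security_num` is flagged, which is the cue for the team to either rename the field or extend the policy.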

The Head of the Data Platform enjoys not only the benefits of Continuous Data Policies but of DataOps principles. The team is highly collaborative and can deliver data products with confidence. The Data Platform can now open to even more teams and business units to collaborate.

“Our streaming Data Platform on Apache Kafka have messages with sensitive information. Transparently identifying where such data exist and how they are used, and also protecting sensitive data at a field level, for us was a requirement to cover GDPR regulations. Lenses additionally increased discussions around Data Ethics and DataOps in our center for excellence.”

SWEDISH PUBLIC EMPLOYMENT SERVICES

This was a short introductory blog post on Continuous Data Policies (CDP) and DataOps. Continuous Data Policies are finding increased usage in Governance, Retail, HealthCare, Insurance, Betting, Media and Financial companies. For additional information or to talk with Kafka experts