Marios Andreopoulos

A perfect environment to learn & develop on Apache Kafka

... And 5 use cases to get started


Apache Kafka has gained traction as one of the most widely adopted technologies for building streaming applications - but introducing it (and scaling it) into your business can be a struggle.

The problem isn’t with Kafka itself so much as the different components you need to learn and different tools required to operate it.

If you are motivated enough, you can invest money, effort and long Friday nights in learning, fixing and streamlining Kafka, and you’ll get there.

But if you prefer to spend your time on the data and would rather master Kafka’s value than its inner workings, fear not.

When we started Lenses.io, we experienced first-hand how difficult it can be to set up a Kafka development environment; so we decided to follow an anti-pattern and create a docker image with a full-fledged Kafka installation: the fast-data-dev docker.

The community loved it and we are grateful for this.

Fast forward, and we created Lenses.io with the understanding that the challenge had become operating Kafka, real-time applications and data. We wanted to create a tool that provides the best developer experience for Kafka: Lenses.

It was only natural to add it to fast-data-dev and make it free for developers, the people to whom we owe our success.

Hence the Lenses Box, our most popular download!

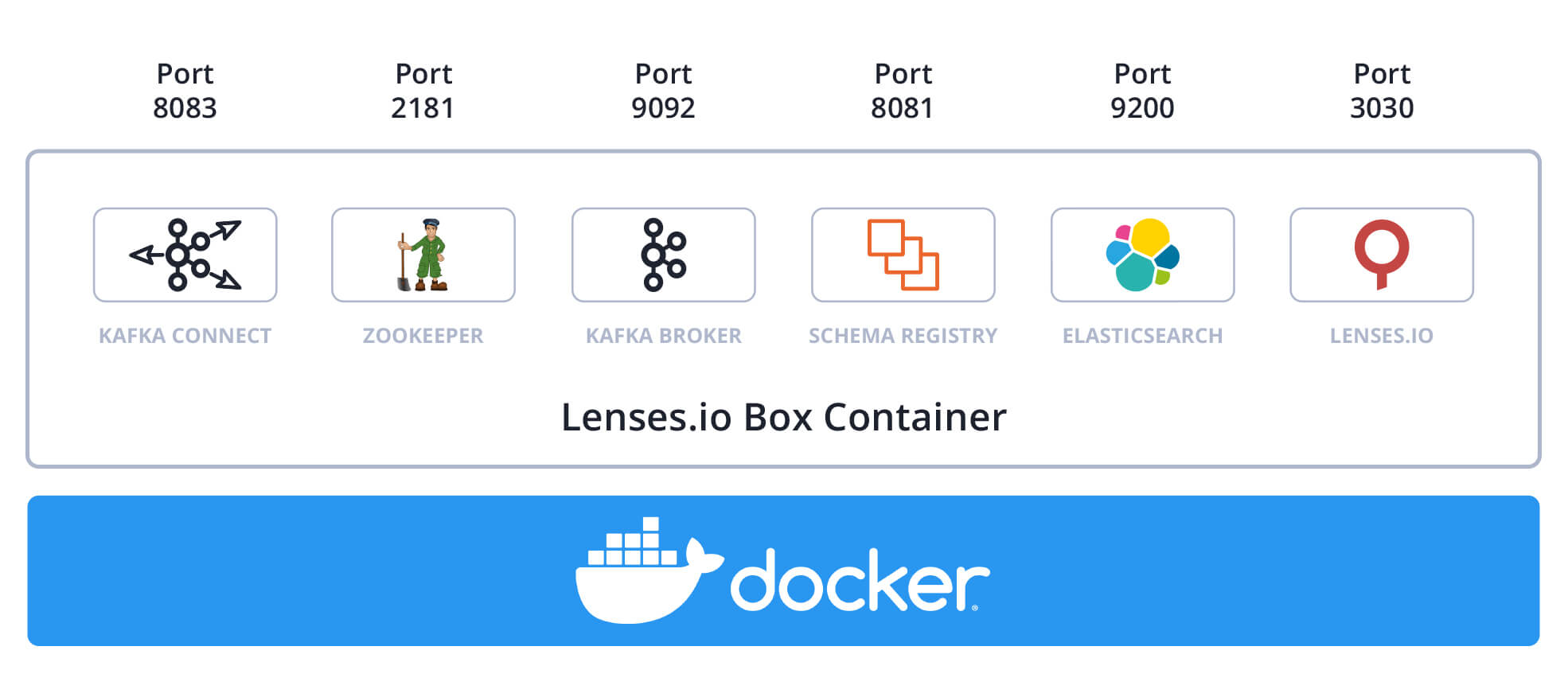

To start with, the Box gives you an environment including:

1-node Kafka and Zookeeper: your Kafka service, ready to receive data.

Kafka Connect: use it to bring data in and out of Kafka, from DBs for example.

Schema Registry: safely control the structure of your data in Kafka, powered by Avro.

Elasticsearch: ship your streaming results here for powerful search.

All in a single docker container.

That’s already quite a lot of work off your plate. Then we package...

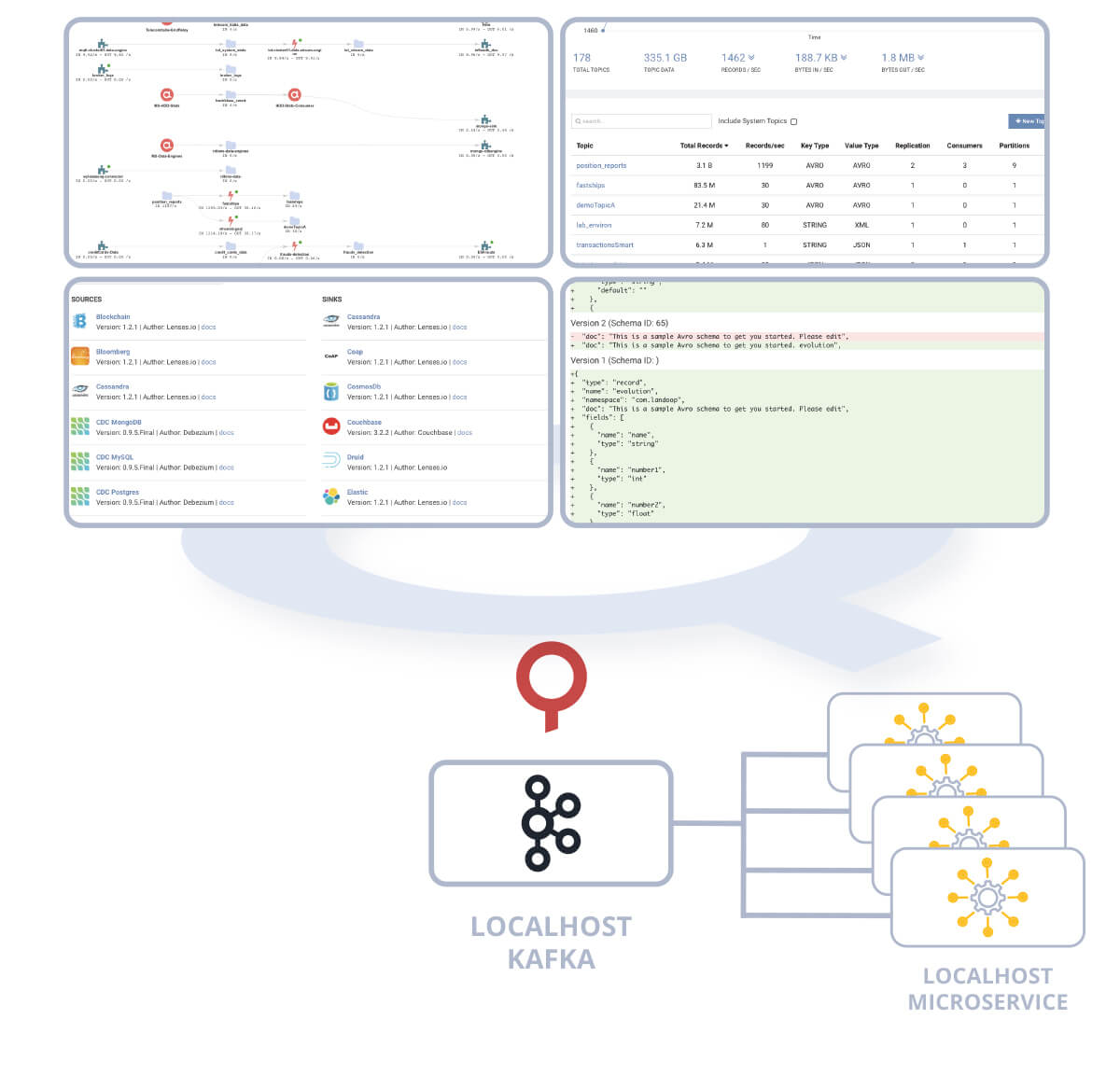

In the same container, you’ll get a Lenses.io workspace fully integrated with the Kafka environment.

If you’re not familiar with Lenses.io, it’s an engineering portal for building & operating real-time applications on Apache Kafka.

As a docker container that unifies all these different technologies into a single developer experience, Box is a perfect way to learn Kafka or to develop real-time applications on a local instance before promoting them to another Kafka environment.

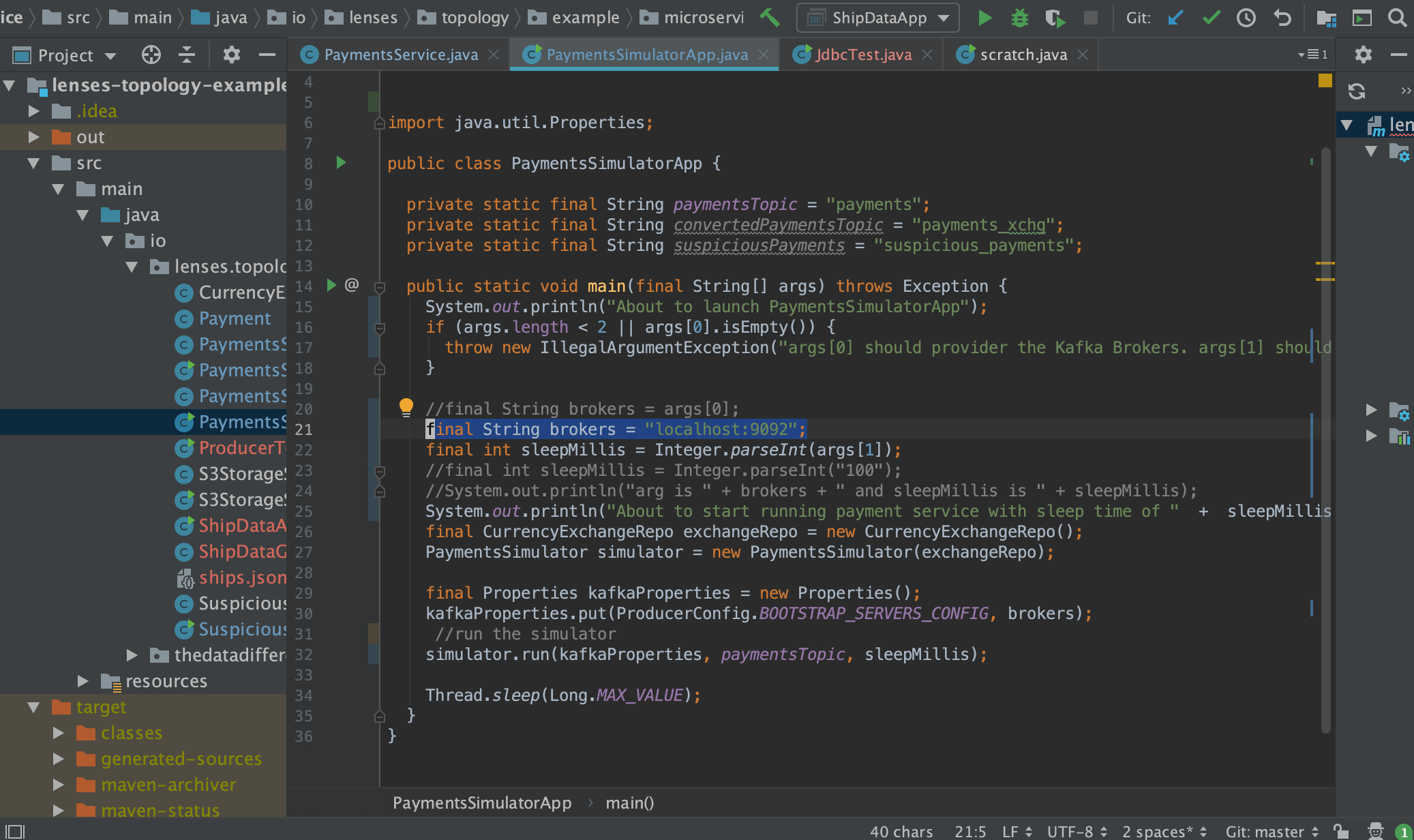

So, if you’re a developer, you can docker run Lenses.io Box on your machine and try out the following use cases.

Connect your custom application, written in whatever framework you choose, to the Kafka broker just as you would with any other Kafka environment. Once you’ve done this, you’ll have full visibility into your custom application within a workspace. This includes exploring data in the streams using SQL, and viewing and alerting on lag. If you use the Topology client, you’ll even be able to see the full topology of your pipelines.
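As a minimal sketch of what "just like any other Kafka environment" can look like from a client, the Python below uses the third-party kafka-python library. The broker address, topic name and consumer group are assumptions for illustration only (the address depends on which ports you publish from the container), and any other Kafka client would do equally well.

```python
"""Sketch: read a few records from a local Kafka broker with kafka-python."""

# Assumed values, not taken from the article: adjust them to your own setup.
BOOTSTRAP_SERVERS = "localhost:9092"  # assumed broker address published by the container
TOPIC = "my-app-events"               # hypothetical topic name
GROUP_ID = "my-app"                   # hypothetical consumer group


def consumer_settings(bootstrap_servers=BOOTSTRAP_SERVERS, group_id=GROUP_ID):
    """Return keyword arguments for kafka-python's KafkaConsumer."""
    return {
        "bootstrap_servers": bootstrap_servers,
        "group_id": group_id,
        # Start from the oldest available record when the group has no offsets yet.
        "auto_offset_reset": "earliest",
    }


def consume(topic=TOPIC, limit=10):
    """Print up to `limit` records from `topic`. Needs a reachable broker."""
    from kafka import KafkaConsumer  # third-party: pip install kafka-python

    consumer = KafkaConsumer(topic, **consumer_settings())
    try:
        for count, record in enumerate(consumer, start=1):
            print(record.partition, record.offset, record.value)
            if count >= limit:
                break
    finally:
        consumer.close()
```

Calling `consume()` while the container is running should make the hypothetical `my-app` group show up as a consumer of the topic, which is the kind of application the workspace can then report lag for.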

Your gateway into streaming structured data. With support for multiple standard serialization formats, custom formats, and Schema Registry, you can use Box to understand your data and experiment with schemas and evolution. Lenses SQL gives you a perfect playground to iterate and verify your schema changes.

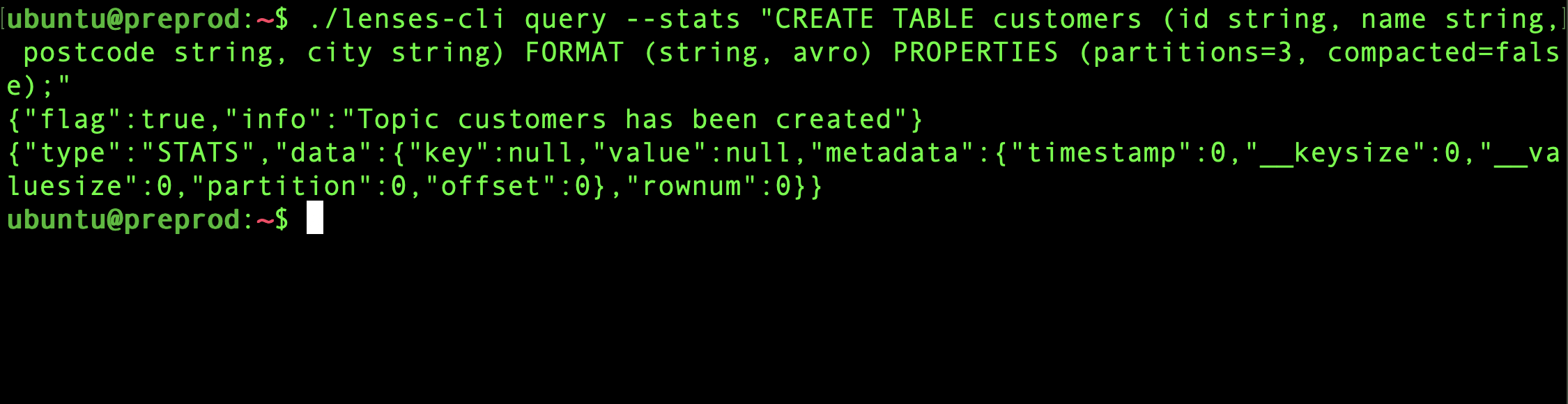
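To make "schemas and evolution" a little more concrete, here is a deliberately simplified Python sketch of one backward-compatibility rule for Avro record schemas: a reader on the new schema can still decode old records if every field it adds declares a default. The `payment` schemas are hypothetical, and the check is stricter and far less complete than what Avro or Schema Registry actually implement (it ignores type promotion, unions, aliases and nested records).

```python
"""Sketch: a simplified backward-compatibility check for Avro record schemas."""

# Hypothetical schemas used only for illustration.
PAYMENT_V1 = {
    "type": "record",
    "name": "payment",
    "fields": [
        {"name": "id", "type": "string"},
        {"name": "amount", "type": "double"},
    ],
}

# Adds a field WITH a default: old records can still be read.
PAYMENT_V2 = {
    "type": "record",
    "name": "payment",
    "fields": PAYMENT_V1["fields"] + [
        {"name": "currency", "type": "string", "default": "EUR"},
    ],
}

# Adds a field WITHOUT a default: old records have nothing to fill it with.
PAYMENT_V3 = {
    "type": "record",
    "name": "payment",
    "fields": PAYMENT_V1["fields"] + [
        {"name": "merchant", "type": "string"},
    ],
}


def is_backward_compatible(old_schema, new_schema):
    """Can a reader using new_schema decode records written with old_schema?

    Simplified rule set: fields new to the reader need a default, and fields
    present in both schemas must keep exactly the same type.
    """
    old_fields = {field["name"]: field for field in old_schema["fields"]}
    for field in new_schema["fields"]:
        previous = old_fields.get(field["name"])
        if previous is None:
            if "default" not in field:
                return False
        elif previous["type"] != field["type"]:
            return False
    return True


print(is_backward_compatible(PAYMENT_V1, PAYMENT_V2))
print(is_backward_compatible(PAYMENT_V1, PAYMENT_V3))
```

A local environment is a cheap place to try such changes against real data before a stricter compatibility policy rejects them elsewhere.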

Do you like SQL? If so, use it to create schemas directly. For example, the following statement will create a topic with an AVRO schema for the value of the topic.

You can run these statements from the browser or from a CLI.

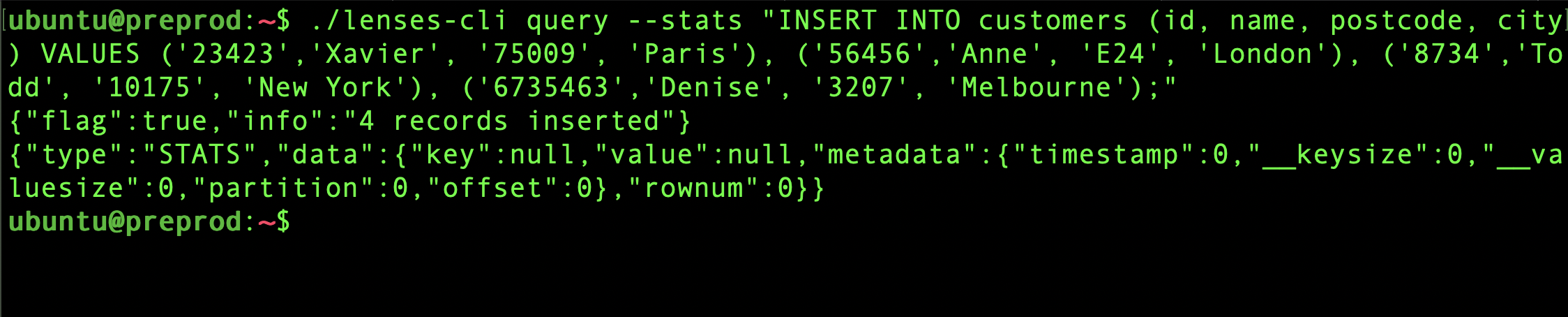

If you’re developing a streaming application that will read from Kafka, you are effectively making a Kafka consumer. You will be connecting it to the broker, and you might want to test how it behaves when actually consuming data from a stream.

The same SQL engine mentioned earlier also extends to injecting data into a topic. For example:

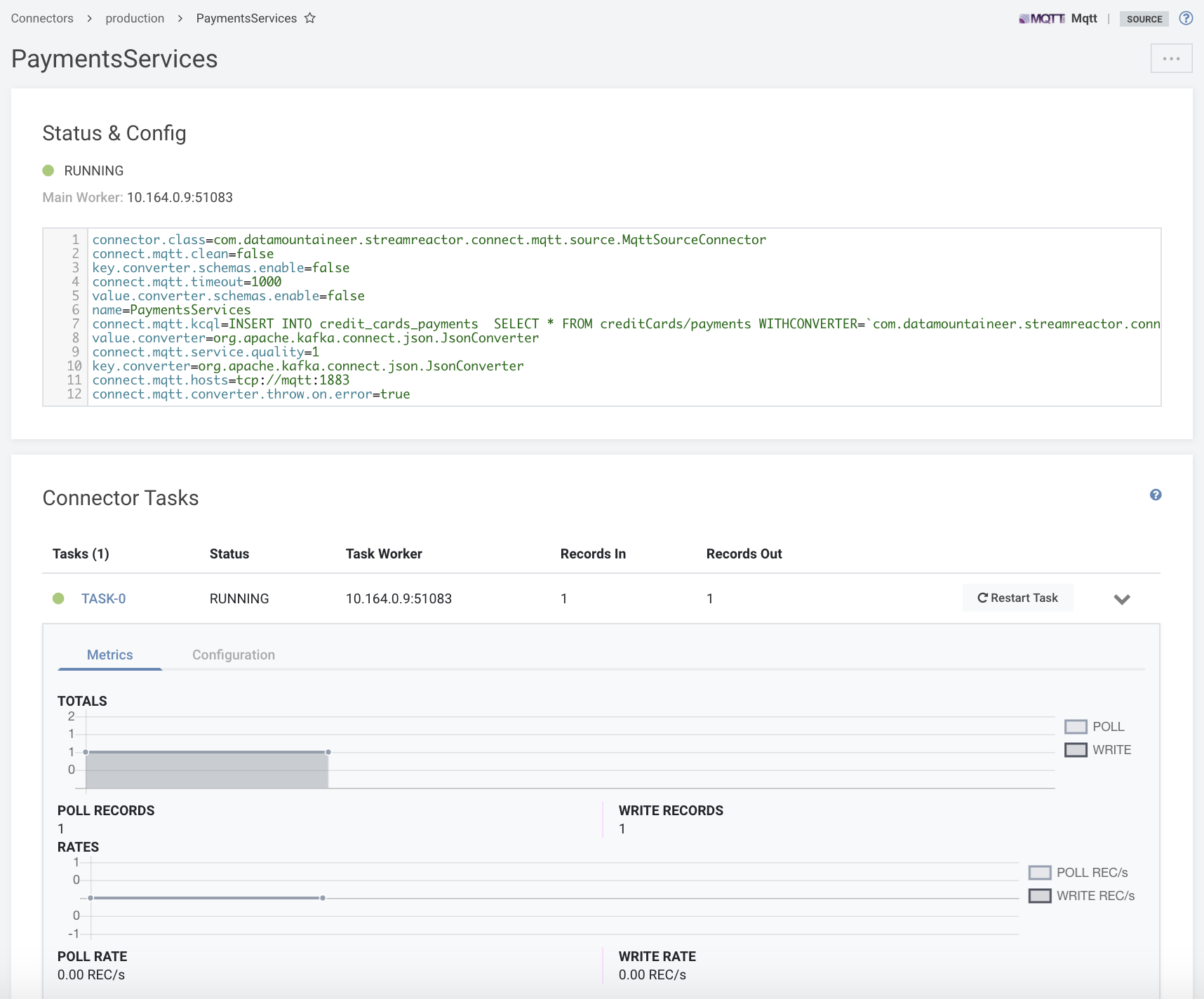
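If you would rather generate test input from code than from SQL, a client-side alternative is sketched below in Python. It builds a reproducible batch of records and, optionally, sends them with the third-party kafka-python producer. The record fields, topic name and broker address are all hypothetical and only stand in for whatever your consumer expects.

```python
"""Sketch: build reproducible test records and optionally send them to a topic."""
import json
import random


def make_test_records(n, seed=42):
    """Return n hypothetical payment records; the same seed gives the same data."""
    rng = random.Random(seed)
    return [
        {
            "id": i,
            "amount": round(rng.uniform(1, 100), 2),
            "currency": rng.choice(["EUR", "USD"]),
        }
        for i in range(n)
    ]


def serialize(record):
    """Encode a record as UTF-8 JSON bytes, the form sent as the message value."""
    return json.dumps(record, sort_keys=True).encode("utf-8")


def send_all(records, topic="payments-test", bootstrap_servers="localhost:9092"):
    """Send records to `topic`. Topic and address are assumptions; needs a broker."""
    from kafka import KafkaProducer  # third-party: pip install kafka-python

    producer = KafkaProducer(
        bootstrap_servers=bootstrap_servers,
        value_serializer=serialize,
    )
    try:
        for record in records:
            producer.send(topic, value=record)
        producer.flush()  # block until everything queued has been delivered
    finally:
        producer.close()
```

Seeding the generator keeps test runs comparable: the consumer under test sees the same input every time, so a change in its behaviour points at the consumer rather than at the data.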

The easiest way to bring data in and out of Kafka is with the Kafka Connect connectors. They are standard patterns for moving data between Kafka and other data systems.

The environment is packaged with a number of popular connectors such as Mongo, Elastic 6 and Influx, but you can also import others.

Want to bring in data from a database? Want to ship your results to Influx? No problem. Just a few clicks and Lenses will get those connectors running for you.

This is perfect for testing out the Kafka Connect configuration and the connection to those source and target systems. You benefit from the feedback loop, from error handling if you’ve misconfigured anything to seeing the performance of the flow, not to mention being able to explore with SQL the data you are sourcing or sinking.

Last but not least, Box is an environment to build stream processing applications with SQL.

The Streaming SQL engine allows you to define real-time data processing applications that enrich, filter, aggregate or reshape data streams. Ask things like:

What’s my total number of sales up till now, in real time?

How many clients are on my website checking out my new product right now?

The answer is in the data. And all it takes is a bit of SQL. No frameworks, no code, no rocket-science engineering.
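To make concrete what a continuously updated aggregate computes, here is the equivalent logic written out by hand in plain Python. This is only an illustration of the semantics, not Lenses SQL and not how the engine is implemented; the `product` and `amount` fields are hypothetical.

```python
"""Sketch: a running total per product, updated as each sale event arrives."""
from collections import defaultdict


def running_totals(events):
    """Yield (product, total_so_far) after every event, like a live aggregate."""
    totals = defaultdict(float)
    for event in events:
        totals[event["product"]] += event["amount"]
        yield event["product"], totals[event["product"]]


# Hypothetical sale events standing in for records arriving on a topic.
SALES = [
    {"product": "book", "amount": 12.5},
    {"product": "pen", "amount": 1.5},
    {"product": "book", "amount": 7.5},
]

for product, total in running_totals(SALES):
    print(product, total)
```

Even this toy version has to keep state between events; a streaming SQL engine takes on that bookkeeping, along with reading from and writing to topics, so that the query itself stays a few lines long.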

Here's an example:

You don’t need extra services either. With Box, the workload runs locally in your environment. Of course, with the full Lenses.io workspace, you can choose to deploy it over your existing Kubernetes infrastructure and run it at scale.

As its next evolution, yes, Box is now available as a Cloud service! A full Lenses-Kafka developer environment with zero setup.

Sign up for free on our new Lenses.io Portal.

Or, if you prefer, download the Docker Kafka development environment to your laptop.