The developer experience gap in Kafka data replication

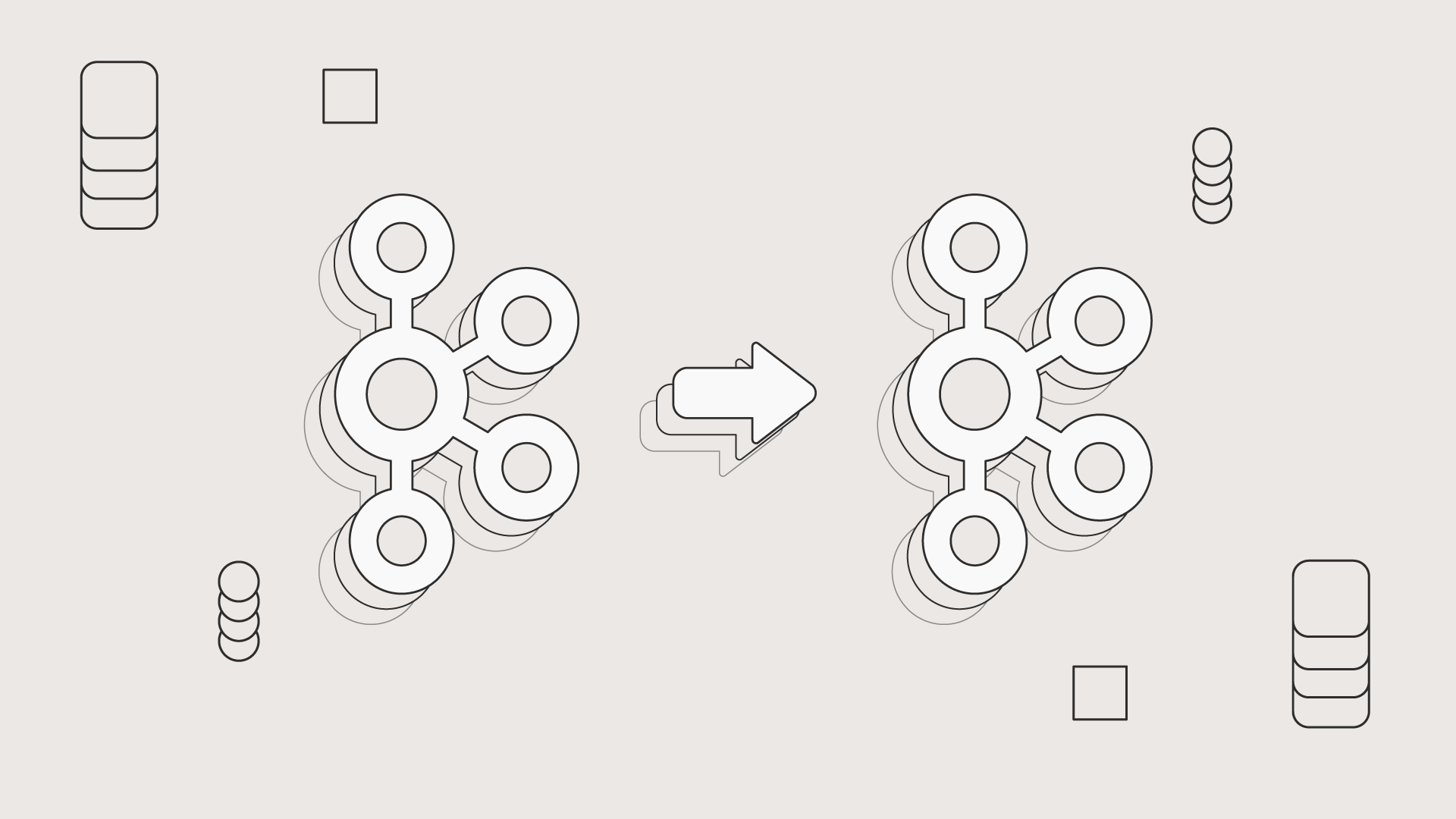

From weeks-long projects to self-service data distribution.

Read this blog to understand:

Why MirrorMaker2 and vendor-specific solutions for data replication create platform team bottlenecks

How Kubernetes-native data replication removes these friction points

What self-service data distribution looks like in practice

An engineering team needs to test their fraud detection algorithm with production data in staging for 30 minutes. Simple request, right? Not when it requires a three-week project involving overworked platform teams, complex Kafka Connect configurations, and vendor-specific deployment pipelines.

This scenario plays out daily across organizations building real-time applications that need self-service Kafka data replication.

The streaming industry has lived with a lack of good replication options for too long. Every business building data-driven products needs an efficient supply chain for moving real-time data, yet current solutions create more friction than they remove.

The Kafka replication landscape offers limited choices, and none of them are particularly appealing for modern engineering teams.

MirrorMaker2 dominates because it's the only vendor-agnostic option, but it's showing its age. Built on the Kafka Connect framework, it inherits all of Connect's widely acknowledged problems. Key committers have openly admitted they would redevelop it from scratch if they could. The project lacks active maintenance and is missing features that modern workloads require. Evolving it often means upgrading the entire Connect framework, which adds complexity instead of reducing it.

The operational reality is harsh: MM2 deployments come with significant compute costs and operational overhead. There's no built-in developer experience, no governance layer, and configuration requires specialized knowledge that most teams don't have.

Confluent's alternatives, Replicator and Cluster Linking, solve some technical problems but create strategic ones. Cluster Linking only performs exact replication between Confluent clusters, and it's designed for disaster recovery, not data sharing: you can't filter, transform, or route subsets of data. Most critically, it locks you into Confluent.

Meanwhile, cloud-specific solutions like AWS MSK Replicator offer managed convenience but zero flexibility. Your target MSK cluster must be in the same AWS account, transformation capabilities don't exist, and you're locked into a single cloud provider's ecosystem.

Current replication solutions turn platform teams into bottlenecks instead of enablers. Replication has historically been an infrastructure responsibility because it required tight control and specialized skills. But the teams that need replication most are product and application developers.

Platform engineers spend time maintaining complex replication configurations instead of building capabilities that accelerate development teams. Every replication request becomes a project requiring specialized resources and lengthy approval processes.

Self-service becomes impossible when tools require deep streaming infrastructure expertise. Teams that should be iterating on algorithms and features instead wait for platform team availability.

Modern Kafka replication needs to match how modern applications are built and deployed. This means Kubernetes-native design, vendor neutrality, and developer-friendly interfaces.

Kubernetes-native architecture eliminates the dependency on Kafka Connect and its associated complexity. Applications can scale naturally with cluster resources, integrate with existing CI/CD pipelines, and leverage standard Kubernetes security and monitoring patterns. The compute cost reduction compared to Connect-based solutions is substantial, which is particularly important because replication workloads can consume significant infrastructure resources.

Vendor neutrality matters because few organizations run a single Kafka distribution. Your core IT might use Confluent, for example, while your Manufacturing teams use AWS MSK and Data Science uses Strimzi. Infrastructure should enable this flexibility rather than constrain it through vendor lock-in.

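Schema Registry handling presents a unique challenge when copying data between clusters with different registry vendors (e.g., the source is Confluent Schema Registry and the target is AWS Glue Schema Registry). Records reference their schemas through registry-specific identifiers, so replication has to translate those references rather than copy bytes unchanged.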
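To make the problem concrete, here is a minimal sketch of re-framing a record as it crosses registries. The Confluent framing (a zero magic byte followed by a 4-byte big-endian schema ID) is the documented wire format. The Glue header constants below (a version byte, a compression byte, then a 16-byte schema version UUID) are assumptions that should be checked against the AWS documentation, and `id_map` is a hypothetical lookup table that something else would need to populate by registering the schemas in the target registry.

```python
import struct
import uuid

CONFLUENT_MAGIC = 0       # Confluent framing: magic byte + 4-byte big-endian schema ID
GLUE_HEADER_VERSION = 3   # assumption: Glue header version byte, verify against AWS docs
GLUE_NO_COMPRESSION = 0   # assumption: "uncompressed" marker, verify against AWS docs


def parse_confluent(record: bytes) -> tuple[int, bytes]:
    """Split a Confluent-framed record into (schema_id, payload)."""
    if len(record) < 5 or record[0] != CONFLUENT_MAGIC:
        raise ValueError("not a Confluent-framed record")
    (schema_id,) = struct.unpack(">I", record[1:5])
    return schema_id, record[5:]


def frame_glue(schema_version_id: uuid.UUID, payload: bytes) -> bytes:
    """Prefix the payload with the assumed Glue-style header."""
    header = bytes([GLUE_HEADER_VERSION, GLUE_NO_COMPRESSION])
    return header + schema_version_id.bytes + payload


def translate(record: bytes, id_map: dict[int, uuid.UUID]) -> bytes:
    """Swap the source schema reference for the target one; the payload is untouched."""
    schema_id, payload = parse_confluent(record)
    if schema_id not in id_map:
        raise LookupError(f"schema {schema_id} has no counterpart in the target registry")
    return frame_glue(id_map[schema_id], payload)
```

The sketch only rewrites the header. It assumes the serialized payload itself is valid under the schema registered on the target side, which is a separate thing to verify for each serialization format.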

Smart data routing capabilities – topic renaming, merging, splitting – should be configuration, not custom development. Data filtering and masking become essential for compliance when sharing across environments or organizations. These should be declarative capabilities, not complex programming exercises.
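As an illustration of what "configuration, not custom development" could mean, the sketch below models routing, filtering, and masking as plain data that a generic engine evaluates. The `Rule` fields, the topic names, and the `***` mask value are all hypothetical; this is not the configuration schema of any particular product.

```python
import re
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Rule:
    source_pattern: str                              # regex matched against the whole source topic name
    target_topic: str                                # may contain backreferences such as \1
    keep: Optional[Callable[[dict], bool]] = None    # optional filter predicate
    mask_fields: tuple[str, ...] = ()                # field names to redact


def apply_rules(topic: str, record: dict, rules: list[Rule]) -> Optional[tuple[str, dict]]:
    """Return (target_topic, record) for the first rule that accepts the record, else None."""
    for rule in rules:
        match = re.fullmatch(rule.source_pattern, topic)
        if match is None:
            continue
        if rule.keep is not None and not rule.keep(record):
            continue  # try the next rule, which is what makes content-based splitting possible
        masked = {k: ("***" if k in rule.mask_fields else v) for k, v in record.items()}
        return match.expand(rule.target_topic), masked
    return None  # no rule accepted the record, so it is not replicated


RULES = [
    # merge two topics into one and mask a sensitive field
    Rule(r"prod\.(payments|refunds)", "staging.transactions", mask_fields=("card_number",)),
    # split one topic by record content
    Rule(r"prod\.orders", "staging.orders.eu", keep=lambda r: r.get("region") == "EU"),
    Rule(r"prod\.orders", "staging.orders.other"),
    # rename by prefix
    Rule(r"prod\.audit\.(.+)", r"staging.audit.\1"),
]
```

Because the rules are data, they can be reviewed, versioned, and validated like any other configuration, and adding a new route does not require writing or deploying new code.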

From our standpoint, the solution combines enterprise-grade capabilities with consumer-grade ease of use.

Declarative configuration through YAML enables GitOps workflows that platform teams understand and trust. Changes flow through standard CI/CD processes.

Cost control - auto-scaling based on data volume keeps compute costs predictable and efficient.

Control plane & governance - offers developer self-service deployment and control, backed by RBAC and auditing.

Observability - provides visibility into replication status, performance metrics, and resource utilization. Everything integrates with existing observability stacks.

Advanced features - includes data masking during replication and dynamic data routing.
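To give a feel for the cost-control point in the list above, here is a rough sketch of a volume-based scaling decision. The function name, the per-replica capacity figure, and the bounds are illustrative assumptions, not how any specific replicator computes its replica count.

```python
import math


def desired_replicas(
    bytes_per_sec: float,
    per_replica_bytes_per_sec: float,
    partitions: int,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Pick a replica count from observed throughput, within fixed bounds."""
    if per_replica_bytes_per_sec <= 0:
        raise ValueError("per-replica capacity must be positive")
    needed = math.ceil(bytes_per_sec / per_replica_bytes_per_sec)
    # Replicas beyond the partition count would sit idle in a consumer group.
    upper = max(min_replicas, min(max_replicas, partitions))
    return max(min_replicas, min(needed, upper))
```

For example, 50 MB/s of traffic with an assumed capacity of 20 MB/s per replica yields three replicas, while an idle pipeline falls back to the configured minimum.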

Most importantly, exactly-once delivery semantics ensure data integrity without requiring teams to understand the complexities of distributed systems guarantees.

Consumer offset translation enables seamless failover scenarios for disaster recovery use cases.
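Offsets rarely line up between clusters, since filtering, retention, or a later start point all shift them, so a failover needs a mapping from source offsets to target offsets. The sketch below shows one conservative way to do that for a single partition, using periodically recorded checkpoints. The `OffsetTranslator` class is hypothetical and only illustrates the idea.

```python
import bisect
from typing import Optional


class OffsetTranslator:
    """Maps committed source offsets to target offsets for one partition."""

    def __init__(self) -> None:
        self._source: list[int] = []
        self._target: list[int] = []

    def record_checkpoint(self, source_offset: int, target_offset: int) -> None:
        """Remember that source_offset on the source corresponds to target_offset on the target."""
        if self._source and source_offset <= self._source[-1]:
            raise ValueError("checkpoints must arrive in increasing source-offset order")
        self._source.append(source_offset)
        self._target.append(target_offset)

    def translate(self, committed_source_offset: int) -> Optional[int]:
        """Return the target offset of the latest checkpoint at or before the committed offset."""
        i = bisect.bisect_right(self._source, committed_source_offset) - 1
        if i < 0:
            return None  # no checkpoint yet; the caller decides where to start
        return self._target[i]
```

Rounding down to the nearest checkpoint means a consumer that fails over may re-read a few records, but it never skips any. Denser checkpoints shrink that replay window at the cost of more bookkeeping.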

This approach transforms replication from a specialized infrastructure service into a standard developer capability. Teams provision replication resources as easily as they provision compute resources, with the same self-service interfaces and operational patterns they already understand.

The goal: infrastructure that enables rather than constrains data-driven development, with enterprise governance that provides safety without sacrificing velocity.
