Yiannis Glampedakis

Lenses magnified: Enhanced, secure, self-serve developer experience for Kafka

Data access auditing, topic creation rules, and bad-record visibility to boost your confidence in Kafka.

In our world of streaming applications, developers are forever climbing a steep learning curve to stay successful with technologies such as Apache Kafka.

There is no end to the technical debt and detail you need to manage with Kafka. And because it doesn’t come with guardrails to help you out, the stakes for making mistakes are high.

In the worst-case scenario, a single error in a command or configuration could bring your cluster down, force partitions to rebalance, and take your services offline.

Ideally, developers would have self-service control over Kafka without compromising platform availability, neglecting data hygiene, or stumbling over governance tripwires.

At Lenses.io, our goal is always to abstract this complexity away and make Kafka easier to operate at every level, and this release is no different. This time, we hope it helps you answer questions like:

How can I approve certain topic workflows in Kafka?

Does every developer across the organization need to know the semantics of replication factor, retention size, and number of partitions to avoid bringing down a cluster when creating topics?

How can I see at a glance which partitions have been scanned, why a query was terminated, and whether we have reached our limit?

How can I understand who has accessed what data in Kafka?

Such questions led to our latest release, Lenses 4.3, which enhances your everyday workflows with self-service topic creation, deeper data visibility, bad-record handling, data access auditing, and more.

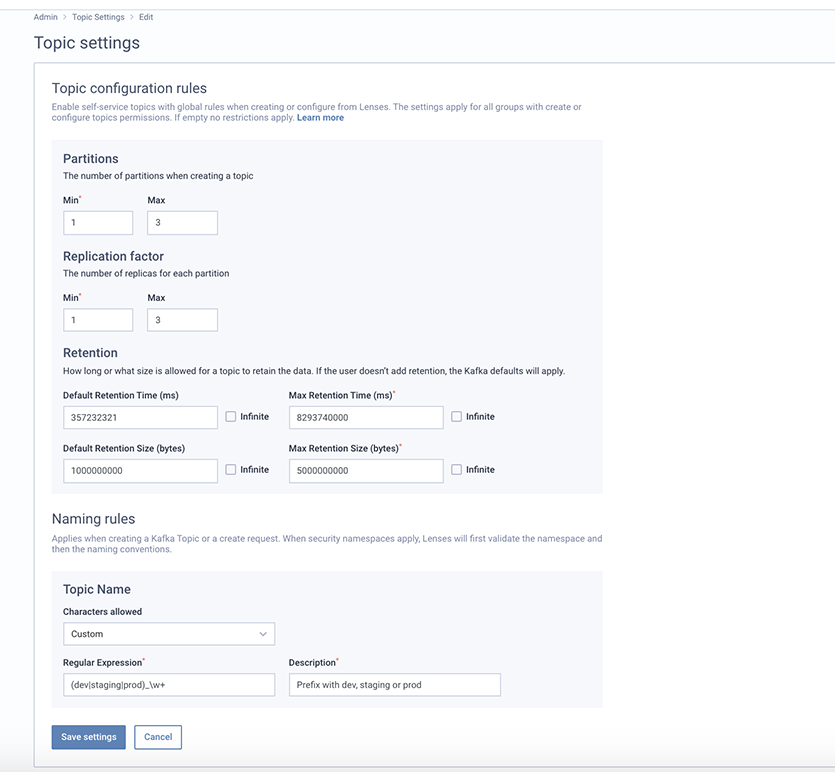

When creating topics, there are a few things you need to control to keep your streaming applications from going south, such as:

Data retention (do you really need to retain data in this topic for 99999999999 ms?)

The number of partitions (did you just roll a die before entering this value?)

The naming convention of the topic itself (is “fifi” the name of your application or the name of your cat?)

A lack of control over these settings can sink your entire Kafka project, leaving you no choice but to stop developers from creating their own topics. We often see the result: developers waiting several weeks for a topic to be created for them.

In Lenses 4.0, we introduced topic creation workflows. Now, in 4.3, we are introducing topic policies, which offer self-service topic creation while protecting the health and hygiene of your data platform.

A platform administrator can now set policies for the allowed number of partitions and for retention and, best of all, apply regular-expression rules that force topic names to follow a naming convention.
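To make the idea concrete, here is a minimal Python sketch of how such a policy check could work, assuming a hypothetical `TopicPolicy` with partition bounds, a maximum `retention.ms`, and a naming regex. It is not the Lenses policy format or API, just an illustration of the kind of rules an administrator defines.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class TopicPolicy:
    """Hypothetical policy shape, for illustration only (not the Lenses config format)."""
    min_partitions: int
    max_partitions: int
    max_retention_ms: int
    name_pattern: str  # regular expression every topic name must fully match


@dataclass(frozen=True)
class TopicRequest:
    """A developer's request to create a topic."""
    name: str
    partitions: int
    retention_ms: int


def violations(policy: TopicPolicy, request: TopicRequest) -> list:
    """Return human-readable policy violations; an empty list means the request is allowed."""
    problems = []
    if not policy.min_partitions <= request.partitions <= policy.max_partitions:
        problems.append(
            f"partitions must be between {policy.min_partitions} and {policy.max_partitions}"
        )
    if request.retention_ms > policy.max_retention_ms:
        problems.append(f"retention.ms must not exceed {policy.max_retention_ms}")
    if re.fullmatch(policy.name_pattern, request.name) is None:
        problems.append(f"name must match {policy.name_pattern!r}")
    return problems


policy = TopicPolicy(
    min_partitions=1,
    max_partitions=12,
    max_retention_ms=7 * 24 * 60 * 60 * 1000,  # one week in milliseconds
    name_pattern=r"[a-z]+\.[a-z]+\.[a-z0-9-]+",  # e.g. team.domain.event-name
)

print(violations(policy, TopicRequest("payments.orders.created", 6, 86_400_000)))  # → []
print(violations(policy, TopicRequest("fifi", 100, 99_999_999_999)))  # three violations
```

Rejecting the request with a list of every violation, rather than failing on the first one, lets a developer fix all problems in a single round trip instead of waiting on an admin.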

Kafka admins get valuable time back: topic creation rules apply globally to every user and group, so admins no longer have to vet every single topic request or build this tooling themselves.

Querying data from a Kafka topic with Lenses is as easy as querying your relational database.

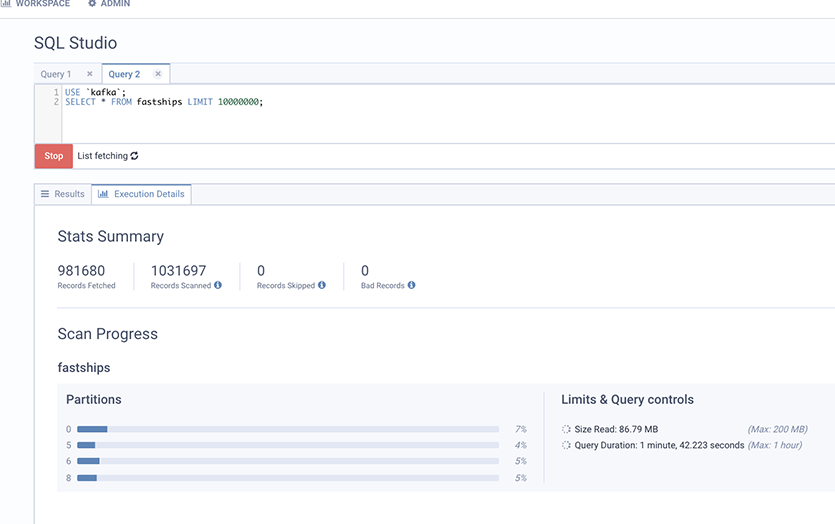

That has been true for a while, but with 4.3 we are giving you a behind-the-scenes view of your queries. Querying a topic with millions of records can be an expensive operation that takes anywhere from seconds to hours.

Lenses can now display information about the scanning process, identify when a query is taking too long, and suggest ways to improve your statement.

In addition, records that cannot be deserialized, or whose messages do not follow the known schema, are now tracked, and their metadata is visible to the user. So if a corrupted record is skipped and not rendered in the results, you’ll still know its partition, offset, and timestamp.
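The general pattern of skipping bad records while keeping their coordinates can be sketched in a few lines of Python. This assumes JSON-encoded values and a hypothetical two-field "schema" (`id` and `amount`); the `RawRecord`/`BadRecord` types are illustrative names, not Lenses internals.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class RawRecord:
    """A record as read from a topic (illustrative type)."""
    partition: int
    offset: int
    timestamp: int  # epoch milliseconds
    value: bytes


@dataclass(frozen=True)
class BadRecord:
    """Metadata kept for a record that was skipped."""
    partition: int
    offset: int
    timestamp: int
    reason: str


REQUIRED_FIELDS = {"id", "amount"}  # hypothetical stand-in for "the known schema"


def scan(records):
    """Split records into decoded rows and metadata about skipped bad records."""
    rows, bad = [], []
    for r in records:
        try:
            payload = json.loads(r.value)
        except ValueError as exc:  # covers JSONDecodeError and UnicodeDecodeError
            bad.append(BadRecord(r.partition, r.offset, r.timestamp, f"deserialization failed: {exc}"))
            continue
        if not isinstance(payload, dict) or not REQUIRED_FIELDS.issubset(payload):
            bad.append(BadRecord(r.partition, r.offset, r.timestamp, "does not match schema"))
            continue
        rows.append(payload)
    return rows, bad


records = [
    RawRecord(0, 41, 1_600_000_000_000, b'{"id": 1, "amount": 9.5}'),
    RawRecord(0, 42, 1_600_000_000_500, b"not json"),
    RawRecord(1, 7, 1_600_000_001_000, b'{"id": 2}'),
]
rows, bad = scan(records)
for b in bad:
    print(b.partition, b.offset, b.timestamp, b.reason)
```

The key design choice is that a bad record never aborts the scan: the query keeps returning good rows, and the skipped records remain traceable by partition and offset.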

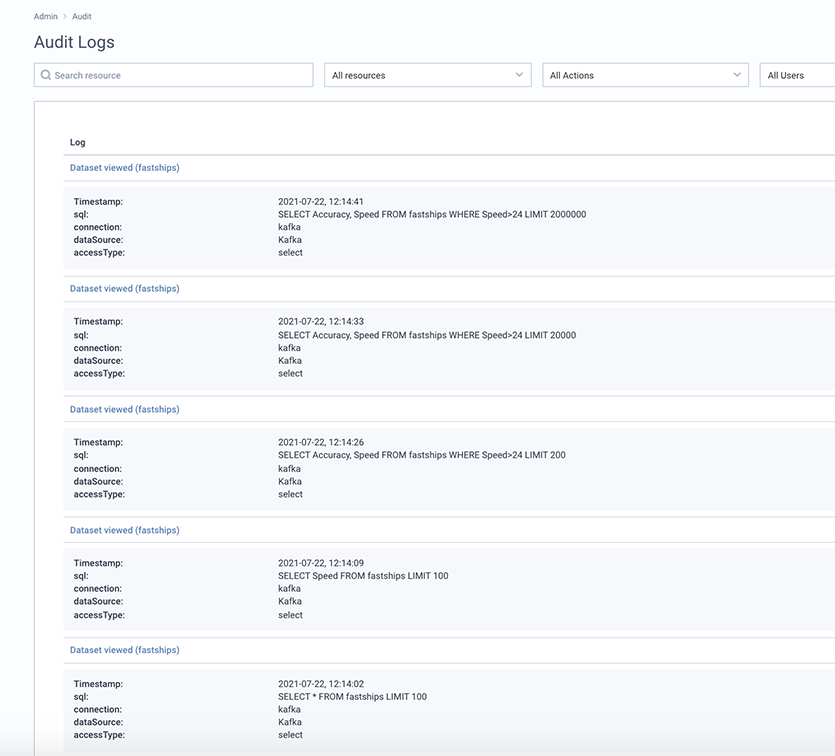

Compliance and security operations teams now have not only visibility into data platform operations but also audits of all user data access. Every time someone queries a dataset, an audit entry is generated showing the user and the query that was run.

This level of auditing is often required for GDPR and HIPAA compliance.

But it also means you can give teams developing on Kafka freer rein, more trust, and more responsibility, knowing that there will be an audit trail of every dataset they query.

Now you can see who accessed your data, when, and how, and, with our Audit integrations, push these events to Splunk or, through a webhook, to your preferred platform.
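As a rough illustration of what forwarding a data-access audit event to a webhook involves, here is a Python sketch using only the standard library. The event fields and the URL are hypothetical; they are not the payload format Lenses actually sends.

```python
import json
import urllib.request
from datetime import datetime, timezone


def audit_event(user: str, query: str, dataset: str) -> dict:
    """Hypothetical audit record: who ran which query against which dataset, and when."""
    return {
        "type": "DATA_ACCESS",
        "user": user,
        "dataset": dataset,
        "query": query,
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }


def webhook_request(url: str, event: dict) -> urllib.request.Request:
    """Build (but do not send) an HTTP POST carrying the event as JSON."""
    body = json.dumps(event).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


event = audit_event("alice", "SELECT * FROM payments LIMIT 10", "payments")
req = webhook_request("https://example.com/audit-hook", event)  # placeholder URL
# urllib.request.urlopen(req)  # would send it; left commented out so the sketch runs offline
```

Building the request separately from sending it keeps the event format easy to inspect and test before it ever leaves the machine.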

We hope you enjoy these new Lenses capabilities.

Take a look at the full 4.3 release notes and keep an eye on the blog for more detailed walkthroughs of these features.
