Andrew Stevenson

Topic & data multi-Kafka governance with your AI-assistant

Lenses MCP Server brings 100x productivity gains on governance with Apache Kafka


If you’ve been running Kafka for a while, with any luck you have quite a few engineering teams onboarded, potentially with hundreds or even thousands of applications.

Hopefully the Lenses.io Developer Experience platform helped in this adoption.

But finding the right balance between governance and openness can be tricky.

Unless you have the governance of Ned Flanders, you may have yourself a little Kafka hygiene problem…

This blog explains, with an example, how the Lenses MCP Server, connected to your AI assistants and agents, brings in a new era of Kafka platform governance.

Let’s take an example with Topics. In many organizations there may be a flexible policy for developers to create Kafka topics.

Some types (e.g. a low number of partitions and a certain retention) may go through without approval, while others require it.

But either way, over time, you end up with a graveyard of unknowns:

Topics that haven’t been written to in months (but are still consuming storage and Confluent credits)

Topics with a large number of partitions but few consumer instances

Topics with no owners, no tags, no descriptions

Topics whose schemas don’t match their actual payloads

Topics that may (or may not) contain sensitive data

And the kicker? It is difficult to tell which topics matter without going on a manual deep dive through clusters and schemas.

For large Kafka estates this becomes unmanageable.
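To make the checks in the list above concrete, here is a minimal Python sketch of what a hand-rolled hygiene script might look like. Everything in it is an assumption for illustration: the `TopicInfo` shape, the 90-day staleness window and the partitions-per-consumer ratio are hypothetical and are not part of Lenses or Kafka.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class TopicInfo:
    """Hypothetical snapshot of one topic (not a Lenses or Kafka data model)."""
    name: str
    partitions: int
    consumer_instances: int
    last_write: Optional[datetime]  # None if no write was ever observed
    owner: str = ""
    description: str = ""
    tags: list = field(default_factory=list)


def hygiene_issues(topic, now, stale_after=timedelta(days=90),
                   max_partitions_per_consumer=10):
    """Return a list of hygiene flags for one topic.

    The thresholds are illustrative defaults, not recommendations.
    """
    issues = []
    # Stale: never written to, or last write older than the window.
    if topic.last_write is None or now - topic.last_write > stale_after:
        issues.append("stale")
    # Over-partitioned: far more partitions than consumers can use.
    consumers = max(topic.consumer_instances, 1)
    if topic.partitions > max_partitions_per_consumer * consumers:
        issues.append("over-partitioned")
    # Unclassified: owner, description or tags are missing.
    if not (topic.owner and topic.description and topic.tags):
        issues.append("missing-metadata")
    return issues
```

Even this toy version needs last-write times, consumer counts and metadata gathered for every topic in every cluster first, which is where most of the manual effort goes. It also says nothing about schema mismatches or sensitive data, which require looking inside the payloads.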

Kafka housekeeping could easily be a full-time job for several people. In most cases, it simply doesn’t get done. The result?

High cloud costs from stale data or over-partitioned topics

Performance problems due to misconfigured topics and applications

Compliance risks from sensitive data being exposed and unprotected

Low productivity because data is unclassified

Hopefully, you’re using Lenses.io as your Developer Experience for Kafka and data. It has multi-Kafka capabilities that unify your Kafka assets across dozens or hundreds of clusters, even if they are from different distributions or vendors.

The Global Data Catalog for example allows engineers to find/explore topics and classify them with metadata (descriptions, tags, data policies). Great!

If you’re not using Lenses though, you may have open source tools and scripts which have no cataloging features at all.

But even when using Lenses, it still takes discipline for an engineer to classify the data, or to clean up a topic that is no longer being used.

Identifying and classifying data may require looking inside the topic, as well as the schema.

Perfectly fine to do, but can be labour intensive if you’re doing it across hundreds or even thousands of topics. And let’s be honest, most of the time it’s just not done.

This work is a drag.

This is where things get very interesting.

The Lenses.io MCP Server brings Kafka into the world of natural language interaction, intelligence and automation.

Built on the Model Context Protocol (MCP), it allows engineers to query their Kafka clusters and Kafka assets (topics, schemas, ACLs, quotas, apps, etc.) directly from any tool-compatible AI chatbot or IDE, such as VS Code, Claude Code, Cursor, or IntelliJ.

Everything you can do in the Lenses UI or API, you can do via the Lenses MCP, except on steroids and with the intelligence of an LLM to boot.

Imagine having a Kafka-aware AI Agent that has safe (and audited) access to your Kafka environments, of any vendor, and whose intelligence understands the streaming paradigm (i.e. what Kafka configs should be set to, what makes a good partitioning strategy, etc.).

Instead of scripting or clicking, you describe your intent in your AI Agent and the Agent does the heavy lifting whilst you switch your context to something more interesting.

Let’s walk through a scenario most data platform engineers will recognize: describing and categorizing topics, and automating the creation of data policies.

The walkthrough demonstrates something that could take weeks or months in a real environment (or never get done at all), completed in a couple of minutes with an AI Agent and Lenses MCP.

To make the environment as representative of a real one as possible, I’m going to include topic data in different serialisations: XML, Avro and JSON.

I’ve configured my Anthropic Claude client to connect to the Lenses MCP. I’m also using Context7 to ensure my assistant has access to the Lenses documentation. For Context7, create an API token and paste it into the configuration.
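As a rough sketch of what that client configuration looks like, the Python below builds a JSON document in the `mcpServers` layout that MCP clients such as Claude Desktop read. The launch commands and the Context7 token argument are deliberately left as placeholders, because they are assumptions here; take the real values from the Lenses MCP and Context7 documentation.

```python
import json


def build_mcp_config(servers):
    """Return JSON text using the `mcpServers` layout read by MCP clients.

    `servers` maps a server name to a dict with "command", optional "args"
    and optional "env". The helper itself is hypothetical, for illustration.
    """
    config = {"mcpServers": {}}
    for name, spec in servers.items():
        entry = {
            "command": spec["command"],
            "args": list(spec.get("args", [])),
        }
        # Only emit "env" when there is something to pass to the server.
        if spec.get("env"):
            entry["env"] = dict(spec["env"])
        config["mcpServers"][name] = entry
    return json.dumps(config, indent=2)


# Placeholder values only: replace them with the commands from each
# server's own documentation and with your real credentials.
example_config = build_mcp_config({
    "lenses": {
        "command": "<lenses-mcp-launch-command>",
        "args": [],
        "env": {"LENSES_API_KEY": "<your-service-account-key>"},
    },
    "context7": {
        "command": "<context7-launch-command>",
        "args": ["<context7-api-token-argument>"],
    },
})
print(example_config)
```

Generating the file from code rather than editing it by hand is optional. The point is only to show the shape: one named entry per MCP server, each with a command, arguments and environment variables.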

Lenses MCP requires a Lenses service account (LENSES_API_KEY) on Lenses HQ, with OAuth2 support coming soon. The service account needs an IAM Role created with the necessary permissions.

In this workflow, I’m using the free Lenses Community Edition, deployed on my local Docker, which comes pre-packaged with a localhost Kafka cluster. I’ve created some producers to inject different types of data into different topics.

If you want to insert data manually, you can do this from the SQL Studio with the commands:

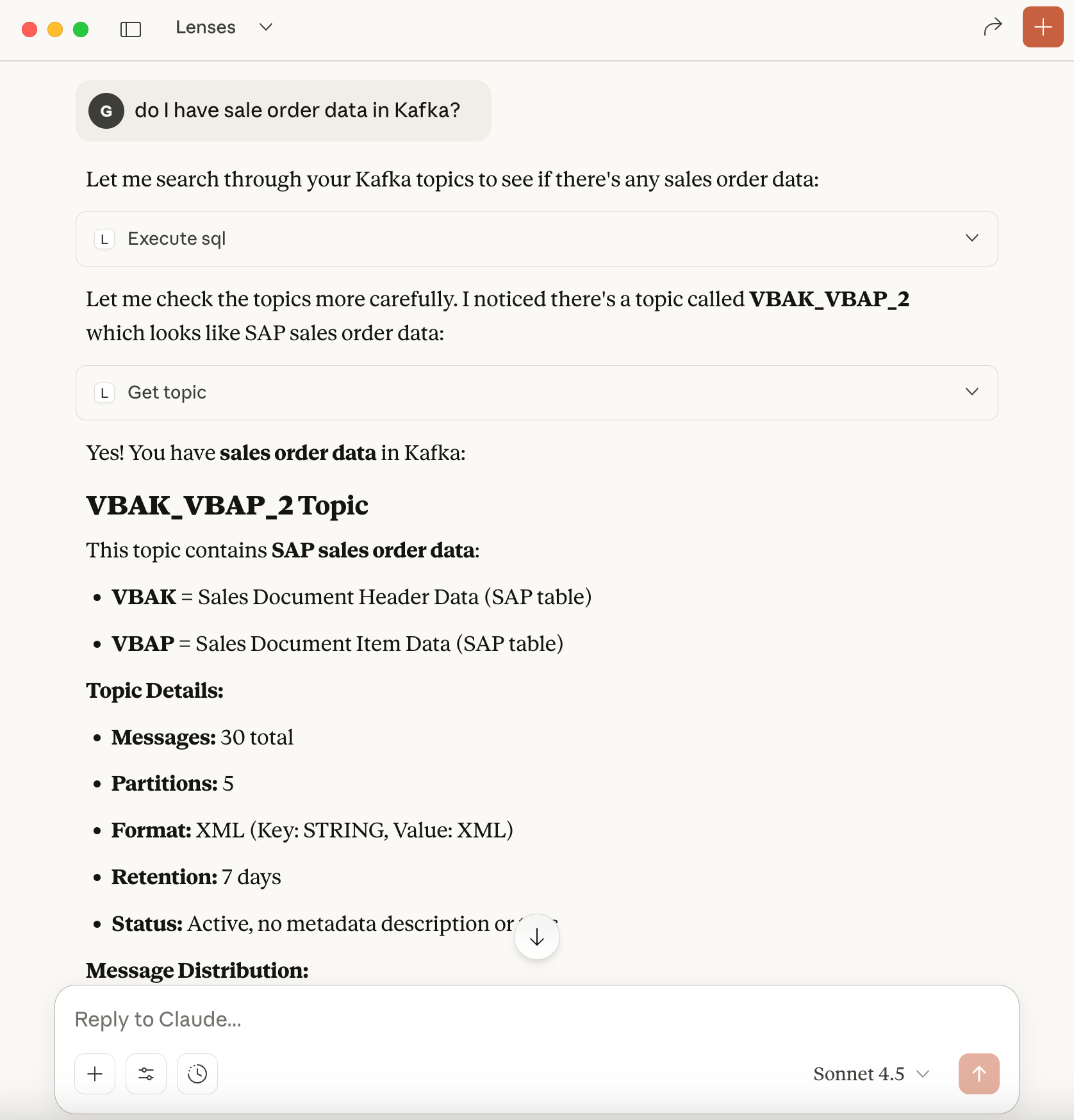

To show the full power of Lenses and MCP, let’s inject some Sales Order data into this topic. I have purposely named the topic after an SAP table.

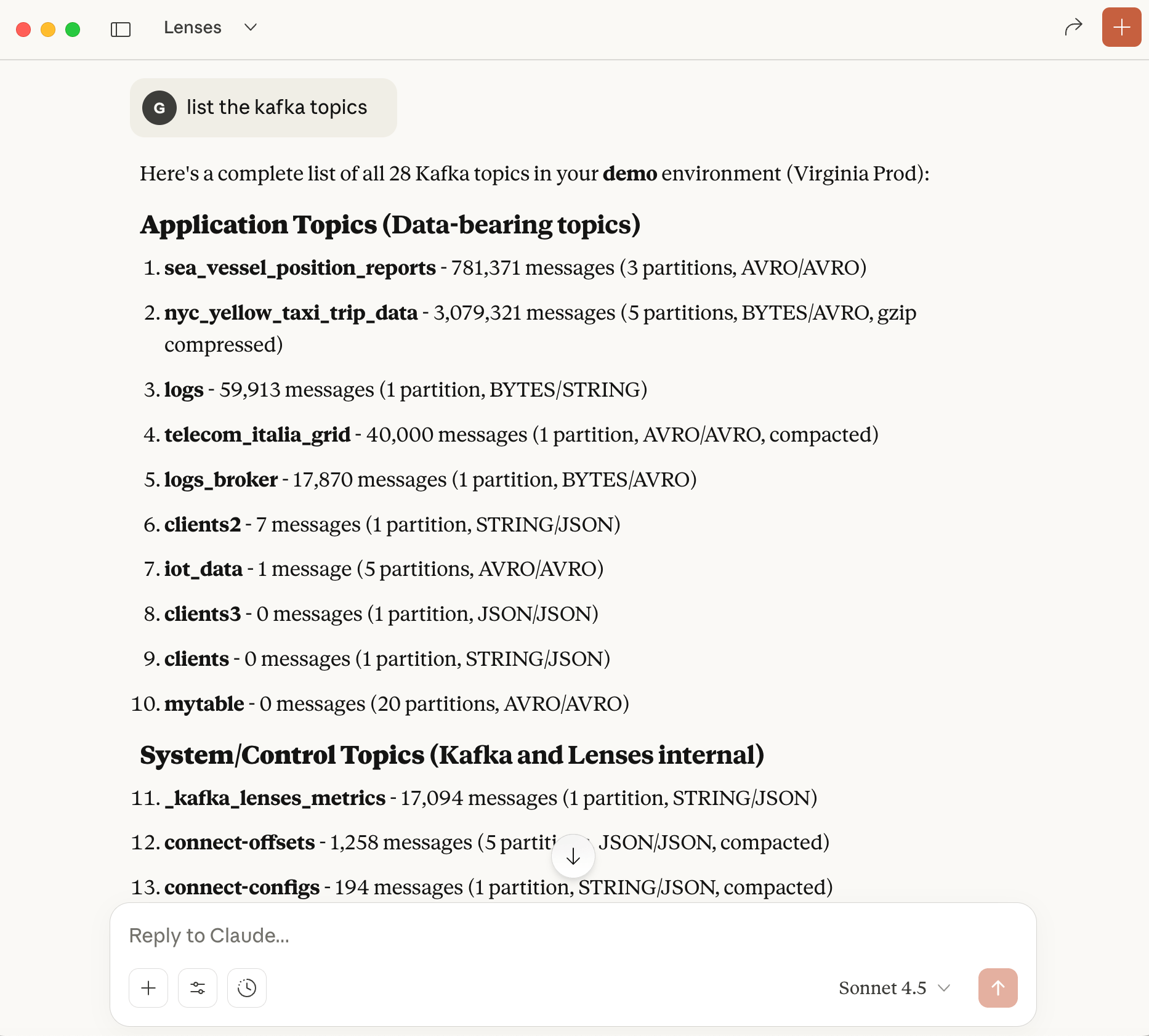

It's useful to use your first prompt to confirm that Claude (or your AI assistant) can see the different Kafka environments and is correctly connected to your Lenses HQ.

List environments

In the second prompt, reference Context7. This avoids having to reference it again in later prompts. For example:

List the Kafka topics. use Context7

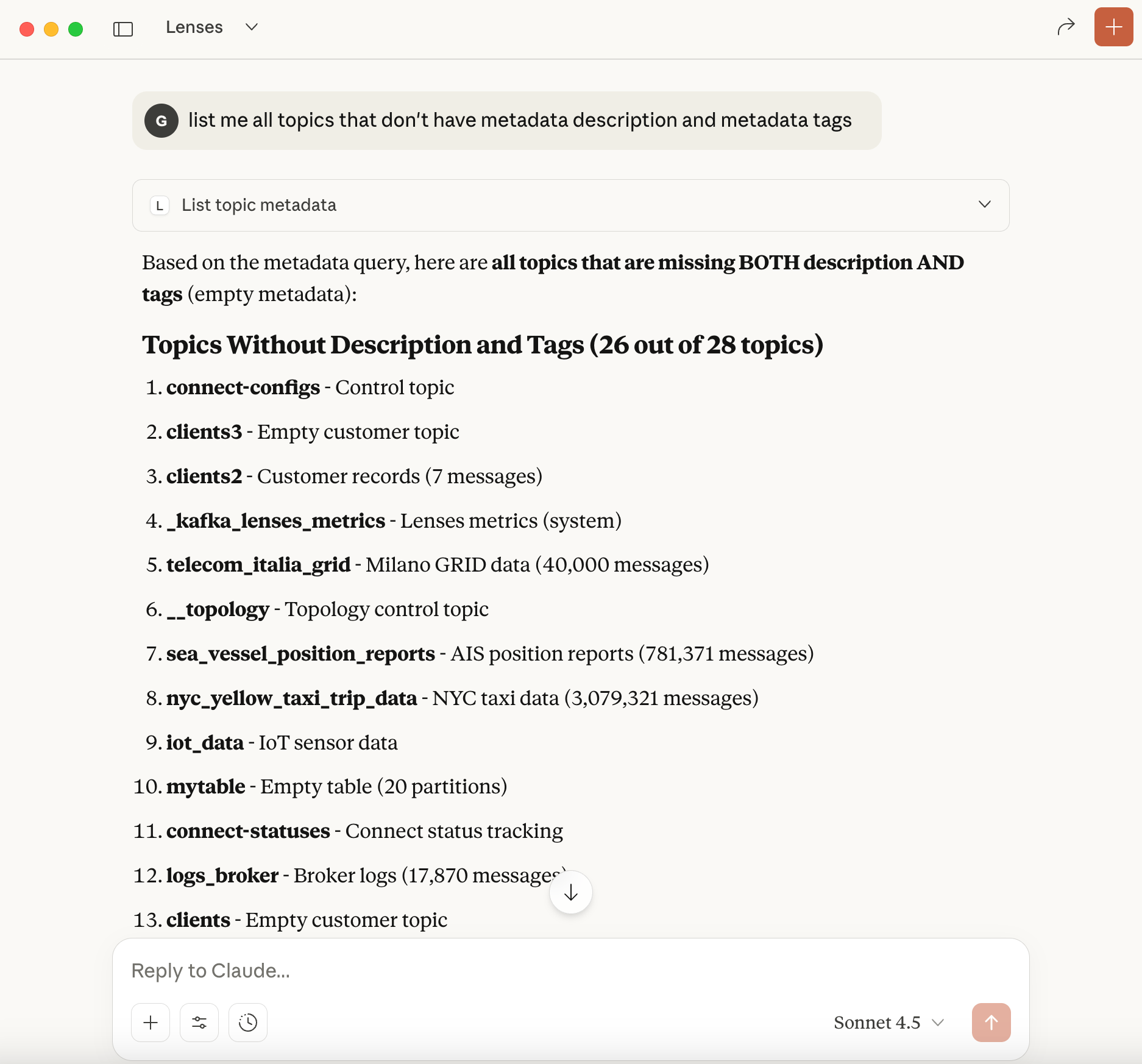

list all topics that don't have metadata description and metadata tags

do I have sales order data in Kafka?

The AI is able to find Sales Order by identifying a topic name that is commonly associated with an SAP table of Sales Orders. Amazing!

Let’s go further and ask Claude to describe & classify all topics by exploring the schema and a sample of payload events.

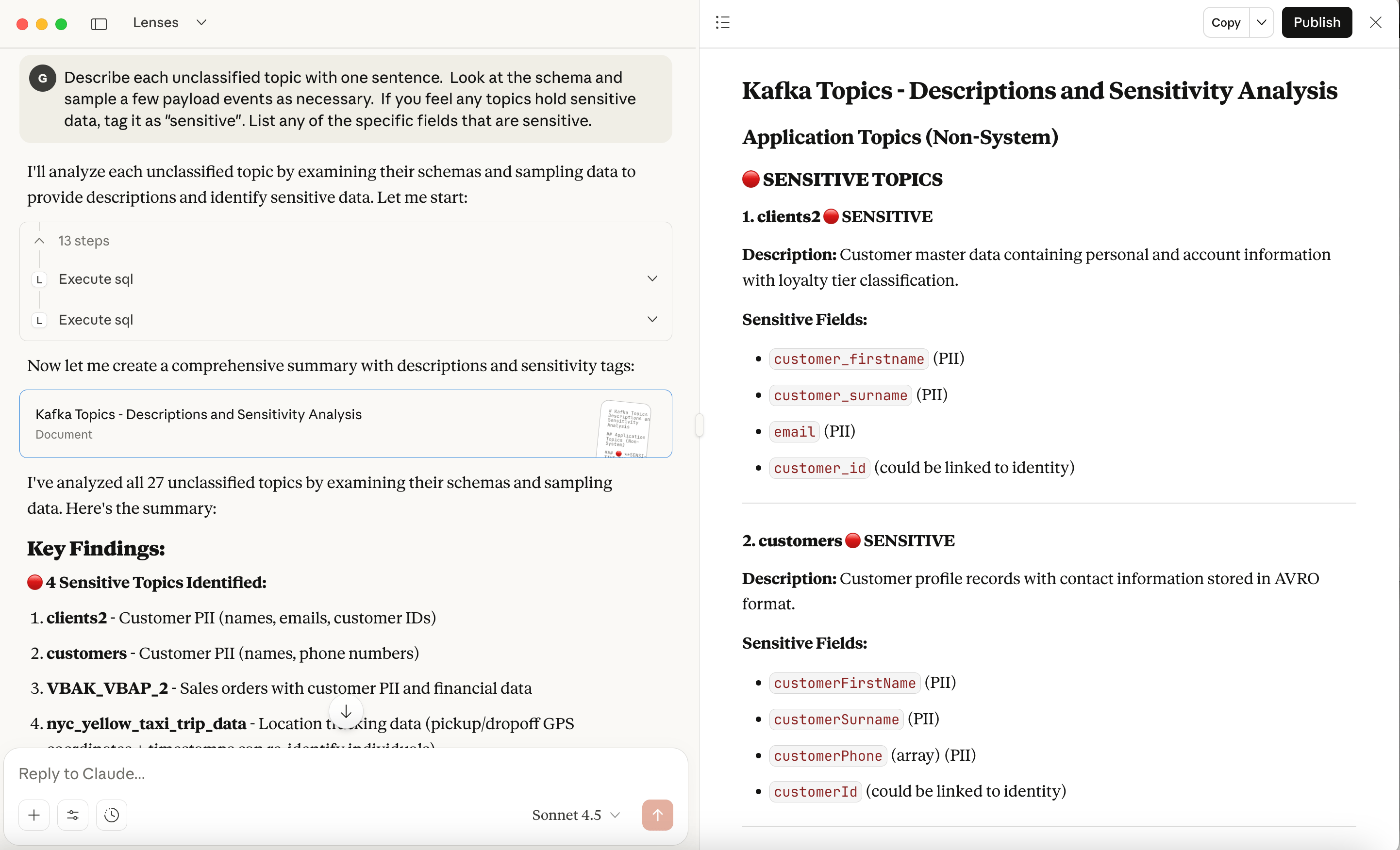

Describe each unclassified topic with one sentence. Look at the schema and sample a few payload events as necessary. If you feel any topics hold sensitive data, tag it as "sensitive". List any of the specific fields that are sensitive.

This task alone may have taken weeks or even months if done manually.
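To give a sense of what even a crude manual version involves, here is a minimal Python sketch that scans sampled JSON events for field names that look sensitive. It is only a naive keyword baseline and not how the AI assistant or Lenses classifies data. The keyword list and the `sensitive_fields` helper are hypothetical, and XML or Avro payloads would first need decoding into dictionaries.

```python
import json
import re

# Illustrative keyword list only; a real policy would be far broader.
SENSITIVE_PATTERN = re.compile(
    r"(email|phone|ssn|passport|card|iban|birth|address|password)",
    re.IGNORECASE,
)


def sensitive_fields(sample_events):
    """Return sorted dotted paths of fields whose names look sensitive.

    `sample_events` is an iterable of JSON strings sampled from one topic.
    """
    found = set()

    def walk(value, path):
        if isinstance(value, dict):
            for key, child in value.items():
                child_path = f"{path}.{key}" if path else key
                if SENSITIVE_PATTERN.search(key):
                    found.add(child_path)
                walk(child, child_path)
        elif isinstance(value, list):
            # List items share the path of the field that holds them.
            for item in value:
                walk(item, path)

    for raw in sample_events:
        walk(json.loads(raw), "")
    return sorted(found)
```

A name-based check like this misses sensitive values hidden behind opaque field names, such as the SAP-style naming used earlier, which is exactly where sampling payloads and applying some judgement helps.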

But of course, the power of AI Agents and MCP isn’t just to return information, but to automate tasks.

Let’s turbocharge our governance by updating the metadata with the results of this analysis and putting data protection in place:

Based on your analysis and report: 1. Update the metadata description of each topic. 2. Tag each topic as "sensitive" for any topics that have sensitive information

Good governance and Kafka hygiene have always been a grind. Most teams ignore them until audit time and then spend weeks, if not months, catching up. Meanwhile, the neglect hurts costs, developer productivity and compliance posture.

Introducing AI assistance into data operations revolutionises data governance.

It can:

Turn hours of discovery into seconds

Continuously improve data quality and governance

Prevent compliance gaps before they happen

Save thousands in infrastructure costs by identifying unused topics

The AI isn’t just automating tasks; it brings its own intelligence and knowledge of managing Kafka (including best practices and configurations).

Keeping your clusters clean, compliant, and efficient shouldn’t require a small army of engineers. We demonstrated how AI assistants connected to Lenses via MCP drastically simplify and accelerate governing a large Kafka estate.

Great Kafka governance is critical if you want to keep your Kafka costs down, ensure compliance and drive engineering adoption. Learn more about Lenses MCP and Lenses Agents or see the docs for Lenses MCP Servers.
