Christina Daskalaki

Lenses 1.1 Release

The Lenses 1.1 release offers the first Apache Kafka Topology View, where you can see your entire data flows over Apache Kafka, along with Kubernetes deployment and much more.


We are super excited to announce the new Lenses release, v1.1!

Lenses is a streaming platform for Apache Kafka that supports the core elements of Kafka and adds vital enterprise features and a rich web interface to simplify your Kafka development and operations. Lenses also ships with a free single-broker development environment, which provides a preconfigured Kafka setup with connectors and examples for your local development.

Since November’s release, Lenses has been widely adopted. We would like to thank you all for your valuable feedback, which we have taken into account in this release.

Let’s see what’s new!

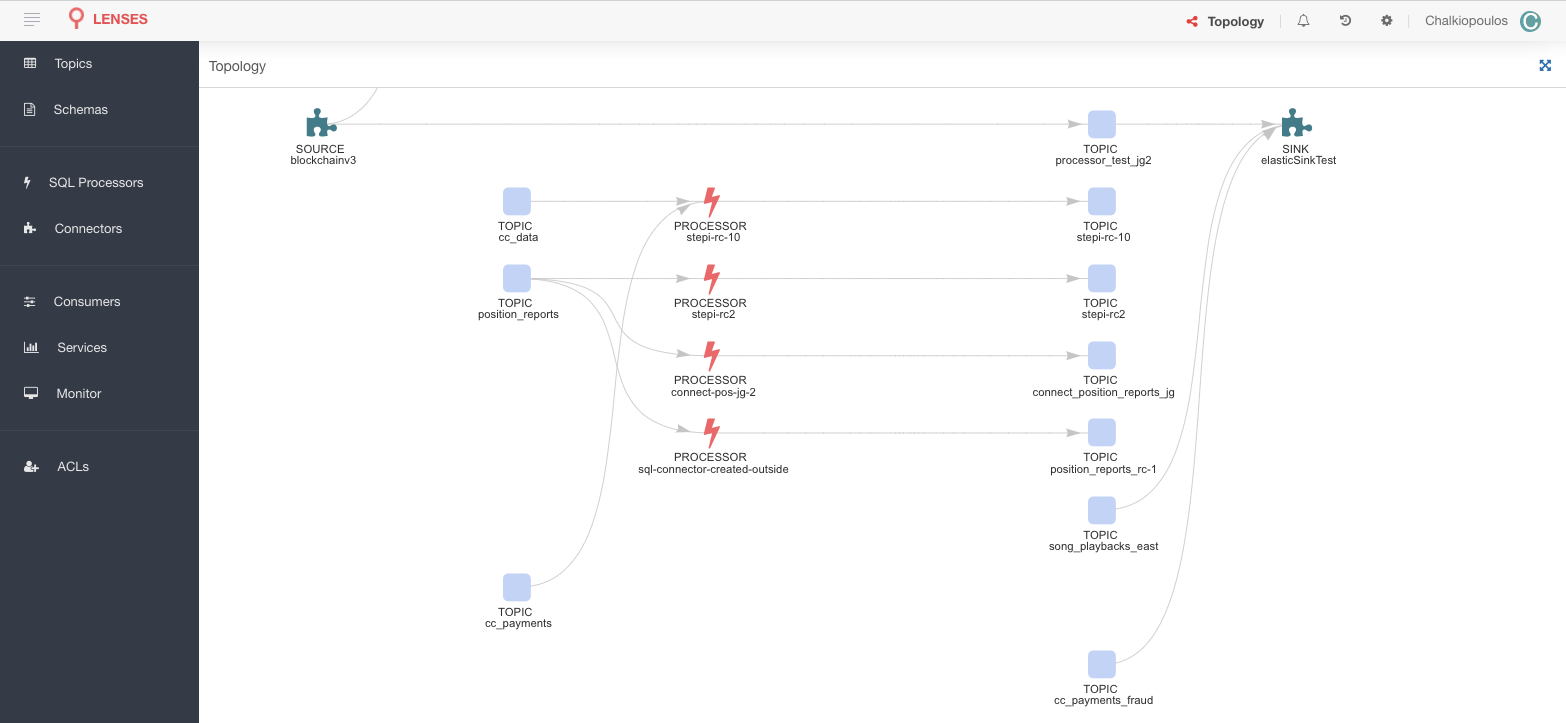

We are introducing the first Apache Kafka Topology View, where you can see all of your data flows over Apache Kafka via Lenses.

Built for Apache Kafka data flows

Visual ETL data journeys

Interactive Nodes: connectors, processors, topics

Lenses SQL support and visualization

Intuitive user experience

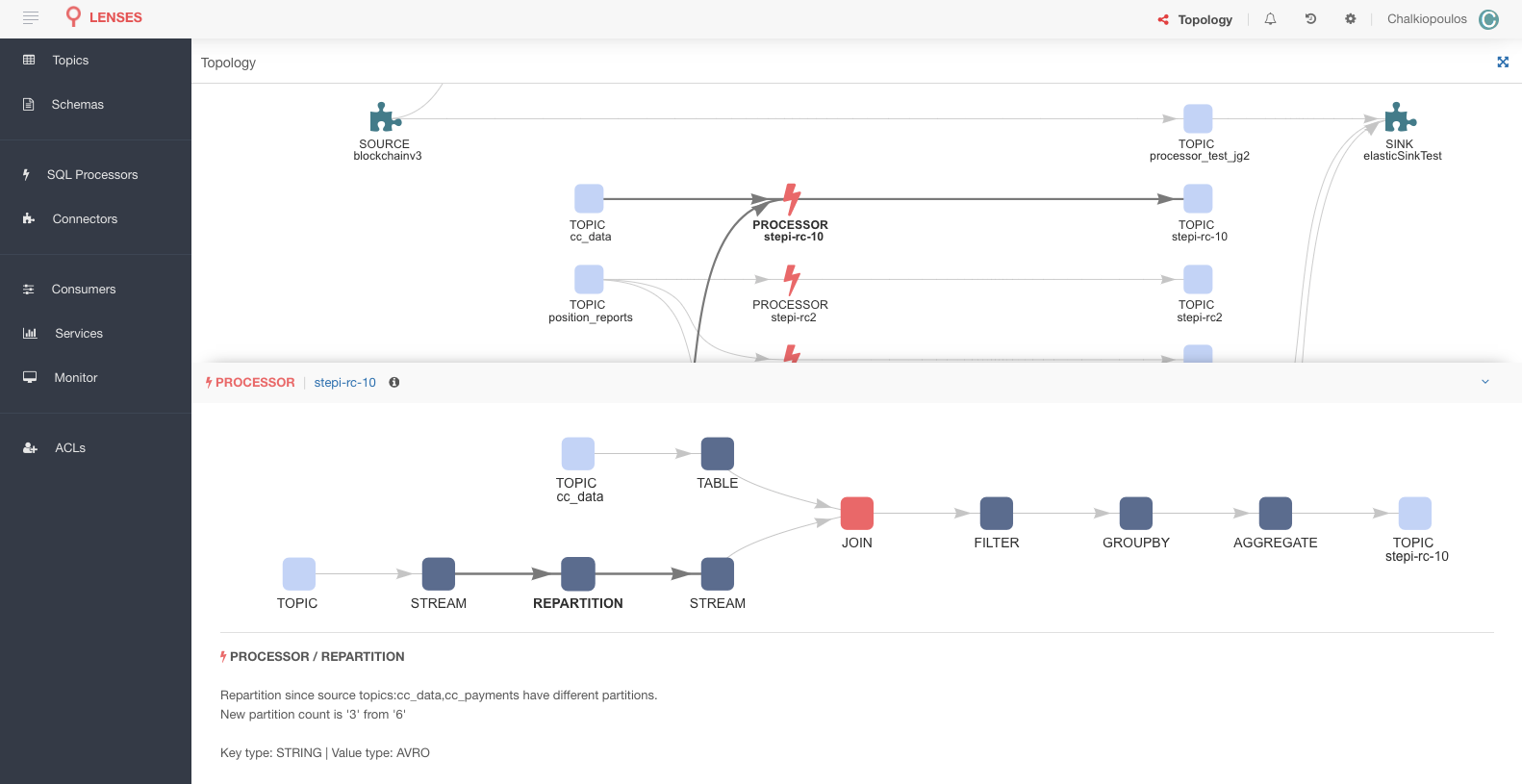

What you see above is the full topology view, displaying sinks and sources, SQL processors and the related Kafka topics. You can interact with each node in the graph to get more details about that processing step, as shown in the image below, so you can better track the journey of your data. We can’t stress enough the benefits of this.

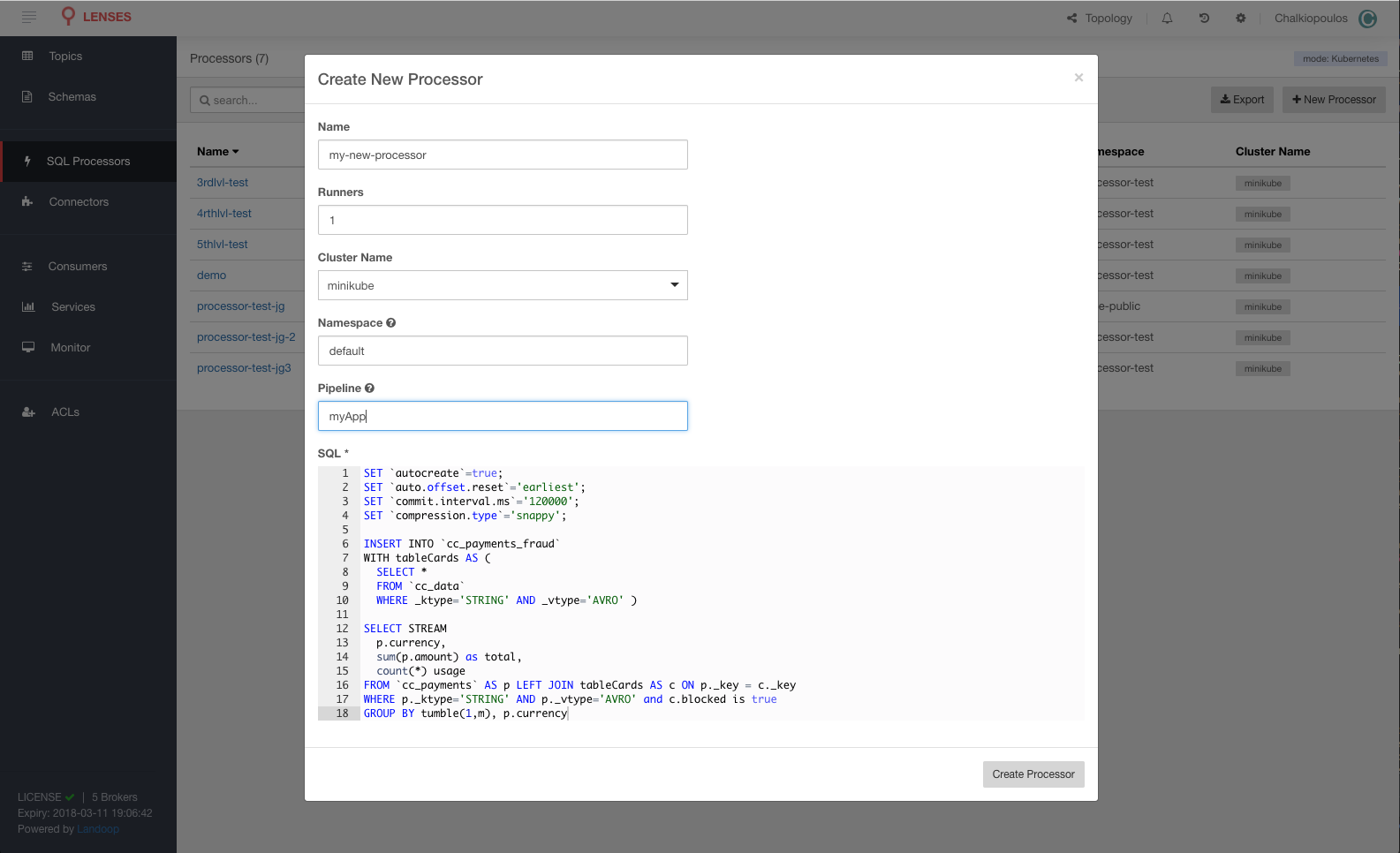
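To make the idea of a data journey concrete, here is a minimal sketch of how such a topology could be modelled as a directed graph of connectors, processors and topics. It is only an illustration: the `Topology` class, its methods and the node names are hypothetical and are not part of Lenses or its API.

```typescript
// Hypothetical model of a topology graph. None of these names come from Lenses.
type NodeKind = "connector" | "processor" | "topic";

interface TopologyNode {
  id: string;
  kind: NodeKind;
}

class Topology {
  private nodes = new Map<string, TopologyNode>();
  private edges = new Map<string, string[]>();

  addNode(id: string, kind: NodeKind): void {
    this.nodes.set(id, { id, kind });
    if (!this.edges.has(id)) this.edges.set(id, []);
  }

  // Record that data flows from one node to another.
  addFlow(from: string, to: string): void {
    if (!this.nodes.has(from) || !this.nodes.has(to)) {
      throw new Error(`unknown node in flow ${from} -> ${to}`);
    }
    this.edges.get(from)!.push(to);
  }

  // Breadth-first walk listing every node reachable downstream of `start`.
  downstream(start: string): TopologyNode[] {
    const seen = new Set<string>([start]);
    const queue: string[] = [start];
    const result: TopologyNode[] = [];
    while (queue.length > 0) {
      const current = queue.shift()!;
      for (const next of this.edges.get(current) ?? []) {
        if (!seen.has(next)) {
          seen.add(next);
          queue.push(next);
          result.push(this.nodes.get(next)!);
        }
      }
    }
    return result;
  }
}

// Example pipeline: source connector -> topic -> SQL processor -> topic -> sink connector.
const topology = new Topology();
topology.addNode("source-connector", "connector");
topology.addNode("raw-events", "topic");
topology.addNode("filter-processor", "processor");
topology.addNode("filtered-events", "topic");
topology.addNode("sink-connector", "connector");
topology.addFlow("source-connector", "raw-events");
topology.addFlow("raw-events", "filter-processor");
topology.addFlow("filter-processor", "filtered-events");
topology.addFlow("filtered-events", "sink-connector");

const journey = topology.downstream("raw-events").map((n) => `${n.kind}:${n.id}`);
console.log(journey.join(" -> "));
// prints "processor:filter-processor -> topic:filtered-events -> connector:sink-connector"
```

Starting the walk from a topic answers the question "where does this data end up?", which is the kind of question the topology view lets you answer visually.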

Kubernetes deployment for Lenses SQL Processors is fast, easy and scalable. Lenses is the first and only platform that allows you to deploy, manage and monitor SQL processors on Kafka in Kubernetes. Scaling out Lenses SQL Processors via Kubernetes is now only a few clicks away with the new UI support!

What are Lenses SQL Processors?

Lenses SQL Processors is our generic term for components that process Apache Kafka streaming data. They are Kafka Streams apps that are coded via Lenses SQL and benefit from enterprise-grade deployment, execution and fault tolerance.

Build and deploy Kafka stream apps with Lenses SQL Processors straight to Kubernetes

Visual Runners, state management and pipeline

Multiple cluster and namespace support

Lenses supports native Kubernetes deployments for Lenses SQL Processors.

In this version you can create processor runners and scale them up or down from the UI; each runner is allocated to a Kubernetes pod.

Of course you can still pause or resume the processors.

The new version supports multiple Kubernetes clusters. You can specify the Namespace to better organize your pods, and the Pipeline, which acts as an additional label attached to the metrics of the pods. If you are using our Operational Monitoring solution, the pipeline label will also be attached to your JMX metrics.

If you don’t use Kubernetes, Lenses also supports scalability via Kafka Connect, which is part of the Apache Kafka distribution. Lenses uses the Connect workers to distribute the Lenses SQL execution.

The purpose of Lenses is to simplify the way you build streaming applications for Apache Kafka. Our powerful Lenses SQL engine allows you to run batch or streaming queries straight from the Lenses UI or via our WebSocket endpoints. We have seen that developers often build frontend applications that use the data in their Kafka cluster. For this reason we have built a Redux middleware that wraps all the major actions required to publish and subscribe to Kafka topics.

To connect your Redux web application to live Apache Kafka data, have a look at the redux-lenses-streaming library. Follow this blog post to see how to produce and subscribe to Kafka data in your JavaScript app.

The library is published on npm, so to use it you only need to run:

npm i --save redux-lenses-streaming rxjs

and follow the documentation to connect your app to Lenses.

By using the library you can fire Lenses SQL queries that filter the data you are subscribing to, specify the deserializers, and create custom views of your data or your analytics.
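The general pattern of a Redux middleware that wraps publish and subscribe actions can be sketched as follows. This is not the redux-lenses-streaming API: the action names, the `Transport` interface, the message shapes and the query text are all hypothetical, and the store is reduced to a stand-in so the sketch runs without any dependencies. Refer to the library documentation for the real actions and setup.

```typescript
// Illustrative only: hypothetical action names and types, NOT the
// redux-lenses-streaming API.
type Action =
  | { type: "SUBSCRIBE"; sql: string }
  | { type: "PUBLISH"; topic: string; value: string }
  | { type: "MESSAGE_RECEIVED"; payload: string };

// Stand-in for a WebSocket connection, so the sketch runs anywhere.
interface Transport {
  send(data: string): void;
  onMessage(handler: (data: string) => void): void;
}

type Dispatch = (action: Action) => void;
interface StoreApi {
  dispatch: Dispatch;
}
// Same curried shape as a Redux middleware: store => next => action.
type Middleware = (store: StoreApi) => (next: Dispatch) => Dispatch;

function createStreamingMiddleware(transport: Transport): Middleware {
  return (store) => {
    // Incoming messages are turned into ordinary actions for the reducers.
    transport.onMessage((data) => {
      store.dispatch({ type: "MESSAGE_RECEIVED", payload: data });
    });
    return (next) => (action) => {
      if (action.type === "SUBSCRIBE") {
        transport.send(JSON.stringify({ kind: "subscribe", sql: action.sql }));
      } else if (action.type === "PUBLISH") {
        transport.send(
          JSON.stringify({ kind: "publish", topic: action.topic, value: action.value })
        );
      }
      // Every action still continues down the chain.
      next(action);
    };
  };
}

// Wiring with a fake transport and a fake reducer step that records action types.
const sent: string[] = [];
let deliver: (data: string) => void = () => {};
const fakeTransport: Transport = {
  send: (data) => {
    sent.push(data);
  },
  onMessage: (handler) => {
    deliver = handler;
  },
};

const reduced: string[] = [];
const reducerStep: Dispatch = (action) => {
  reduced.push(action.type);
};
const storeApi: StoreApi = { dispatch: (action) => dispatch(action) };
const dispatch: Dispatch = createStreamingMiddleware(fakeTransport)(storeApi)(reducerStep);

// Hypothetical query text; a component would dispatch this to start a subscription.
dispatch({ type: "SUBSCRIBE", sql: "SELECT * FROM payments WHERE amount > 100" });
// Simulate a message arriving from the server.
deliver('{"amount": 250}');

console.log(sent.length, reduced.join(","));
// prints "1 SUBSCRIBE,MESSAGE_RECEIVED"
```

Keeping the connection handling inside a middleware means components only dispatch plain actions and read plain state, which is the main reason to wrap streaming access this way.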

The Lenses SQL engine can now handle ENUM values in Avro messages and allows pushing configuration down to the underlying RocksDB. It also fixes the min aggregation, applies a set of performance improvements, and improves the execution topology view.

The enterprise-ready Lenses SQL engine has been successfully evaluated in multiple production systems within financial services, corporate environments and other industries. This release incorporates many of the requests that we have received while working on real use cases in the field.

Security is always at the top of our list, and we are providing features that meet your enterprise requirements. In version 1.0 we introduced role-based authentication and auditing for your Kafka operations. In this release we have improved the LDAP integration by introducing pluggable LDAP implementations. Both Active Directory and a variety of LDAP setups are now supported. We have also enabled client SSL certificates: if your Kafka cluster uses TLS certificates for authentication, you can set this up via the Lenses configuration.

Download Lenses now:

If you are running the Lenses Development Environment, don’t forget to docker pull to get the latest updates:

docker pull landoop/kafka-lenses-dev:latest

If you want to connect Lenses to your cluster, contact us now for a demo and trial!
